If we weren't nervous before because LLMs couldn't reason, we should be nervous now: they can definitely speak, in real time, in different voices and languages!

There's a certain "wow" factor to voice UI that doesn't exist in the chatbot realm. Having a humanlike voice interact with you in real time, armed with context, memory, and tools, suddenly opens up a whole new set of possibilities. As LLM-powered free-form conversations arrive, it's time to think beyond IVRs and phone trees.

The Three Pillars: Accuracy, Cost, and Latency

What does this mean exactly? Three constraints have made voice agents challenging (the same ones that apply to text-based chatbots, with the levels cranked higher): accuracy, cost, and latency. Let's break them down:

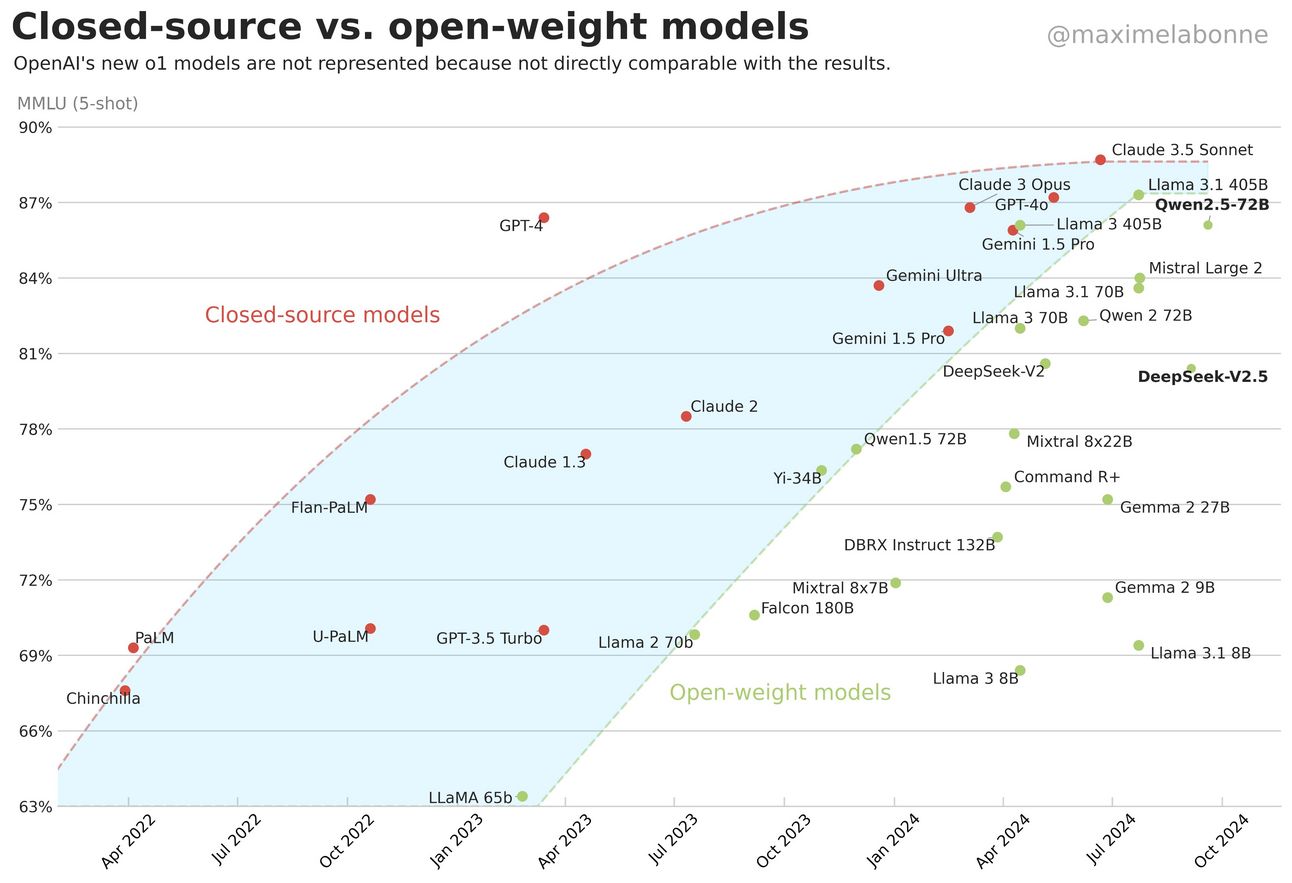

Accuracy: With the latest foundation models boasting 1M+ token context windows (think Gemini 1.5 Pro), in-context learning has boosted accuracy significantly, meaning AI can understand and respond to complex, nuanced conversations more effectively. Claude 3.5 Sonnet, GPT-4o, and now o1-preview continue to push the performance frontier, with o1 introducing inference-time scaling and allaying fears that LLM capabilities are stagnating.

If you're wondering why o1 is missing from the above graph, in Maxime's words: "because these models have built-in CoT reasoning and maybe other stuff that makes the comparison unfair."

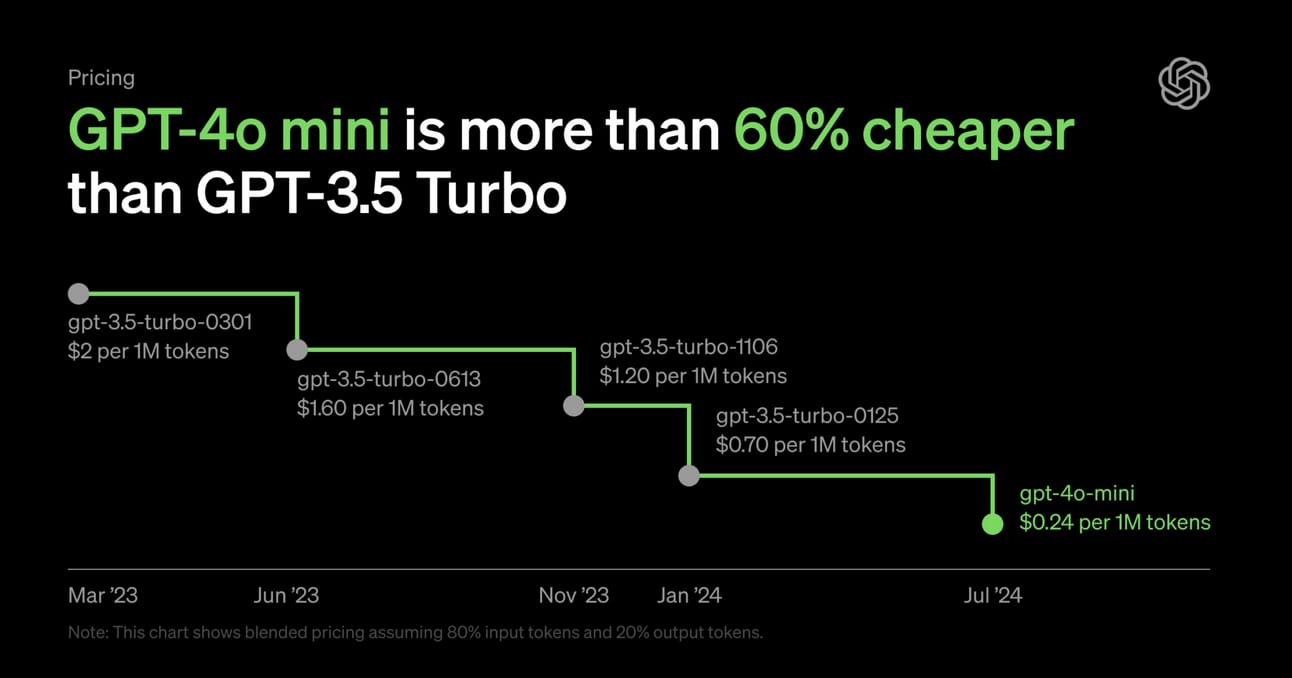

Cost: This increase in performance should have driven costs up (larger prompts mean more tokens, right?), and yet costs for foundation models have fallen off a cliff. For reference, GPT-4o now costs a blended rate of $4/M tokens (assuming 80% input and 20% output tokens), while GPT-4 cost $36/M tokens at its release in March 2023. That's a ~89% drop in price per million tokens. GPT-4o mini makes the drop laughable at about $0.24/M tokens blended, a 99% drop!
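A quick way to sanity-check these figures is to compute the blend yourself. The sketch below assumes list prices (USD per 1M input/output tokens) of $30/$60 for GPT-4 (8K context) at launch, $2.50/$10 for GPT-4o, and $0.15/$0.60 for GPT-4o mini; treat those prices as assumptions and check them against OpenAI's current pricing page. With an 80/20 input/output split, the blends come out to $36.00, $4.00, and about $0.24 per million tokens respectively.

```python
def blended_price(input_per_m: float, output_per_m: float, input_share: float = 0.8) -> float:
    """Blend input/output prices ($ per 1M tokens) by the share of tokens that are input."""
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    return input_share * input_per_m + (1.0 - input_share) * output_per_m


# Assumed list prices in USD per 1M tokens: (input, output). Verify before relying on them.
PRICES = {
    "gpt-4 (Mar 2023)": (30.00, 60.00),
    "gpt-4o": (2.50, 10.00),
    "gpt-4o-mini": (0.15, 0.60),
}

baseline = blended_price(*PRICES["gpt-4 (Mar 2023)"])
for model, (inp, out) in PRICES.items():
    price = blended_price(inp, out)
    drop = 1.0 - price / baseline  # fractional price drop relative to GPT-4 at launch
    print(f"{model:18s} ${price:6.2f}/M blended, {drop:6.1%} cheaper than GPT-4")
```

Changing `input_share` shows how sensitive the headline number is to your traffic mix: output tokens are priced higher, so output-heavy workloads blend to a higher rate.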

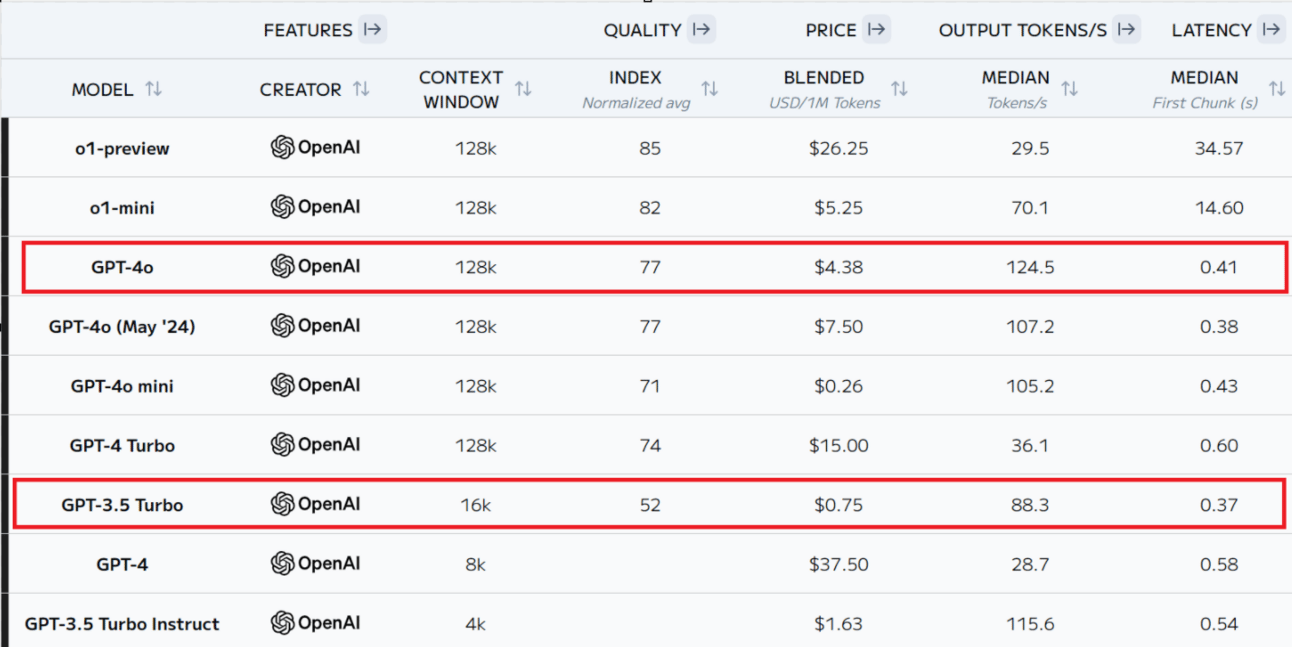

Latency: Surely the latency metrics must be horrendous then! After all, AI deployments are supposed to be a tricky balance of accuracy, cost, and latency! Accuracy up, costs down, so latency has to be up, right? I would in fact argue that latency has been the single biggest barrier to AI voice applications...until today!

In ChatGPT's earlier Voice Mode, average latency was 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4. GPT-4o responds to audio in an average of just 0.32 seconds. The average human response time? About 0.21 seconds!

Let that sink in: latency has dropped roughly 9x since the GPT-3.5 era, and models are now not much slower than the average human. Wow!
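The arithmetic behind these comparisons, using the average latencies quoted above:

```latex
\frac{2.8\,\text{s}}{0.32\,\text{s}} \approx 8.75 \approx 9\times
\qquad
\frac{5.4\,\text{s}}{0.32\,\text{s}} \approx 16.9\times
\qquad
0.32\,\text{s} - 0.21\,\text{s} = 0.11\,\text{s}
```

Measured against GPT-4's 5.4 seconds, the improvement is closer to 17x, and the remaining gap to an average human reply is about a tenth of a second.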

To bring it all together, here's a slide from OpenAI's DevDay that illustrates well how to think through the various techniques for building better, faster, and cheaper apps in production. DevDay also brought interesting announcements around prompt caching, model distillation, and evals, so make sure to check those out too.

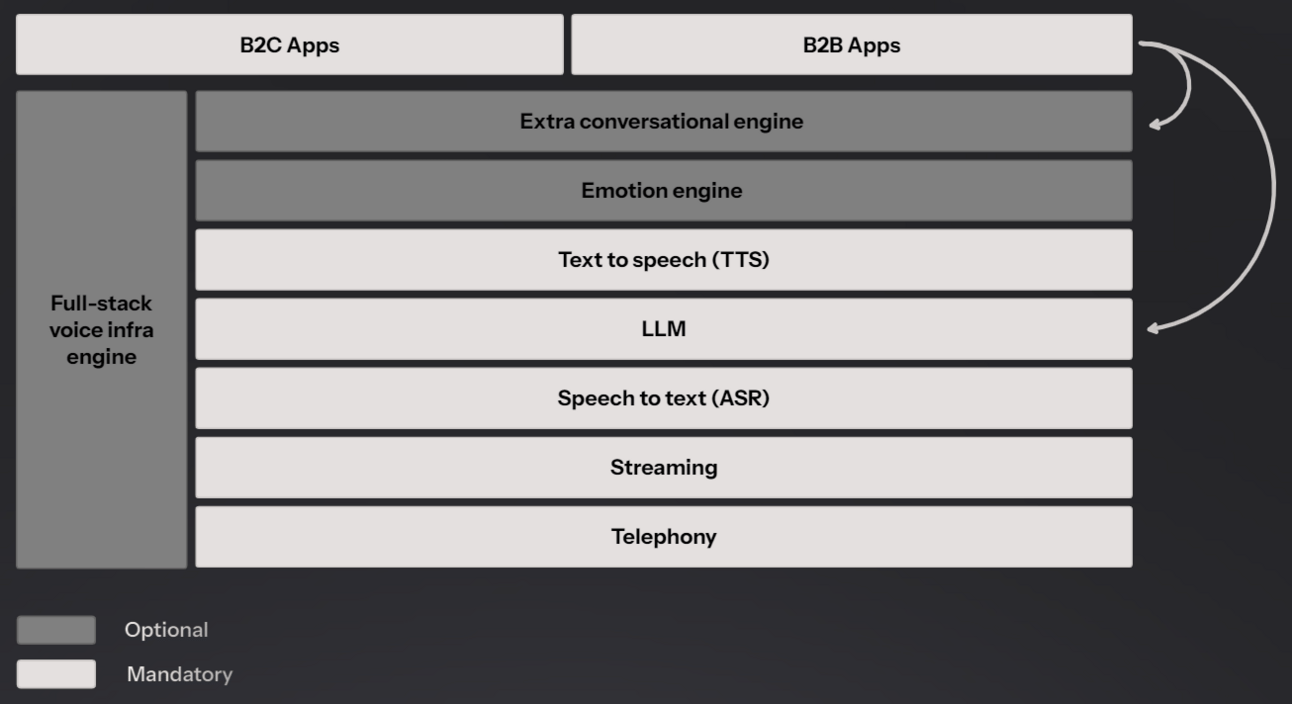

Voice AI Architecture

With that context on how LLM capabilities have progressed over the last two years, let's look at voice agents. Today's LLM-based voice agents run on the following full-stack infrastructure. You could add an emotion engine such as Hume, or an extra conversational engine on top of your stack for output, but the basic tenets are the same. Let's work by example: use a provider such as Twilio for the communication API, stream with LiveKit, transcribe speech to text (ASR) with OpenAI's Whisper, use a low-latency foundation model such as GPT-4o for text to text, then convert text to speech (TTS) with ElevenLabs, and voilà: you have your AI voice agent.
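The cascade described above can be sketched as three swappable stages plus conversation memory. This is a minimal offline sketch under stated assumptions: the `CascadedVoiceAgent` class and the `fake_asr`, `fake_llm`, and `fake_tts` stubs are hypothetical placeholders, not the Whisper, OpenAI, or ElevenLabs SDKs, and telephony (Twilio) and media streaming (LiveKit) are left out entirely.

```python
from dataclasses import dataclass
from typing import Callable, List

# Each stage is modeled as a plain function so vendors can be swapped freely.
SpeechToText = Callable[[bytes], str]       # ASR: caller audio -> transcript
TextToText = Callable[[List[dict]], str]    # LLM: chat history -> reply text
TextToSpeech = Callable[[str], bytes]       # TTS: reply text -> agent audio


@dataclass
class CascadedVoiceAgent:
    asr: SpeechToText
    llm: TextToText
    tts: TextToSpeech
    system_prompt: str = "You are a helpful voice assistant."

    def __post_init__(self) -> None:
        # Conversation memory carried across turns.
        self.history: List[dict] = [{"role": "system", "content": self.system_prompt}]

    def handle_turn(self, caller_audio: bytes) -> bytes:
        """One turn: audio in -> transcript -> reply text -> audio out."""
        transcript = self.asr(caller_audio)
        self.history.append({"role": "user", "content": transcript})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return self.tts(reply)


# Hypothetical stubs standing in for real ASR / LLM / TTS calls.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already text


def fake_llm(messages: List[dict]) -> str:
    return f"You said: {messages[-1]['content']}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the text has been synthesized


agent = CascadedVoiceAgent(asr=fake_asr, llm=fake_llm, tts=fake_tts)
print(agent.handle_turn(b"What time do you open?"))
```

Keeping each stage behind a plain function signature is what makes the cascaded design easy to mix and match across vendors; the trade-off is that every turn pays for several sequential hops.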

Here is an example of such a voice agent in action, created by yours truly:

Now, there are nuances to handle for an optimal user experience, such as function calling, context window size, and cost, but the one thing you'll notice is the latency that creeps in given the number of steps involved. That latency is bad for some use cases, but it may actually be desirable in others.
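To see how that latency accumulates, you can time each hop of a turn. The sketch below is illustrative only: `run_timed` and `simulated` are hypothetical helpers, and the sleep durations are arbitrary placeholders rather than measurements of any vendor.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

# A stage is a (name, function) pair; each function takes the previous stage's output.
Stage = Tuple[str, Callable[[Any], Any]]


def run_timed(stages: List[Stage], payload: Any) -> Tuple[Any, Dict[str, float]]:
    """Run stages back to back and record wall-clock seconds for each one.

    Because each stage waits for the previous one, the caller experiences the
    sum of all stage latencies (stored under the "total" key).
    """
    timings: Dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings[name] = time.perf_counter() - start
    timings["total"] = sum(timings.values())
    return payload, timings


def simulated(delay_s: float, transform: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Hypothetical stand-in for a network call: sleep, then transform the input."""
    def stage(x: Any) -> Any:
        time.sleep(delay_s)
        return transform(x)
    return stage


# Placeholder delays, not benchmarks.
pipeline: List[Stage] = [
    ("asr", simulated(0.01, lambda audio: "hello")),
    ("llm", simulated(0.02, lambda text: text.upper())),
    ("tts", simulated(0.03, lambda text: text.encode())),
]
output, timings = run_timed(pipeline, b"...")
for stage_name, seconds in timings.items():
    print(f"{stage_name:>5}: {seconds * 1000:.1f} ms")
```

Swapping the simulated stages for real calls gives a per-hop latency budget, which makes it obvious which stage to optimize first.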

For example, if you're creating an AI podcast, you don't really need to worry about latency. What you really need to consider is the model driving the speech, its in-context learning, and its ability to deliver a nuanced conversation. In fact, for this use case you may even increase latency by adding preprocessing and reruns, because the quality of the output matters far more than how quickly it's delivered. In my opinion, Google's NotebookLM does exactly that. It's by far the best AI UX from Google's stable: powered by Gemini 1.5 Pro, capable of multimodal RAG (Retrieval-Augmented Generation), and backed by a TTS model that can only be described as best-in-class!

OpenAI's Game-Changing Announcement

But all of this changed yesterday, with OpenAI entering the AI voice arena all guns blazing! The new Realtime API streams both text and audio in, you guessed it, real time, which means speech-to-speech applications no longer need to stitch together the various steps discussed above. One API connection and you have ChatGPT's Advanced Voice Mode in your app!
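Under the hood, the Realtime API is a persistent WebSocket session in which client and server exchange JSON events. The sketch below only builds and parses those events, leaving the WebSocket client up to you. The endpoint, the `OpenAI-Beta` header, the model name, and the event names (`session.update`, `input_audio_buffer.append`, `response.create`, `response.audio.delta`) are assumptions based on the launch-time beta docs and may have changed since, so verify them against the current API reference.

```python
import base64
import json
from typing import Optional

# Assumed launch-time (beta) connection details; verify against current docs.
REALTIME_URL = "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2024-10-01"
REALTIME_HEADERS = {
    "Authorization": "Bearer YOUR_OPENAI_API_KEY",  # placeholder, not a real key
    "OpenAI-Beta": "realtime=v1",
}


def session_update(instructions: str, voice: str = "alloy") -> str:
    """Configure the session: system instructions, output voice, modalities."""
    return json.dumps({
        "type": "session.update",
        "session": {
            "instructions": instructions,
            "voice": voice,
            "modalities": ["text", "audio"],
        },
    })


def append_audio(pcm16_chunk: bytes) -> str:
    """Stream a chunk of caller audio (raw PCM16 bytes) into the input buffer."""
    return json.dumps({
        "type": "input_audio_buffer.append",
        "audio": base64.b64encode(pcm16_chunk).decode("ascii"),
    })


def request_response() -> str:
    """Ask the model to respond to the conversation so far."""
    return json.dumps({"type": "response.create"})


def audio_from_server_event(raw: str) -> Optional[bytes]:
    """Return decoded audio bytes if the server event is an audio delta, else None."""
    event = json.loads(raw)
    if event.get("type") == "response.audio.delta":
        return base64.b64decode(event["delta"])
    return None
```

The point of the design is that ASR, reasoning, and TTS collapse into one session: audio goes in as base64 chunks and audio comes back as deltas you can play as they arrive.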

Exciting, so what's the catch? Ummm, the cost... maybe?

While pricing is quoted per minute, it works out to roughly $9 per hour, so to pay off it needs to replace a job. The scary part is that, given how good it is, I think it will! But before I move on, did you notice something in the text snippet I shared above? It carries a nugget that seems to have been missed in all the speech-to-speech excitement. Let me highlight it for you:
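Here is a back-of-the-envelope sketch of where a figure like ~$9 per hour can come from. It assumes the launch pricing OpenAI quoted for the Realtime API, roughly $0.06 per minute of audio input and $0.24 per minute of audio output, and further assumes, as an illustrative simplification, that the hour is split evenly between the caller talking and the agent talking. It ignores text tokens and any other overhead, so treat it as a rough estimate.

```python
def realtime_cost_per_hour(input_per_min: float = 0.06,
                           output_per_min: float = 0.24,
                           talk_share: float = 0.5) -> float:
    """Estimate USD per hour of conversation.

    talk_share is the assumed fraction of the hour the agent is speaking
    (billed as audio output); the rest is the caller speaking (audio input).
    """
    if not 0.0 <= talk_share <= 1.0:
        raise ValueError("talk_share must be between 0 and 1")
    minutes = 60.0
    return minutes * (talk_share * output_per_min + (1.0 - talk_share) * input_per_min)


print(f"${realtime_cost_per_hour():.2f}/hour")  # prints $9.00/hour
```

The split matters: an agent that does all the talking would cost about $14.40 per hour under these assumptions, while one that mostly listens would approach $3.60.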

Yes, the standard Chat Completions API will support audio too! It's not like OpenAI to bury something this cool.

Now that we've uncovered that, let's look at some other details of the Realtime API, starting with tool calling. Nothing explains it better than a demo, so here is one for tool calling with voice:
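The mechanics behind a demo like this are worth sketching. In the Realtime API beta, tools are declared in `session.update`; when the model decides to call one, the server emits a `response.function_call_arguments.done` event carrying `call_id`, `name`, and JSON-encoded `arguments`, and the client replies with a `function_call_output` conversation item followed by `response.create` so the model can speak the result. Those event names and shapes are assumptions based on the launch-time docs, and the `get_order_status` tool and its return value are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical tool for illustration; swap in your own business logic.
def get_order_status(order_id: str) -> Dict[str, Any]:
    return {"order_id": order_id, "status": "shipped"}


TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {"get_order_status": get_order_status}

# Assumed tool declaration shape for the "tools" list in session.update.
TOOL_SCHEMAS = [{
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]


def handle_function_call(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Run the requested tool and build the client events that return its result."""
    fn = TOOLS[event["name"]]
    args = json.loads(event["arguments"])  # arguments arrive as a JSON string
    result = fn(**args)
    return [
        {"type": "conversation.item.create",
         "item": {"type": "function_call_output",
                  "call_id": event["call_id"],
                  "output": json.dumps(result)}},
        {"type": "response.create"},  # prompt the model to speak the result
    ]


example_event = {
    "type": "response.function_call_arguments.done",
    "call_id": "call_123",
    "name": "get_order_status",
    "arguments": '{"order_id": "A42"}',
}
for outgoing in handle_function_call(example_event):
    print(json.dumps(outgoing))
```

Keeping a name-to-function registry separate from the schemas lets the same tools be reused across a voice session and a text chat.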

And here is one to showcase how ubiquitous AI voice agents are going to be:

The Future of Voice AI

Does this mean the end of all the startups in the real-time voice, conversational AI, and call-center bot arena? I don't think so. In fact, I think we're going to see many more companies enter this space. Competition will be fierce, and pricing will be a race to the bottom. I also believe most enterprises will build their voice agents on OpenAI's Realtime API, so who you serve, and in what capacity, will matter most!

If you're shaking your head and saying "open source and private data," the following stat has made me question how many companies are willing to sacrifice quality and speed of execution to maintain privacy:

"Industry adoption of open source models is <5% and has gone DOWN in the last 6 months"

Add to that the fact that OpenAI just raised $6.6bn in funding at a $157bn valuation!

A good thought-provoking place to end this blog, don't you think?

The potential applications for voice AI are vast, from customer service and education to healthcare and entertainment. As we stand on the brink of this voice-based future, it's an exciting time for innovators and entrepreneurs to explore new possibilities.

Go build that voice agent - whether it's a virtual assistant, an AI-powered language tutor, or something entirely new. The frontier is wide open, and the tools are more accessible than ever.

Until next time, happy reading (and speaking)!