Some call it AI’s “Sputnik Moment”, others the “Jevons Paradox”. Whatever it is, it isn’t insignificant. Open source was slowly being written off, with the closed-source frontier models apparently pulling away. Enter DeepSeek!

In a classic example of the Streisand effect, everyone freaking out sent the DeepSeek app rocketing to the top of the App Store, taking over from *drumroll* OpenAI. So more GPUs are “NOT” all you need?

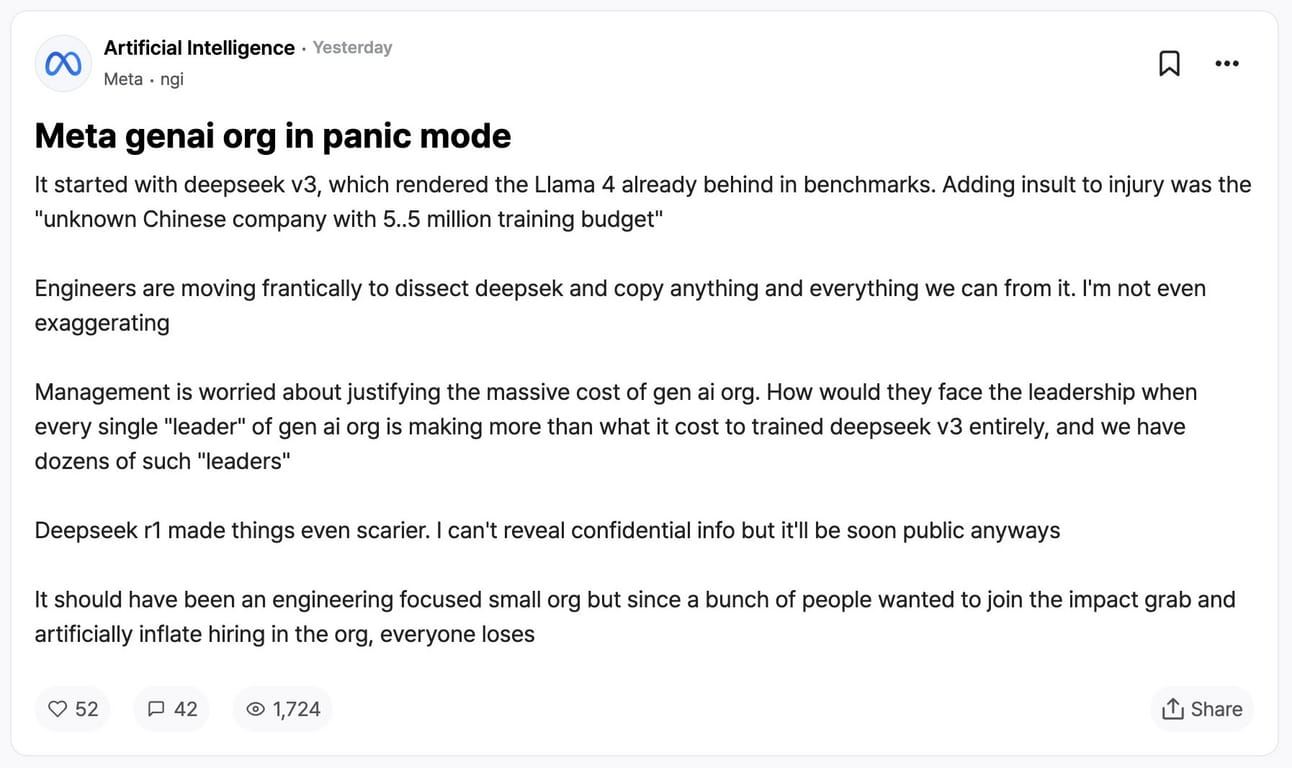

This led to panic stations across the big AI labs (Meta is being picked on here, but it wasn’t the only one).

So, what is DeepSeek and why so much fuss about it?

DeepSeek is supposedly a side project of High-Flyer, one of the prominent Chinese quant hedge funds, and started as Fire-Flyer, a deep learning branch of the fund. It can only be described as a research project with no expectations of financial return, and this is how it came to be:

- Founder Liang Wenfeng launches High-Flyer at age 30 and makes his $$.

- Starts buying GPUs in 2021 and pitches a cluster of 10,000 GPUs to train models: no one takes him seriously.

- Builds an exceptional infra team at High-Flyer and launches DeepSeek in 2023 with the goal of developing human-level AI.

- Hires a bunch of cracked engineers from top universities in China, pays them the highest salaries in China, and keeps a singular focus on R&D.

- Is forced to use the inferior H800s given the export ban on NVIDIA’s H100 chips, until the H800s were banned too.

- Necessity drives innovation: uses a mere 2,048 H800s to train the 671bn-parameter model, claiming a cost of about 3% of what OpenAI or Meta spend to train their models.

- Launches DeepSeek V3 and R1, open sources everything, and publishes technical papers, flexing on details that make Meta’s Llama capex look wasteful.

Not everyone believes this number, though. Scale AI’s CEO Alexandr Wang claimed they have 50,000 H100s, a number that probably came from a tweet; neither claim is verified.
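The headline training-cost figure is simple arithmetic on GPU-hours, which is also why it is so easy to argue about. A minimal sketch, assuming the figures as we read them in the DeepSeek-V3 technical report: about 2.788M H800 GPU-hours for the full run, priced at the report’s own assumed rental rate of $2 per GPU-hour. The function names are illustrative. Note what the arithmetic leaves out: prior research, ablation runs, and the cost of actually owning the cluster.

```python
def training_cost(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental-equivalent cost of a training run."""
    return gpu_hours * usd_per_gpu_hour

def wall_clock_days(gpu_hours: float, n_gpus: int) -> float:
    """Days needed if every GPU runs in parallel the whole time."""
    return gpu_hours / n_gpus / 24

# Figures as reported for DeepSeek-V3 (pre-training + context extension + post-training).
# The $2/GPU-hour rental price is an assumption made in the report, not a measured cost.
GPU_HOURS = 2.788e6
N_GPUS = 2048

cost = training_cost(GPU_HOURS, 2.0)
days = wall_clock_days(GPU_HOURS, N_GPUS)
print(f"~${cost / 1e6:.2f}M over ~{days:.0f} days on {N_GPUS} H800s")
```

This prints roughly $5.58M over about 57 days: the “final run” number, and nothing more.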

The Big Launch:

DeepSeek V3 was launched on Christmas Day, capping an already busy December given OpenAI’s Shipmas and Google’s resurgence via a flurry of updates (Gemini 2.0 Flash Thinking, Imagen 3, Veo 2, etc.). DeepSeek R1 was mentioned as the “reasoning” model whose capabilities were distilled into V3, but it was unreleased then. That changed last week, when they released not only R1-Zero (trained with RL directly on the base model, with no supervised fine-tuning) and R1 (comparable to o1, incorporating cold-start data before RL), but also “six dense models distilled from DeepSeek-R1 based on Llama and Qwen.” The catch? All of these were fully open sourced and accompanied by a technical paper, in the hope of assuaging fears that they used GPT-4 to train their model.

And they did so for ***checks notes*** $5.5M? No, wait a minute, that was for the final run… or was it? That number is still in doubt, but it is still orders of magnitude lower than what US-based AI labs spend. To put it in perspective: Meta is reportedly using a cluster of 100,000 H100s to train the yet-to-be-released Llama 4, while DeepSeek used 2,048 H800s. Inference is not comparable either:

- o1 pricing: $15 input / $60 output per million tokens

- DeepSeek R1 pricing: $0.55 input / $2.19 output per million tokens
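To make those list prices concrete, here is a minimal sketch that prices a single request on each model. The workload (2,000 prompt tokens, 8,000 output tokens) is hypothetical, and we assume both providers bill the “thinking” tokens as output tokens, which is what makes reasoning models output-heavy.

```python
# List prices in USD per million tokens, as quoted above.
PRICES = {
    "o1": {"input": 15.00, "output": 60.00},
    "DeepSeek-R1": {"input": 0.55, "output": 2.19},
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request; assumes reasoning tokens are billed as output."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

# Hypothetical workload: 2,000 prompt tokens, 8,000 output (thinking + answer) tokens.
for model in PRICES:
    print(model, f"${request_cost(model, 2_000, 8_000):.4f}")

ratio = request_cost("o1", 2_000, 8_000) / request_cost("DeepSeek-R1", 2_000, 8_000)
print(f"o1 costs ~{ratio:.0f}x more for this workload")
```

For this mix the gap is roughly 27x; since output dominates, the ratio sits close to the 60 / 2.19 output-price ratio.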

Believe what you may, the truth is that both training compute and inference just got a lot cheaper!

How does R1 work? Is it the same as ChatGPT?

It is like OpenAI’s o1 but not like its GPT-4o.

Let me explain. It “thinks” (sometimes for way longer than you or I would) before answering any query.

Reasoning:

This is based on Chain of Thought (CoT), which we have discussed in previous posts (Fast vs Slow Thinking). It is very similar to how OpenAI’s o1 responds, although o1’s reasoning traces (the thinking part) are hidden from the end user: “the moat”. DeepSeek’s reasoning is there for all to see: “There is no moat”.
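Because R1’s reasoning is exposed, separating it from the final answer is a small parsing job. A minimal sketch, assuming the raw model output wraps its reasoning in `<think>...</think>` tags followed by the answer (the format R1’s open weights emit, as we understand it; a hosted API may return the reasoning in a separate field instead). `split_reasoning` is a hypothetical helper, not part of any DeepSeek library.

```python
import re

# Assumed output format: <think>reasoning</think> followed by the final answer.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is '' if no think block is found."""
    m = THINK_RE.search(raw)
    if m is None:
        return "", raw.strip()
    reasoning = m.group(1).strip()
    answer = raw[m.end():].strip()
    return reasoning, answer

raw = "<think>\n9.11 vs 9.9: compare tenths, 1 < 9.\n</think>\n9.9 is larger."
reasoning, answer = split_reasoning(raw)
print(reasoning)  # 9.11 vs 9.9: compare tenths, 1 < 9.
print(answer)     # 9.9 is larger.
```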

This is what the thinking looks like:

CoT is great for complex tasks that benefit from step-by-step reasoning, since it breaks the larger task down into smaller chunks. One can argue that this reinforces (pun intended) Reinforcement Learning (RL) as the training vehicle of 2025, as it enables the “thinking” and “learning” aspects so crucial to reasoning models, R1 being one of them. Here is how R1 was trained:
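The R1 paper trains with GRPO (Group Relative Policy Optimization) and rule-based rewards: an accuracy reward for a correct final answer and a format reward for keeping the reasoning between think tags. GRPO drops the learned critic. It samples a group of completions for the same prompt and scores each one against the group’s mean and standard deviation. A minimal sketch of just the reward and advantage step, assuming exact-match answer checking and equal weighting of the two rewards (our simplifications); the clipped policy-gradient update and KL penalty are omitted.

```python
import re
import statistics

def rule_based_reward(completion: str, reference: str) -> float:
    # Format reward: the reasoning must sit inside a leading <think>...</think> block.
    m = re.fullmatch(r"\s*<think>(.*?)</think>(.*)", completion, re.DOTALL)
    format_reward = 1.0 if m else 0.0
    # Accuracy reward: exact match on the text after the think block (a simplification).
    answer = m.group(2).strip() if m else completion.strip()
    accuracy_reward = 1.0 if answer == reference else 0.0
    return accuracy_reward + format_reward

def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    # GRPO: normalise each reward by its group's mean and std, no value network needed.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# One prompt, a group of four sampled completions.
group = [
    "<think>2 + 2 = 4</think> 4",
    "<think>2 + 2 = 5?</think> 5",
    "4",
    "<think>add</think> 4",
]
rewards = [rule_based_reward(c, "4") for c in group]
print(rewards)  # [2.0, 1.0, 1.0, 2.0]
print(group_relative_advantages(rewards))
```

Completions that beat their group’s average get a positive advantage and are reinforced; the rest are pushed down.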

Staying on “reasoning”, we should take a moment to reflect on why there is so much focus on reasoning-based models in 2025, from OpenAI to DeepSeek. The clear goal for these AI labs is Artificial “General” Intelligence (AGI), and reasoning is perfect for tasks such as coding and mathematics, which is why these models shatter benchmarks there. What is surprising is that R1 outperforms peers (such as GPT-4o, o1 and Sonnet 3.5) even on benchmarks that claim “Broad Subject Coverage”, such as the newly launched Humanity’s Last Exam (HLE), scoring best on both accuracy and calibration error.
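Calibration error measures whether a model’s stated confidence matches how often it is actually right: a model that says “90% sure” should be correct about 90% of the time. A minimal sketch of a binned, root-mean-square calibration error, assuming equal-width confidence bins. HLE’s exact binning scheme may differ, so numbers from this sketch are illustrative and not comparable to the published scores.

```python
import math

def rms_calibration_error(confidences, correct, n_bins=10):
    """Binned RMS calibration error; lower is better (0 = perfectly calibrated)."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence in [0, 1] -> bin index
        bins[idx].append((c, ok))
    total = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(ok for _, ok in b) / len(b)
        total += (len(b) / n) * (avg_conf - accuracy) ** 2  # weight by bin size
    return math.sqrt(total)

# An overconfident model: claims 90% but is right half the time (error of about 0.4).
print(rms_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, True, False]))
```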

This is exciting, as it suggests reasoning could be the path to general intelligence, and R1 has just put everyone on that path at full throttle (as if things weren’t moving fast enough).

As swyx’s revisited Pareto frontier captures well, DeepSeek has pushed the efficiency frontier once again (with Google the only one keeping pace, thanks to its outsized free tier and pricing). To answer your question: yes, price wars are here, and the consumer wins!
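A price/performance Pareto frontier keeps only the models that no other model beats on price and quality at the same time. A minimal sketch of how such a frontier is computed: sort by price and keep each model that scores higher than everything cheaper. The model names and numbers below are hypothetical placeholders, not swyx’s data.

```python
from typing import NamedTuple

class Model(NamedTuple):
    name: str
    price: float  # USD per million tokens (lower is better)
    score: float  # benchmark score (higher is better)

def pareto_frontier(models: list[Model]) -> list[Model]:
    """Models not dominated by any cheaper (or equal-price) model with a higher score."""
    frontier = []
    best_score = float("-inf")
    # Cheapest first; on equal price, the higher score comes first.
    for m in sorted(models, key=lambda m: (m.price, -m.score)):
        if m.score > best_score:  # strictly better than everything cheaper
            frontier.append(m)
            best_score = m.score
    return frontier

# Hypothetical numbers purely for illustration, not real prices or scores.
models = [
    Model("A", 60.0, 80.0),
    Model("B", 10.0, 70.0),
    Model("C", 2.0, 78.0),
    Model("D", 0.5, 60.0),
]
print([m.name for m in pareto_frontier(models)])  # ['D', 'C', 'A']
```

Model B drops off because C is both cheaper and better: “pushing the frontier” means landing a point like C.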

The Technical Details

How did DeepSeek do this? The technical details are well explained in their paper, which is a worthwhile read. Breadcrumbs below:

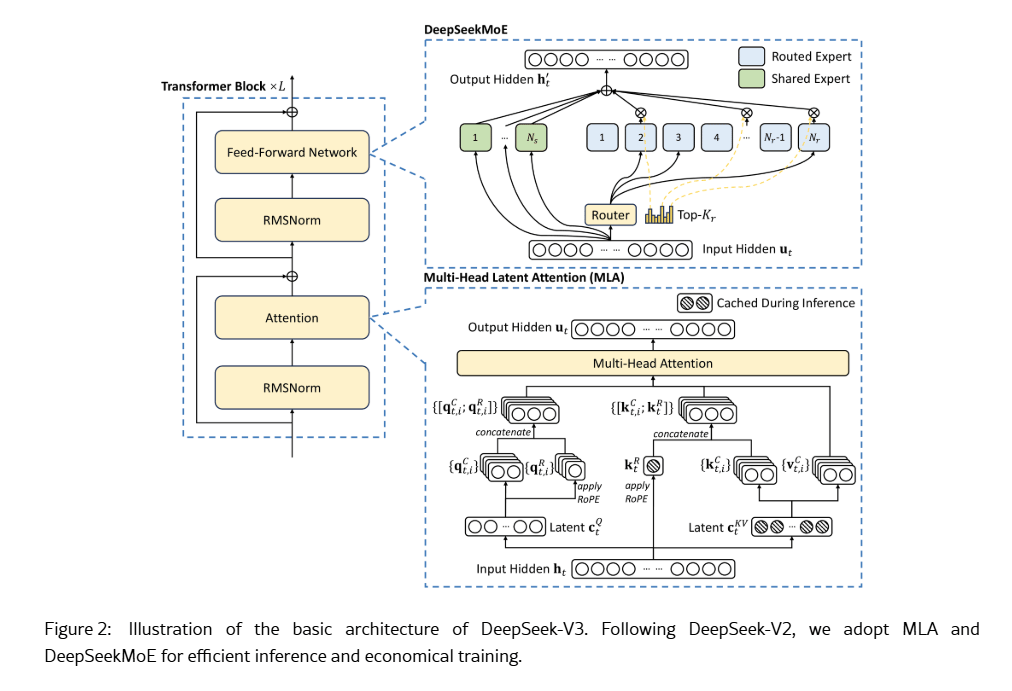

Multi-Head Latent Attention (MLA) and Mixture of Experts (MoE): MLA minimizes memory usage while maintaining performance by compressing the key-value (KV) cache into a small latent vector. If that wasn’t a big enough breakthrough, they took the MoE architecture and scaled it efficiently by differentiating between specialized (routed) and general (shared) experts: fine-tuning → pruning → healing!
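Both ideas are easy to see in miniature. A minimal sketch, assuming the DeepSeek-V3 configuration as we read it from the technical report (61 layers, 128 heads of dimension 128, a 512-dimensional compressed KV latent plus a 64-dimensional decoupled RoPE key). The first part counts cached values per token for standard multi-head attention versus MLA. The second is a toy MoE layer in which shared experts always run and routed experts are picked by top-k sigmoid affinity, with gates normalised over the selected experts. The expert functions and centroid vectors are illustrative stand-ins, not DeepSeek’s code.

```python
import numpy as np

def kv_cache_per_token_mha(n_layers: int, n_heads: int, head_dim: int) -> int:
    # Standard multi-head attention caches a full key and value per head, per layer.
    return n_layers * 2 * n_heads * head_dim

def kv_cache_per_token_mla(n_layers: int, latent_dim: int, rope_dim: int) -> int:
    # MLA caches one compressed KV latent plus a small shared RoPE key per layer;
    # keys and values are re-projected from the latent at attention time.
    return n_layers * (latent_dim + rope_dim)

mha = kv_cache_per_token_mha(n_layers=61, n_heads=128, head_dim=128)
mla = kv_cache_per_token_mla(n_layers=61, latent_dim=512, rope_dim=64)
print(mha, mla, f"{mha / mla:.1f}x smaller")  # 1998848 35136 56.9x smaller

def moe_layer(x, shared_experts, routed_experts, centroids, k):
    """Toy DeepSeekMoE-style layer for one token vector x of shape (d,)."""
    out = sum(f(x) for f in shared_experts)            # shared experts: always active
    affinity = 1.0 / (1.0 + np.exp(-(centroids @ x)))  # sigmoid token-to-expert affinity
    top = np.argsort(affinity)[-k:]                    # indices of the k best routed experts
    gates = affinity[top] / affinity[top].sum()        # normalise over selected experts only
    for g, i in zip(gates, top):
        out = out + g * routed_experts[i](x)
    return x + out                                     # residual connection

rng = np.random.default_rng(0)
d, n_routed = 8, 16
routed = [lambda v, s=s: s * v for s in rng.normal(size=n_routed)]
shared = [lambda v: 0.5 * v]
y = moe_layer(rng.normal(size=d), shared, routed, rng.normal(size=(n_routed, d)), k=4)
print(y.shape)  # (8,)
```

Only k of the routed experts run per token, which is how a 671bn-parameter model keeps per-token compute small.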

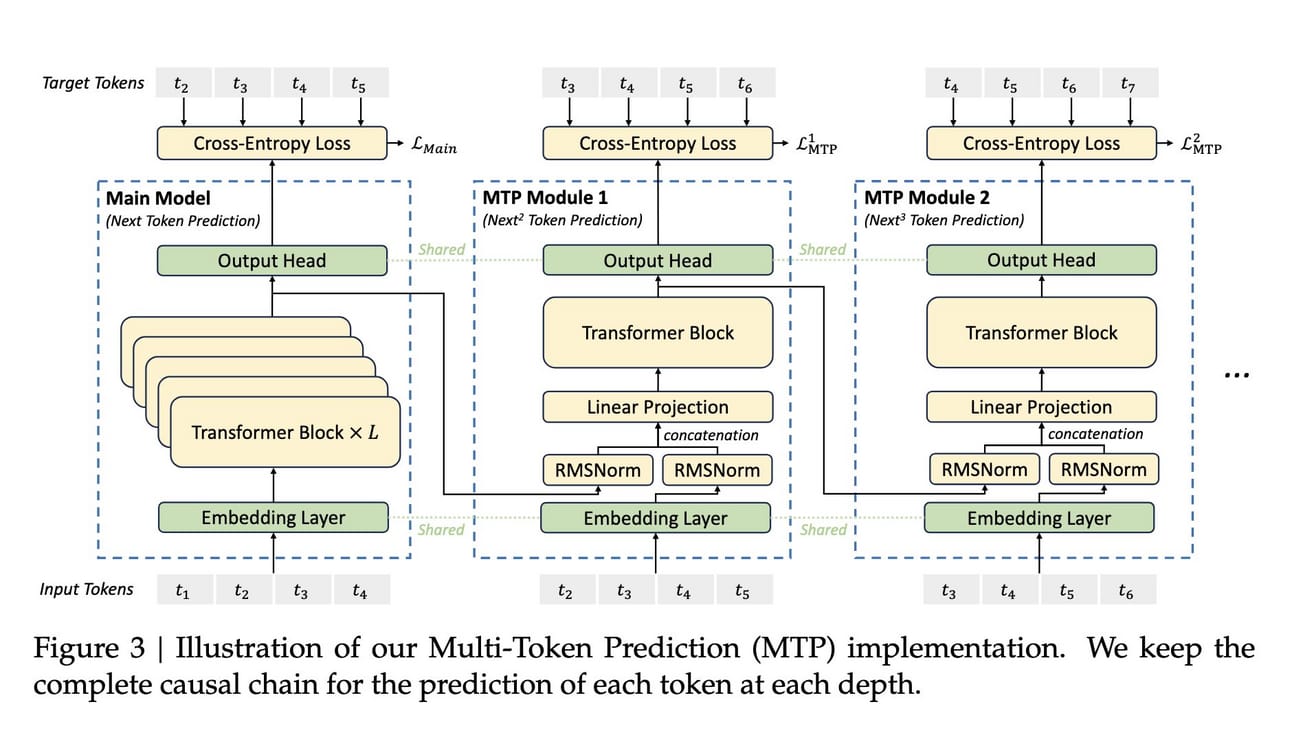

Multi-token prediction (MTP): not new, as we have already seen it in Meta’s earlier paper, but interesting to see it work so well. It helps most at large model sizes, with gains especially pronounced on tasks such as coding, because the model learns to look ahead and pre-plan future tokens.
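DeepSeek-V3 implements MTP with sequential modules that each predict one more token ahead, while Meta’s paper used parallel output heads. The sketch below shows only the idea they share, the training objective: the usual next-token loss plus a weighted loss on tokens further ahead. The head layout, function names and the `lam` weight of 0.3 (a value we recall from the V3 report) are assumptions for illustration.

```python
import numpy as np

def cross_entropy(logits, targets):
    # logits: (T, V), targets: (T,) -> mean negative log-likelihood
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()

def mtp_loss(tokens, head_logits, lam=0.3):
    # tokens: (T,) ids; head_logits[k]: (T, V). Head 0 predicts token i+1,
    # head k predicts token i+1+k from the same position i.
    main = cross_entropy(head_logits[0][: len(tokens) - 1], tokens[1:])
    extra = []
    for k in range(1, len(head_logits)):
        n = len(tokens) - 1 - k  # positions that still have a target k+1 steps ahead
        extra.append(cross_entropy(head_logits[k][:n], tokens[1 + k:]))
    return (main + lam * np.mean(extra)) if extra else main

rng = np.random.default_rng(0)
T, V = 8, 50
tokens = rng.integers(0, V, size=T)
heads = [rng.normal(size=(T, V)) for _ in range(2)]  # main head + one MTP depth
print(mtp_loss(tokens, heads))
```

At inference the extra prediction can simply be dropped, or reused for speculative decoding.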

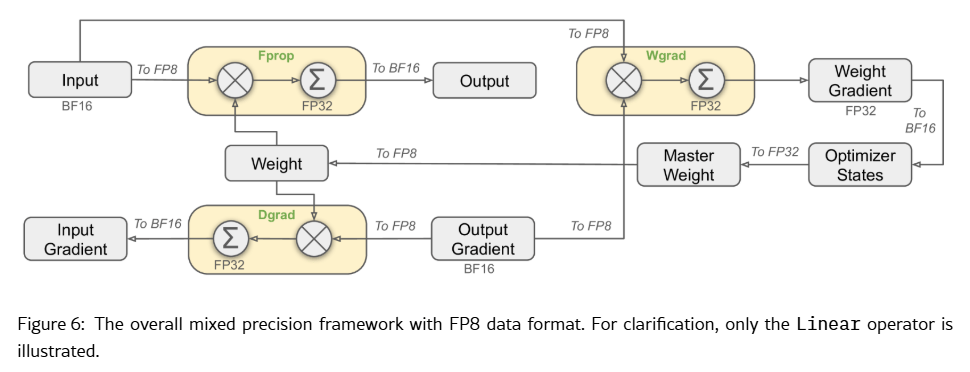

FP8 mixed-precision training: driven by limited access to top-end NVIDIA GPUs, DeepSeek gives us a more efficient way to train, reminiscent of Noam Shazeer’s (Character.AI) post last year on driving memory efficiency and stateful caching.
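The trick that makes FP8 workable is fine-grained scaling: instead of one scale for a whole tensor, each small block gets its own, so a single outlier cannot wipe out the precision of everything else (the V3 report describes 1×128 tiles for activations and 128×128 blocks for weights). A minimal NumPy simulation of the 1×128 tile case, assuming the E4M3 format (max 448, 3 mantissa bits) and ignoring subnormals; it mimics the rounding in float64 rather than using real FP8 hardware types.

```python
import numpy as np

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format

def round_to_e4m3(x):
    # Simulate 3 explicit mantissa bits (ignores subnormals and NaN handling).
    m, e = np.frexp(x)  # x = m * 2**e with 0.5 <= |m| < 1
    return np.ldexp(np.round(m * 16) / 16, e)

def quantize_blockwise(x, block=128):
    """One scale per contiguous block of `block` values along the last axis."""
    rows, cols = x.shape
    assert cols % block == 0, "columns must be a multiple of the block size"
    tiles = x.reshape(rows, cols // block, block)
    scale = np.abs(tiles).max(axis=-1, keepdims=True) / E4M3_MAX
    scale = np.where(scale == 0, 1.0, scale)  # avoid dividing all-zero tiles by 0
    q = round_to_e4m3(np.clip(tiles / scale, -E4M3_MAX, E4M3_MAX))
    return q, scale

def dequantize_blockwise(q, scale):
    rows, n_blocks, block = q.shape
    return (q * scale).reshape(rows, n_blocks * block)

rng = np.random.default_rng(1)
x = rng.normal(size=(4, 256)) * 10
q, s = quantize_blockwise(x)
x_hat = dequantize_blockwise(q, s)
print("max relative error:", np.max(np.abs(x_hat - x) / np.abs(x)))
```

With 3 mantissa bits the relative rounding error per value stays within 1/16; the per-block scale is what keeps small values out of the underflow zone.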

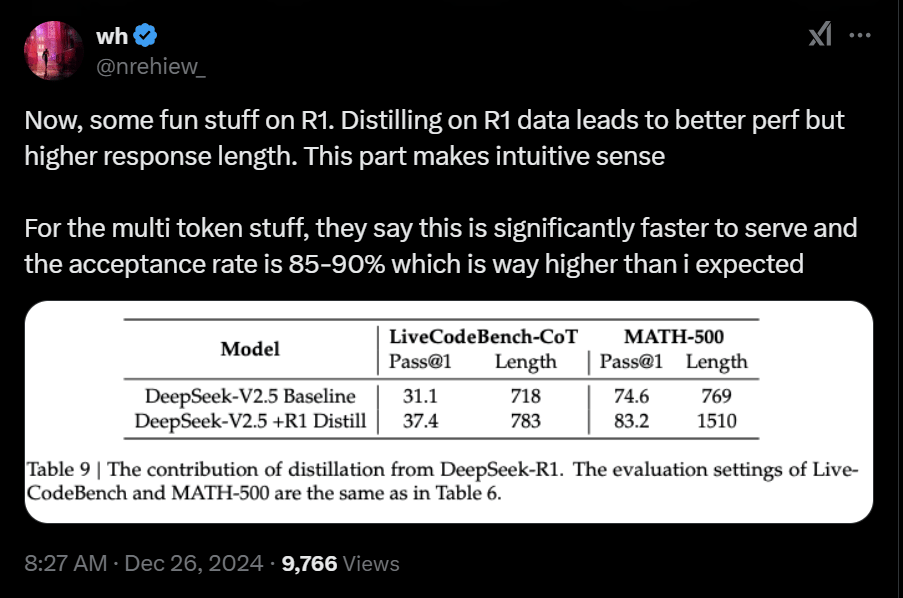

Post-training: distilling from R1 and using reward models proved remarkably efficient, which further explains why o1 and the upcoming o3 will keep hiding their reasoning traces (visible traces are exactly what a competitor could distill from). I’ll leave you with this from their paper: “DeepSeek-R1-Distill-Qwen-1.5B outperforms GPT-4o and Claude-3.5-Sonnet on math benchmarks with 28.9% on AIME and 83.9% on MATH”
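In the R1 paper, distillation is plain supervised fine-tuning: the smaller Qwen and Llama models are trained on roughly 800k samples curated with R1, with no RL stage on the students. The curation can be sketched as rejection sampling: draw several completions per prompt from the teacher and keep only those whose final answer checks out. A minimal sketch; `fake_teacher` is a hypothetical stand-in for sampling from R1, and exact-match checking is our simplification.

```python
import random
import re

def final_answer(completion: str) -> str:
    # Assumed format: the answer is whatever follows the closing </think> tag.
    return completion.split("</think>")[-1].strip()

def build_sft_dataset(prompts, references, teacher, samples_per_prompt=4):
    """Rejection sampling: keep only teacher completions whose final answer is correct."""
    dataset = []
    for prompt, ref in zip(prompts, references):
        for _ in range(samples_per_prompt):
            completion = teacher(prompt)
            if final_answer(completion) == ref:
                dataset.append({"prompt": prompt, "completion": completion})
    return dataset

_rng = random.Random(0)

def fake_teacher(prompt: str) -> str:
    # Hypothetical stand-in for sampling from R1: adds two numbers, wrong ~25% of the time.
    a, b = map(int, re.findall(r"\d+", prompt))
    guess = a + b if _rng.random() < 0.75 else a + b + 1
    return f"<think>{a} + {b} = {guess}</think> {guess}"

data = build_sft_dataset(["3 + 4", "10 + 5"], ["7", "15"], fake_teacher)
print(len(data), "of 8 samples kept")
# The student model would then be fine-tuned on `data` with ordinary next-token cross-entropy.
```

The student learns from the full visible traces, not just the answers, which is exactly the data a hidden-trace model never hands out.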

Mind… blown!

Yes, read that paper! What it implies has split the academic community in half. We have had reactions from everyone at this point, from the White House to the stock market. It is, after all, soft power: LLMs and chips.

And everyone wants this power. Hence the race that ensues, both in terms of funding (following the $500bn Stargate launch with Oracle, OpenAI and SoftBank)…

…and in terms of hardware. Chips are the new gold. Speculation that DeepSeek is using Huawei’s Ascend 910C for inference already abounds, but it is not confirmed!

What does it mean for Stonks? (Of course, this is not financial advice.)

Here is Ben Thompson with his latest:

The winners: AMZN (distributor via AWS), Apple (consumer via hardware; gives them a SOTA model), Meta (consumer via software; drives down costs).

The loser, Google: TPUs become less valuable.

NVDA: its core moats, CUDA and combining multiple chips into one large GPU, are challenged, although DeepSeek would use H100s if it could. On the flip side, more efficiency could lead to faster, greater adoption: more for everyone, not zero-sum!
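The “not zero-sum” argument is the Jevons paradox from the top of this post: if demand is elastic enough, making each unit cheaper increases total spending. A minimal sketch under a textbook constant-elasticity demand curve, Q = scale · P^(−elasticity), where the curve itself and the elasticity values are assumptions; nobody knows the real elasticity of demand for AI compute.

```python
def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Spend under constant-elasticity demand Q = scale * price**(-elasticity)."""
    quantity = scale * price ** (-elasticity)
    return price * quantity

# Hypothetical: the price of a unit of "intelligence" falls 10x.
for elasticity in (0.5, 1.0, 1.5):
    before = total_spend(1.0, elasticity)
    after = total_spend(0.1, elasticity)
    print(f"elasticity {elasticity}: spend changes {after / before:.2f}x")
```

Below an elasticity of 1, cheaper inference shrinks the market; above it, a 10x price cut grows total spend (about 3.16x at 1.5), which is the bull case for NVDA.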

LLM providers: Anthropic is the big loser, with question marks over whether the API business is the best route. Perplexity serving R1 and immediately scoring on UX shows how API-first companies may need to start thinking about consumer products quickly, like OpenAI, which is fast becoming a consumer tech company.

What can we see next?

We are going to see a rapid acceleration in AI products. The race to the bottom on the price of intelligence means the action shifts to the application layer. More apps, faster automation, rapid adoption, imminent disruption.

At the foundation-model level, the warning shots have been fired. This is now a multipronged race to AGI, and the world is watching. Get ready for an action-packed 2025!

Until next time… Happy reading!