“2024 is the year we see AI in production” is a widely held view in the AI community today. It is just as widely accepted that prompt engineering remains crucial to getting an LLM to plan and execute. There is a fantastic blog post by Hamel Husain arguing that, instead of adding complexity with frameworks, it may make more sense to peek at the final prompts they generate, because the prompt is all that matters!

Prompt engineering has evolved through quite a few novel approaches to get the LLM to comply, from begging it to return JSON to threatening it with “or my grandma will die”. Our focus today, though, is on the approaches that have proven effective in getting LLMs to reason rather than just answer, leading to significantly better results.

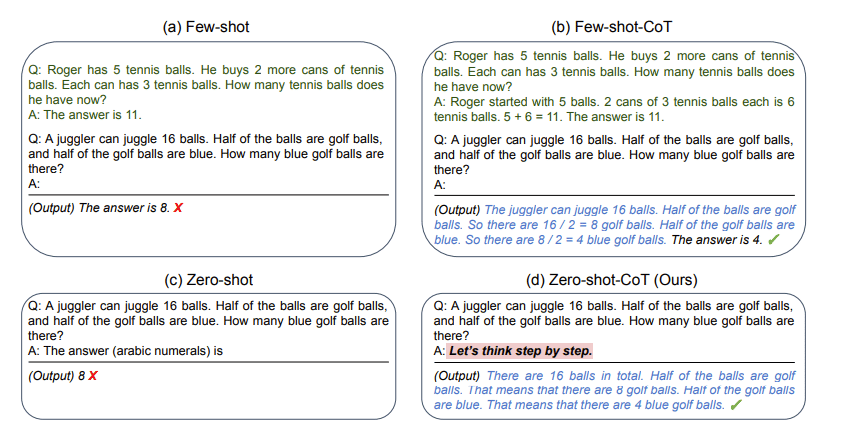

Chain of Thought (CoT): Introduced in the paper “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” by Wei et al., CoT has been shown to greatly improve response quality, as it makes the LLM pause and break down complex arithmetic, commonsense or symbolic reasoning tasks into multiple intermediate steps.

Zero-shot Chain of Thought: Simply adding “Let’s think step by step” to the prompt lifts accuracy from 17.7% to 78.7% on MultiArith and from 10.4% to 40.7% on GSM8K compared with a plain zero-shot prompt, as reported by Kojima et al. That’s how effective prompt engineering can be, if done right!
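To make the zero-shot variant concrete, here is a minimal sketch of a two-stage prompt: first elicit the reasoning with the trigger phrase, then ask for the final answer. The `llm` callable, the `fake_llm` stand-in and the exact answer-extraction wording are assumptions made for illustration, not any particular library's API.

```python
from typing import Callable

COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, llm: Callable[[str], str]) -> str:
    """Two-stage zero-shot CoT: elicit reasoning, then extract the answer."""
    # Stage 1: append the trigger phrase so the model writes out its reasoning.
    reasoning_prompt = f"Q: {question}\nA: {COT_TRIGGER}"
    reasoning = llm(reasoning_prompt)
    # Stage 2: feed the reasoning back and ask for the final answer only.
    answer_prompt = f"{reasoning_prompt} {reasoning}\nTherefore, the answer is"
    return llm(answer_prompt).strip()


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    if prompt.endswith("Therefore, the answer is"):
        return " 11"
    return "5 balls plus 2 cans of 3 balls each is 5 + 6 = 11."


if __name__ == "__main__":
    question = (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?"
    )
    print(zero_shot_cot(question, fake_llm))  # prints "11"
```

Swapping `fake_llm` for a real model client is the only change needed to try this against an actual LLM.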

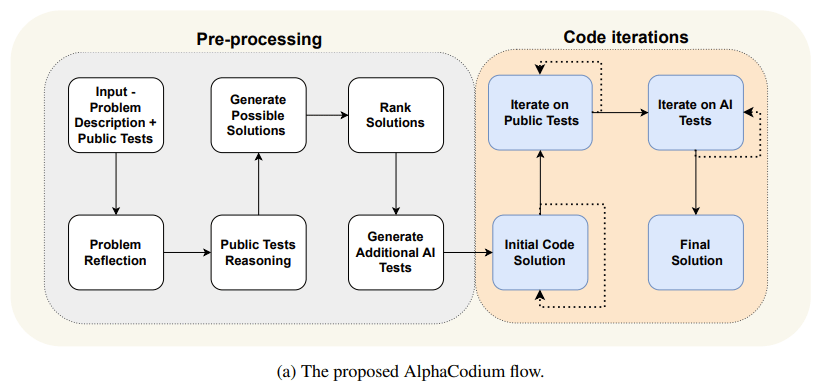

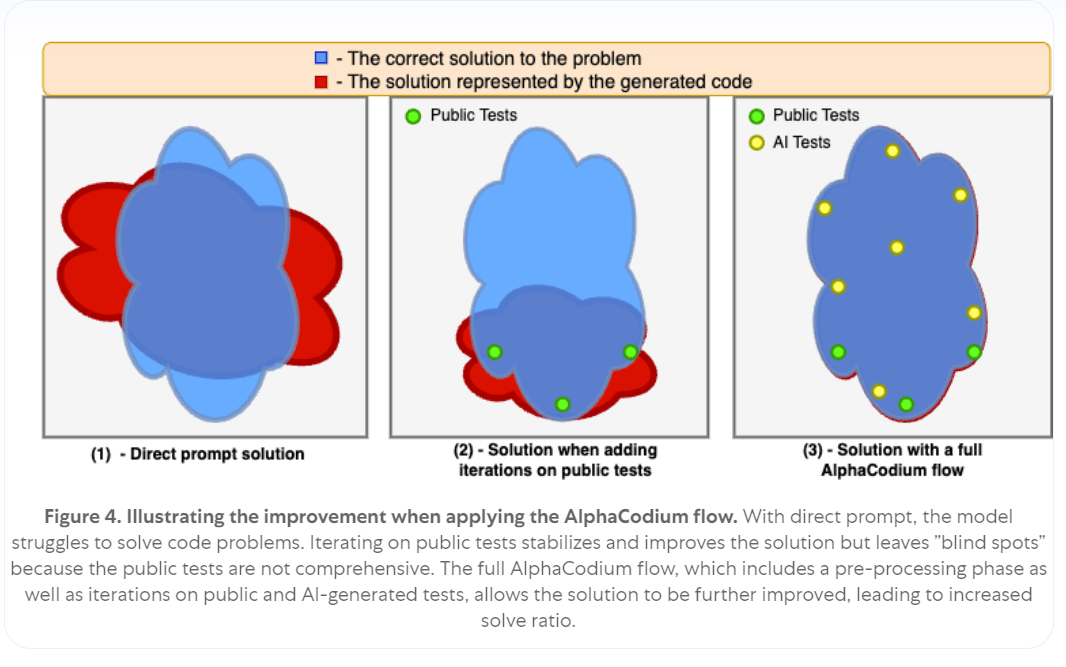

With that as the backdrop, the AlphaCodium paper by Tal Ridnik, Dedy Kredo and Itamar Friedman came out earlier this year, introducing the concept of a “flow” versus a “direct prompt” to improve performance, i.e. the shift from prompt engineering to “flow engineering”.

What does this mean exactly? Here’s from the authors themselves: “The proposed flow, is divided into two main phases: a pre-processing phase where we reason about the problem in natural language, and an iterative code generation phase where we generate, run, and fix a code solution against public and AI-generated tests.”

The process comprises the following steps:

1. Problem reflection: goals, inputs, outputs, constraints etc.
2. Public tests reasoning: understanding why an input leads to a certain output.
3. Generate and rank possible solutions: two or three possible solutions ranked by correctness, simplicity and robustness.
4. Generate additional AI tests that are not part of the original public tests, to create a more diverse set.
5. Choose a solution, execute it, run it on some tests, and iterate until the solution passes all tests.
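The generate, run and fix loop in the last steps can be sketched roughly as follows. This is a simplified illustration and not the AlphaCodium implementation: candidate programs are modelled as plain Python functions, and `propose` (assumed to return candidates already ranked best-first) and `repair` are hypothetical stand-ins for LLM calls.

```python
from typing import Callable, List, Optional, Tuple

Test = Tuple[int, int]           # (input, expected output)
Solution = Callable[[int], int]  # a candidate program, modelled as a function


def failing_tests(solution: Solution, tests: List[Test]) -> List[Test]:
    """Return the tests that the candidate gets wrong."""
    return [(x, want) for x, want in tests if solution(x) != want]


def iterative_flow(
    propose: Callable[[], List[Solution]],
    repair: Callable[[Solution, List[Test]], Solution],
    public_tests: List[Test],
    ai_tests: List[Test],
    max_rounds: int = 3,
) -> Optional[Solution]:
    """Try ranked candidates in order, repairing each until all tests pass."""
    tests = public_tests + ai_tests
    for candidate in propose():
        for round_no in range(max_rounds + 1):
            failures = failing_tests(candidate, tests)
            if not failures:
                return candidate  # passes public and AI-generated tests
            if round_no < max_rounds:
                candidate = repair(candidate, failures)
    return None  # no candidate could be fixed within the budget


# Toy task ("double the input") with canned stand-ins for the LLM calls.
def propose() -> List[Solution]:
    return [lambda x: x + 2, lambda x: x * x]  # both are subtly wrong


def repair(solution: Solution, failures: List[Test]) -> Solution:
    return lambda x: 2 * x  # pretend the model fixed the bug


if __name__ == "__main__":
    solved = iterative_flow(propose, repair, [(2, 4)], [(3, 6), (0, 0)])
    print(solved(5) if solved else "no solution found")  # prints 10
```

Note how the first candidate passes the single public test but fails the extra tests, which is the reason the flow generates additional tests in the first place.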

As shown above, constructing the answer through this iterative process can lead to significantly better results, even if the multi-turn flow makes the outputs less deterministic. LangChain recently organized an excellent webinar with Itamar Friedman that goes through this in detail, linked below for your reference (it was also the reminder that triggered this post).

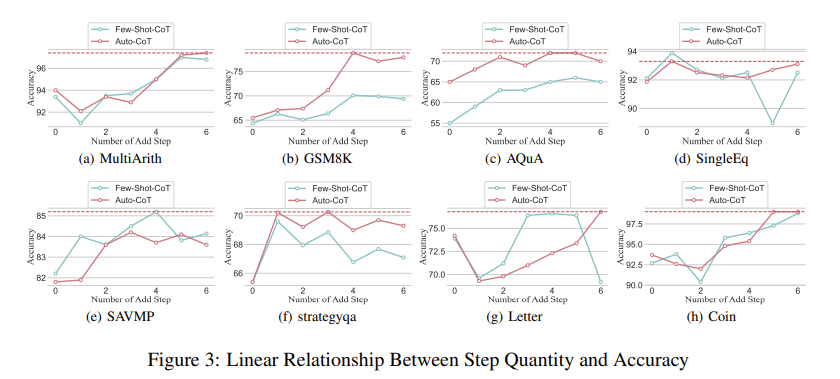

So, add more steps and it should get even better? That is exactly what the paper “The Impact of Reasoning Step Length on Large Language Models” by Jin et al. showed, further supporting the case for constructing the answer iteratively rather than with a direct prompt (System 2 vs System 1 thinking).

Results from these papers show great promise, but what about actual applications? We already have examples of how “flow engineering” based workflows can be powerful enough to reduce the time spent on prompt engineering and shift the focus to planning and design. The following is a good example of how to design a flow that improves prompt generation, which was in turn inspired by Alex Albert’s thread on X.

Or something like the following by Lance Martin at LangChain, to improve code-generation performance by 50% (self-reported).

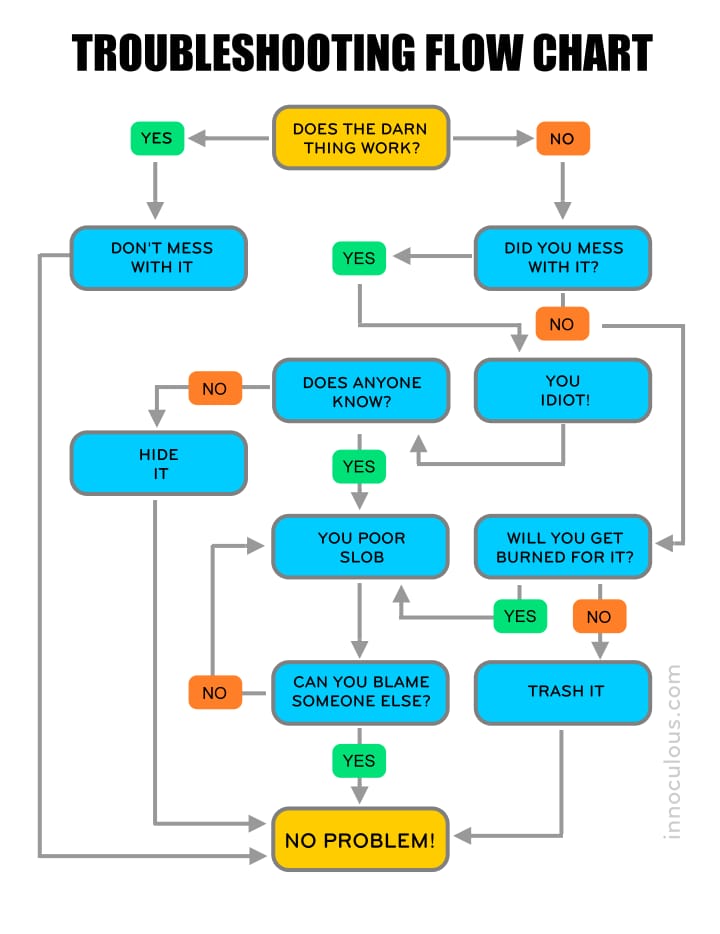

So, multi-step, multi-turn processes with multiple prompts can lead to better performance and hence, dare I say it, automation? That was the promise of AutoGPT: set it (the goal) and forget it (the process)! The problem is that there is very little control over the intermediate steps, and thereby the process, which leads to issues such as getting stuck in a loop. Below is a nice visualization of the architecture; notice the open-ended nature of the flow!

Hence began the race for controllable, iterative agents that automate workflows for a seamless user experience and repeatable, accurate results. There are a few frameworks to approach this, such as CrewAI (multi-agent automations), AutoGen (multi-agent conversation framework), SGLang (structured generation language), DSPy (framework for algorithmically optimizing LM prompts and weights) etc.

For brevity, we shall focus on just LangGraph (a library for building stateful, multi-actor applications with LLMs) in this post, to put flow engineering in context. Let’s take something we have already covered in our “Advanced RAG Series”: Self-RAG. Implementing it with LangGraph engineers a flow that looks something like this:

LangGraph incorporates the concept of LLM state machines, which enable flexibility through specific decision points (state transitions) and loops (for iteration). This self-reflection via decision points and iteration leads to much better results by filtering out and correcting poor-quality retrievals or generations. Combining Active RAG workflows with flow engineering can be extremely powerful for both control (process) and accuracy (result)!
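To illustrate the idea of decision points and loops, here is a minimal state machine in plain Python. It deliberately does not use LangGraph's API; the node names, the routing functions and the canned node behaviour are all hypothetical, chosen only to mimic the shape of a Self-RAG style flow.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]


def retrieve(state: State) -> State:
    # Canned retrieval: one relevant and one irrelevant document.
    state["docs"] = ["doc about flow engineering", "doc about cooking"]
    return state


def grade_documents(state: State) -> State:
    # Keep only documents judged relevant (a keyword check stands in for an LLM grader).
    state["docs"] = [d for d in state["docs"] if "flow" in d]
    return state


def generate(state: State) -> State:
    # Canned generator: the first draft is unsupported, the second is grounded.
    state["attempts"] = state.get("attempts", 0) + 1
    state["answer"] = "grounded answer" if state["attempts"] > 1 else "unsupported answer"
    return state


def rewrite_query(state: State) -> State:
    state["question"] += " (rephrased)"
    return state


# Decision points: each returns the name of the next node, or "END".
def after_grading(state: State) -> str:
    return "generate" if state["docs"] else "rewrite_query"


def after_generation(state: State) -> str:
    return "END" if state["answer"].startswith("grounded") else "generate"


NODES: Dict[str, Callable[[State], State]] = {
    "retrieve": retrieve,
    "grade_documents": grade_documents,
    "generate": generate,
    "rewrite_query": rewrite_query,
}
EDGES: Dict[str, Callable[[State], str]] = {
    "retrieve": lambda s: "grade_documents",
    "grade_documents": after_grading,
    "generate": after_generation,  # loops back until the answer is grounded
    "rewrite_query": lambda s: "retrieve",
}


def run(state: State, entry: str = "retrieve", max_steps: int = 20) -> State:
    """Walk the graph from `entry` until a decision point returns "END"."""
    current = entry
    for _ in range(max_steps):
        state = NODES[current](state)
        current = EDGES[current](state)
        if current == "END":
            return state
    raise RuntimeError("step budget exhausted before reaching END")


if __name__ == "__main__":
    final = run({"question": "What is flow engineering?"})
    print(final["answer"], final["attempts"])  # prints "grounded answer 2"
```

The explicit `max_steps` budget is one simple way to keep the loops controllable, in contrast to a fully open-ended agent.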

Flow engineering is still in its infancy, especially when it comes to applications in a production setting. Therein lies the opportunity to re-imagine how a user interacts with such a flow and what the best UI for it may be.

Agentic workflows in production require a laser-sharp focus on the process and not just the result. Additionally, where and when to bring the human into the loop is a question every engineer should think about as they design these flows.

Until next time…Happy reading!