I’d say who would have guessed, but we have Polymarket for that:

Of course, March isn’t over yet! After a period of smaller, incremental releases, the AI world just had one of those days where everyone's timeline exploded with big announcements. Both Google and OpenAI decided to drop major updates on the same day (coincidence? I think not!).

Google's Gemini 2.5 and OpenAI's native image generation in GPT-4o are both important releases in their own way, and each deserves a long-form write-up. Let’s dive in:

Google's Gemini 2.5: Finally, A "Thinking Model" That Delivers

"Think you know Gemini? 🤔 Think again." Google has been absolutely killing it of late, with their Gemini models consistently redefining the Pareto frontier. Gemini 2.5 seems to have pushed it even further.

The preliminary vibe tests are more than promising, and with that ever-expanding context window, it is time to rethink your pipeline…again!

Breaking Benchmark Records (for real this time!)

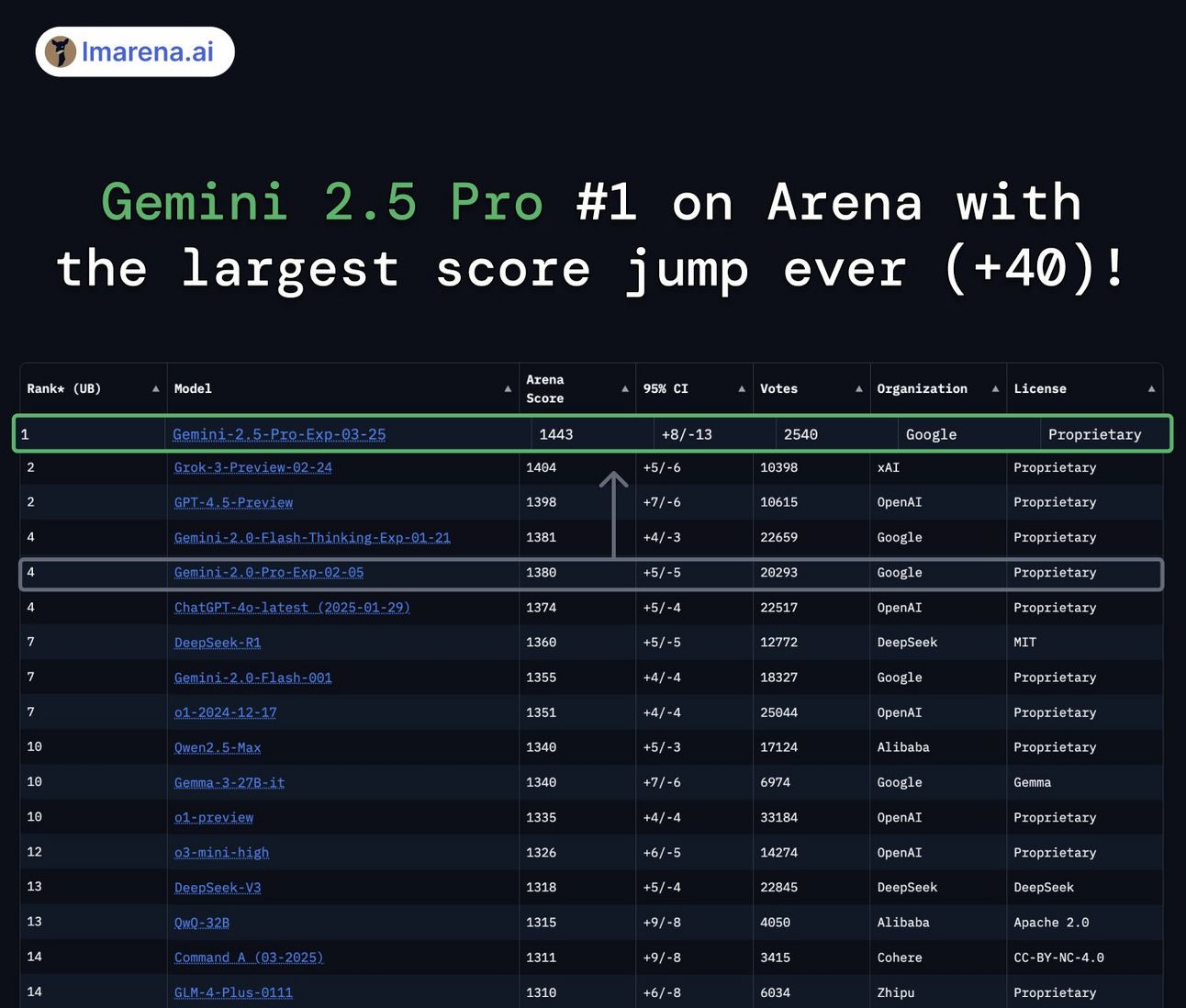

The receipts are in, and they're impressive. Gemini 2.5 Pro (tested under codename "nebula") achieved the largest score jump ever recorded on LMArena – a whopping 40-point increase over previous frontrunners Grok-3 and GPT-4.5.
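To put a 40-point gap in perspective, here is a minimal sketch that converts a rating gap into an expected head-to-head win rate. It assumes the leaderboard scores behave like standard Elo-style ratings (logistic curve, base 10, scale 400); that is my assumption for illustration, and `expected_win_rate` is a hypothetical helper, not anything published by LMArena.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected win probability for the higher-rated model.

    Assumes an Elo-style logistic curve (base 10, scale 400).
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / scale))


# A 40-point lead under the Elo-style assumption above.
win_rate = expected_win_rate(40)
print(f"{win_rate:.1%}")  # prints 55.7%
```

Under that assumption, a 40-point lead means winning roughly 56 out of 100 head-to-head votes: a clear edge, but not a blowout.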

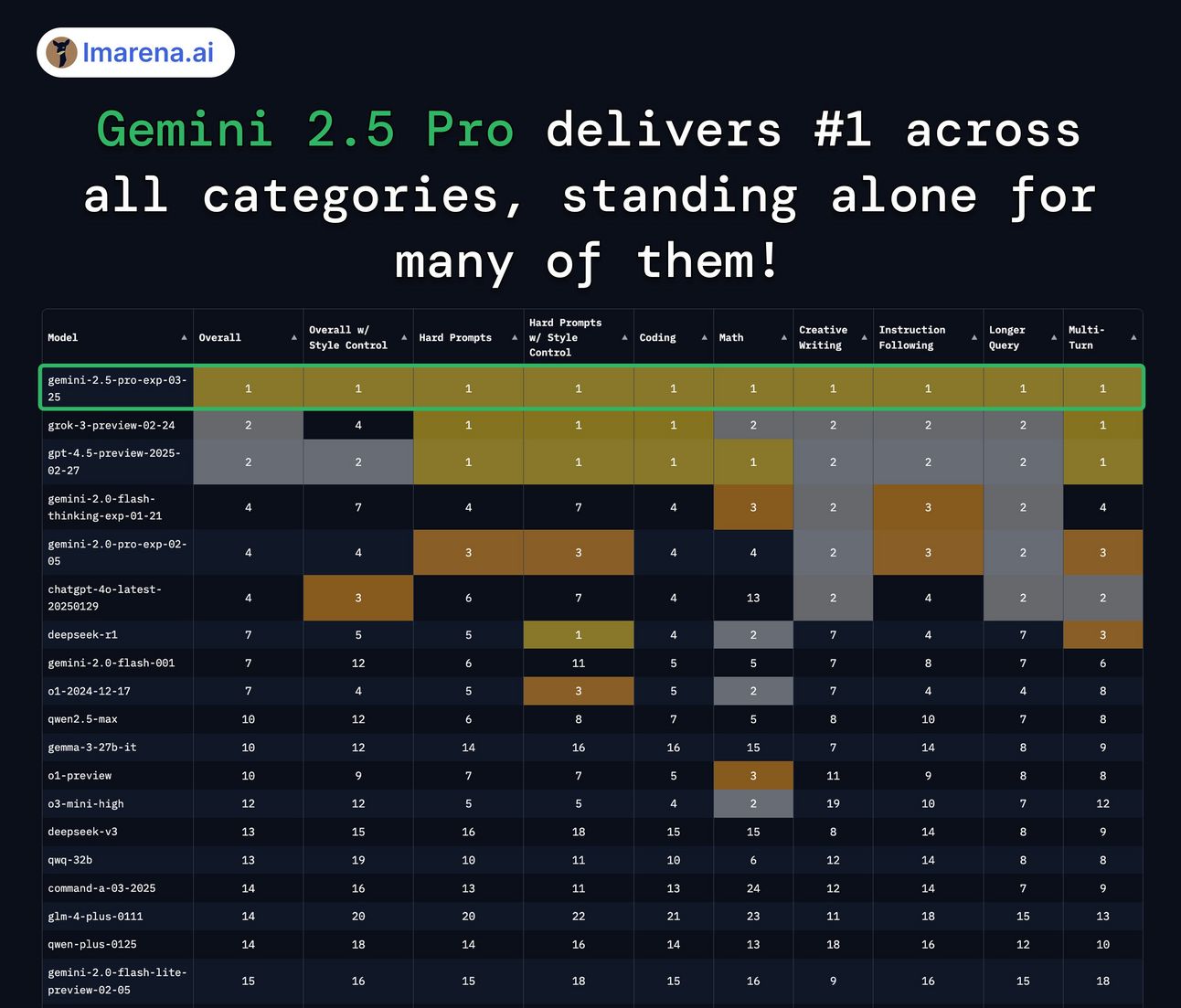

What’s interesting is that the model didn't just excel in one category - it ranked #1 across ALL evaluation categories:

Math? Check.

Creative Writing? Yep.

Instruction Following? You bet.

Longer Query handling? Absolutely.

Multi-Turn conversations? That too.

It tied with Grok-3/GPT-4.5 for Hard Prompts and Coding, but edged ahead elsewhere. Have I got your attention yet?

Beyond Just Another Benchmark

Sure, we've all seen models that ace a specific benchmark but fall apart in the real world. I try my best to stay off the hyperbole, but Gemini 2.5 seems to be showing broader capabilities:

#1 on the Vision Arena leaderboard

#2 on WebDev Arena (first Gemini model to match Claude 3.5 Sonnet)

First model to score over 50% on Poetry Bench (with GPT-4.5 at 42% and Grok 3 at 34%)

73% on the Aider polyglot leaderboard for multi-language programming

Something particularly noteworthy for developers – this is "the first Gemini model to effectively use efficient diff-like editing formats" for code. iykyk!
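For anyone who doesn't "know": a diff-like editing format means the model returns only the snippet to find and the snippet to put in its place, rather than rewriting the whole file. Below is a deliberately simplified sketch of how a tool might apply such an edit. The `apply_edit` helper is hypothetical and is not the parser any particular tool uses.

```python
def apply_edit(source: str, search: str, replace: str) -> str:
    """Apply one search/replace edit, refusing ambiguous matches."""
    count = source.count(search)
    if count != 1:
        # Zero matches means the model misquoted the file;
        # several matches means the edit is ambiguous.
        raise ValueError(f"expected exactly one match, found {count}")
    return source.replace(search, replace, 1)


original = "def greet():\n    print('hi')\n"
patched = apply_edit(original, "print('hi')", "print('hello')")
print(patched)
```

The catch is that the search text has to match the file exactly, which is why a model that can reliably quote existing code is a big deal for this kind of workflow.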

What Makes It Different? The "Thinking" Architecture

So what's the secret sauce here? Google is calling Gemini 2.5 a "thinking model." Unlike models that start answering right away, it's designed to reason through a problem before responding.

Here’s something to think about, thanks to Nathan: the model's reasoning traces include simulated Google search capabilities. This suggests it was built with advanced research applications in mind, potentially setting the stage for something like Deep Research functionality.

According to GDM, they achieved this by combining an improved base model with enhanced post-training techniques. In normal-person speak: they made it think more carefully before it speaks. Revolutionary concept, I know!

Real-World Testing (Because Benchmarks Aren't Everything)

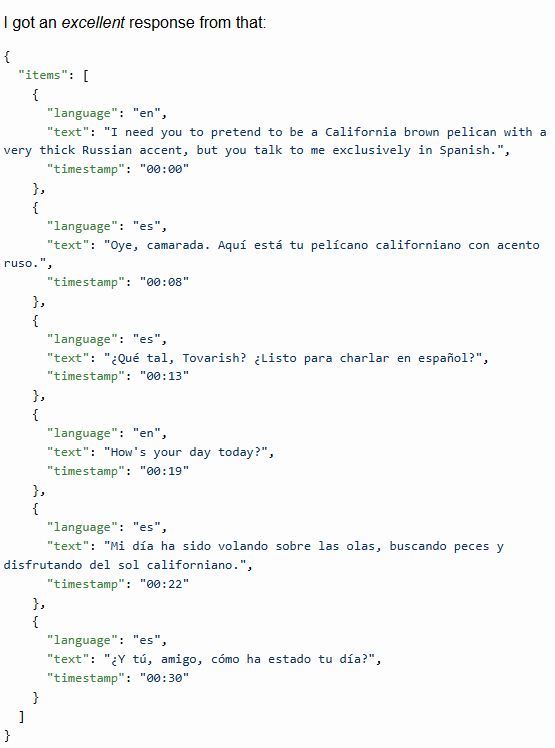

Simon Willison has already put Gemini 2.5 Pro through its paces with his LLM command-line tool, and some capabilities stand out:

It created a surprisingly good SVG of a pelican riding a bicycle (which is harder than it sounds…apparently!)

Its audio transcription accurately handled mixed English/Spanish with precise timestamps

It precisely detected multiple pelicans in an image while correctly excluding similar birds

It can now generate responses up to 64,000 tokens (vs previous 8,192)

Add in the million-token input window (with 2 million coming soon) and a knowledge cutoff updated to January 2025, and you've got some serious technical improvements.
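If you are wondering whether your documents would actually fit in a window that size, a back-of-the-envelope check looks something like the sketch below. The 4-characters-per-token ratio is a rough rule of thumb for English text and an assumption on my part; the helper names and the prompt reserve are hypothetical, and a real tokenizer will give different counts.

```python
INPUT_WINDOW_TOKENS = 1_000_000  # input window cited above
CHARS_PER_TOKEN = 4              # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate (ceiling of chars / 4)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_window(documents, reserved_for_prompt=2_000):
    """Return (fits, estimated_total_tokens) for a batch of documents."""
    total = reserved_for_prompt + sum(estimate_tokens(d) for d in documents)
    return total <= INPUT_WINDOW_TOKENS, total


# Two placeholder documents of 400k and 1.2M characters.
docs = ["x" * 400_000, "y" * 1_200_000]
ok, used = fits_in_window(docs)
print(ok, used)
```

For anything beyond a quick sanity check, count tokens with the provider's own tooling instead of a character heuristic.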

When Can You Try It?

You can get your hands on it now through Google AI Studio and the Gemini app (if you're a Gemini Advanced user). Enterprise users will need to wait for the Vertex AI implementation. It is “available as experimental and free for now”; pricing details are still TBD.

OpenAI's GPT-4o Image Generation: The Native Image Generation Update We've Been Waiting For

Pretty impressive stuff from OpenAI: the update to their image generation capability is a big step up from DALL-E (finally!).

Given the big announcement from Reve day before yesterday…

…and the already well received Google’s native image generation…

…this wasn’t exactly unexpected, but it is very significant nonetheless, given how good it is. Sama says: "Today we have one of the most fun, cool things we have ever launched. People have been waiting for this for a long time."

And he might be right.

This Isn't Your Dad's DALL-E

According to the GPT-4o System Card, this is "a new, significantly more capable image generation approach than our earlier DALL·E 3 series of models." Also confirmed is that this is an autoregressive model.

The key capabilities include:

Photorealistic outputs that don't look AI-generated

Taking images as inputs and transforming them

Following detailed instructions with text rendering that actually works

Using the model's knowledge to create nuanced, contextually appropriate images.

The livestream showed off examples like transforming photos into anime, creating educational manga explaining relativity (complete with humor), designing detailed trading cards, and generating memes. Still hard to believe how quickly we are progressing on the AI timeline!

Don’t believe me? Trust the AI overlords:

Or maybe the influencoors?

The Tech Behind the Magic

What makes 4o's image generation different is how deeply it's integrated into the model's architecture. It can follow complex instructions, respect detailed visual layouts, render text, and work in a variety of styles!

Technical observers like @gallabytes noticed it uses a "multi-scale generation setup" that "commits to low frequency at the beginning then decodes high frequency with patch AR." In normal-person speak: it gets the general layout right first, then fills in the details. That's why images start blurry before sharpening.
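The "layout first, details later" idea is easy to see on a toy signal. The sketch below is only an illustration of coarse-to-fine ordering on a list of numbers. It is not a generative model and makes no claim about how 4o is implemented; all function names are hypothetical.

```python
def downsample(signal, factor):
    """Average each block of `factor` samples (the low-frequency part)."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal), factor)]


def upsample(coarse, factor):
    """Repeat each coarse value `factor` times (a blurry preview)."""
    return [value for value in coarse for _ in range(factor)]


def coarse_to_fine(signal, factor=4):
    """Return the blurry first pass and the sharpened final pass."""
    blurry = upsample(downsample(signal, factor), factor)
    # High-frequency detail is whatever the blurry pass is missing.
    detail = [s - b for s, b in zip(signal, blurry)]
    sharp = [b + d for b, d in zip(blurry, detail)]
    return blurry, sharp


signal = [0, 2, 4, 6, 10, 10, 10, 10]
blurry, sharp = coarse_to_fine(signal)
print(blurry)  # block averages: the overall shape, no detail
print(sharp)   # detail added back on top of the blurry pass
```

The first pass only knows the block averages, which is the 1D equivalent of a blurry image; the second pass adds back the fine detail on top of it.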

As for clues about its inner workings, all we have are generated images (appropriately so?), complete “with pros and cons”. How very Glass Onion!

For those of you wondering beyond “to whom the credit is due”, here is the prompt for the above:

“““A wide image taken with a phone of a glass whiteboard, in a room overlooking the Bay Bridge. The field of view shows a woman writing, sporting a tshirt wiith a large OpenAI logo. The handwriting looks natural and a bit messy, and we see the photographer's reflection.

The text reads:

(left)

"Transfer between Modalities:

Suppose we directly model

p(text, pixels, sound) [equation]

with one big autoregressive transformer.

Pros:

* image generation augmented with vast world knowledge

* next-level text rendering

* native in-context learning

* unified post-training stack

Cons:

* varying bit-rate across modalities

* compute not adaptive"

(Right)

"Fixes:

* model compressed representations

* compose autoregressive prior with a powerful decoder"

On the bottom right of the board, she draws a diagram:

"tokens -> [transformer] -> [diffusion] -> pixels"“““

People Are Already Finding Real Uses For This

What's fascinating is how quickly practical applications are emerging:

Home Design Visualization: Need ideas for interior decoration, or want to picture a renovation project before you start?

Educational Visuals That Don't Suck: How about an illustrated "Periodic Table of the Elements" which doesn’t put you to sleep? Problem solved.

UI Design Without the Design Skills: Want to brainstorm UI mockups, or get inspired from one? No problemo…

Visualize anything: 4o seems to be amazing at creating infographics, and with clean text rendering they are far more usable than before.

As for comparison:

Why People Are Freaking Out About This

Taking a cue from Dan Shipper, let’s try and break down the various impressive capabilities enabled by the upgrade:

It can actually handle text: This has been the Achilles’ heel for most image generators. Frankly, it is quite magical to see coherent text in images when I have been used to random gibberish for so long!

Precision editing that works: Modifying images is a breeze…and accurate. No more regenerating the entire image 20 times hoping for the right result.

Style matching that doesn't fall apart: It can reference the images you provide and run with their style. This is huge for maintaining visual consistency.

When Can You Use It?

Image generation in ChatGPT is already rolling out to Plus and Enterprise users, with free tier access coming soon. API access will follow for developers.

What Does This All Mean?

These simultaneous releases represent significant steps in AI's practical evolution. The capabilities we're seeing - whether reasoning-focused or visually creative - point to a common trajectory: AI systems becoming more coherent in how they process and generate different types of information.

For developers building with these systems, this means rethinking integration points and pipelines. The expanded context windows (up to 1-2 million tokens) fundamentally change what's possible in terms of document processing, knowledge management, and conversational depth. Similarly, the ability to generate and edit images within the conversation flow eliminates the need for context-switching between specialized tools.

What a time to be alive, right? Until next time…keep building!