A whirlwind couple of weeks has given us a glimpse of how spoilt for choice we could be with foundation models in the future. This means we need to start bucketing these “model families” by what they can and cannot do well, whether they are open or closed source, and how we decide which one to use. GPT-4 had been the default SOTA benchmark until along came Claude Opus! Add to that the mega launch of Llama3 yesterday - yes, open source is alive and competing at the front of the field!

Going in chronological order, let’s start with the first model to challenge (and overthrow) GPT4-Turbo at the time of its release:

Anthropic’s Claude Opus:

Released in mid-March, this was the first model to clearly beat GPT4 on eval benchmarks (imperfect as they may be). Closed source and widely regarded as better and more nuanced with written text and code, it was the first time one could see a possible future in which OpenAI does not win it all. Opus was not the only surprise: Claude Haiku from the same family beat Mistral Large and GPT-4-0613 while being much cheaper, and it is better than GPT 3.5 - exciting times! As with everything, it has its limitations: the model is not openly licensed and does not share weights or training data. Opus is also more expensive and reportedly slower than GPT4.

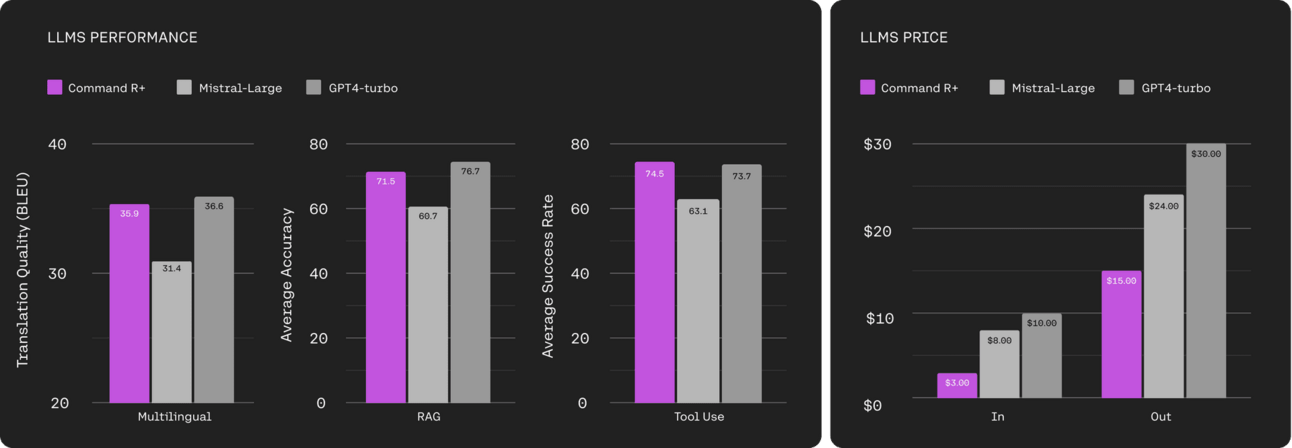

Cohere’s Command R+ (Apr 04):

Open weights, but under a non-commercial license. Cheaper, faster and NOT MoE. Designed for enterprise use, it is multilingual with advanced RAG and tool-use capabilities. Comparable in performance to GPT-4’s previous best version and well priced at $3/M input tokens and $15/M output tokens.
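To make the per-token pricing concrete, here is a minimal sketch of a cost estimate using the $3/M input and $15/M output figures quoted above. The function name and the example workload (10,000 requests with 2,000 prompt tokens and 300 completion tokens each) are made up for illustration; real bills depend on the provider's tokenizer and current price list.

```python
# Prices quoted in the post for Command R+ (USD per million tokens).
INPUT_PRICE_PER_M = 3.0
OUTPUT_PRICE_PER_M = 15.0


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float = INPUT_PRICE_PER_M,
                  output_price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """Return the estimated cost in USD for the given token counts."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


# Hypothetical workload: 10,000 requests, each with 2,000 prompt tokens
# and 300 completion tokens.
requests = 10_000
total = estimate_cost(requests * 2_000, requests * 300)
print(f"Estimated cost: ${total:,.2f}")  # prints "Estimated cost: $105.00"
```

Swapping in another model's prices through the two optional parameters gives a quick like-for-like comparison for the same workload.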

Google’s Gemini 1.5 Pro (Apr 09):

Public access via the Gemini API (no more waitlists). Upgrades include audio understanding (the 1M context window implies up to 9.5 hours of audio), unlimited files, better system instructions, JSON mode and improvements to function calling. Notably, Google also released a paper on linear attention, Leave No Context Behind, which aims to “scale Transformer-based Large Language Models (LLMs) to infinitely long inputs with bounded memory and computation”. May the context window be a thing of the past!
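As a back-of-the-envelope check on the audio figure, the sketch below derives the token rate implied by the two numbers quoted above (1M tokens, 9.5 hours). This is just arithmetic on the post's figures, not an official tokens-per-second number from Google, and the 128k window in the second print is a hypothetical comparison point.

```python
CONTEXT_TOKENS = 1_000_000  # context window quoted in the post
AUDIO_HOURS = 9.5           # audio capacity quoted in the post


def implied_tokens_per_second(context_tokens: int, hours: float) -> float:
    """Tokens consumed per second of audio if `hours` exactly fills the window."""
    return context_tokens / (hours * 3600)


def audio_hours_for(context_tokens: int, tokens_per_second: float) -> float:
    """Hours of audio that fit in a window at a given token rate."""
    return context_tokens / tokens_per_second / 3600


rate = implied_tokens_per_second(CONTEXT_TOKENS, AUDIO_HOURS)
print(f"Implied rate: {rate:.1f} tokens per second of audio")

# Same implied rate applied to a hypothetical 128k-token window.
print(f"128k window: {audio_hours_for(128_000, rate):.2f} hours of audio")
```

At roughly 29 tokens per second of audio, a 128k window would hold a little over an hour, which shows how much of the headline number comes from the long context itself.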

OpenAI’s GPT4 reclaims top spot (Apr 09):

GPT-4 Turbo with Vision was released along with upgrades that appear to have significantly improved math and reasoning, enough to reclaim the throne. This was quite a flex from OpenAI: topping the leaderboard again with an upgrade rather than having to release GPT-5.

Mistral’s Mixtral 8×22b (Apr 09):

The release immediately made Mixtral the best open-source model on the leaderboard. A sparse MoE model, it is fluent in 5 languages, has a 64k context window, is natively capable of function calling, and is good at code and math. Initial community evals place it on par with Mistral Medium.
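For readers new to the term, a sparse MoE (mixture of experts) layer sends each token through only a few of its expert sub-networks, chosen by a small router. The sketch below illustrates that general idea in plain Python; it is not Mistral's implementation. The eight toy "experts" echo the 8× in the model name, while `k=2`, the router scores and the scaling experts are assumptions picked for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and normalise their weights to sum to 1."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    out = [0.0] * len(x)
    for idx, w in route_top_k(router_logits, k):
        y = experts[idx](x)  # only k experts are ever evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Toy setup: 8 "experts", each scaling the input by a different factor.
experts = [lambda x, s=s: [s * xi for xi in x] for s in range(1, 9)]
# Hypothetical router scores for one token.
router_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

chosen = route_top_k(router_logits)
print([i for i, _ in chosen])  # prints [1, 4]
print(moe_layer([1.0, 2.0], experts, router_logits))
```

Because only the selected experts run for each token, the compute per token stays well below what the total parameter count would suggest, which is the main appeal of the design.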

X’s Grok 1.5 Vision (April 12):

Closed source, 128k context and multimodal, it is comparable to GPT-4V in multimodal capabilities, with a big improvement in math and reasoning benchmarks as well. While great, it needs to be either a) open source, b) better than GPT4-Turbo or c) preferably both, to get the attention it may deserve amidst the sea of performant models.

Reka (Apr 15):

Closed source, multimodal, 128k context window, multilingual (32 languages). It sits just behind GPT4V on human evals for multimodal, beating Claude Opus. Impressive launch using the 3 Body trailer!

It is quite pricey though, and the jury’s still out on the value-for-money question, as it comes in comparable to Mistral Medium on the leaderboard and behind the much cheaper Mixtral 8×22B! Worth noting, though: they use a “modular encoder-decoder transformer architecture”, which lends itself well to building powerful multimodal LLMs. Definitely worth tracking!

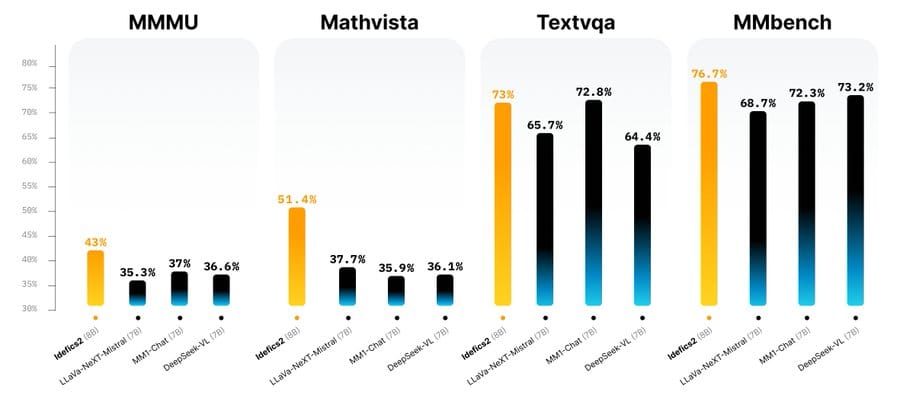

Huggingface’s Idefics2 (Apr 15):

Fully open-source with public datasets, this is a multimodal, 8B-parameter vision-language model with enhanced OCR capabilities. Performance is comparable to DeepSeek-VL and LLaVA-NeXT-Mistral-7B, and competitive with 30B models like MM1-Chat. It lends itself well to use cases requiring interleaved images (resolution up to 980 × 980) and text in the input query.

EleutherAI’s Pile T5 (Apr 15):

Another encoder-decoder model (similar to Reka), but open source and reportedly a great choice for tasks requiring code (trained on the Pile). From the release: “A new version of T5, replacing the pretrained dataset with Pile and switching out the original T5 tokenizer with Llama tokenizer.” It outperforms T5-v1.1 and is comparable to Flan-T5 on MMLU and BigBench Hard.

Microsoft’s WizardLM-2 (April 15):

Boasting performance comparable to proprietary models such as Claude 3 Sonnet, Microsoft’s open-sourced WizardLM-2 quickly ran up the leaderboard charts to claim the SOTA open source model crown for a short while. It was soon taken down, because they forgot to do…um “toxicity testing”. Still worth leaving the following here for reference for when they do re-release the model.

Meta’s Llama3 (April 18):

Creating waves, the Llama3 announcement has the open source community singing Hallelujah! SOTA performance across benchmarks on both pre-trained and instruct models, beating both Gemini 1.5 Pro and Claude Sonnet. And that is with a 400B-parameter model still in training. They even created a new human eval set with 1800 prompts, on which Llama3 beats all similar-sized models, and it is already encroaching into GPT4 territory on MMLU evals. This is huge for open source, no doubt. The only critique would be the small context window, but let’s not get picky here. The following tweet from Alessio captures all the details quite well.

And today we have it on the lmsys leaderboard - the 70B model already ahead of Command R+ and comparable to Claude Sonnet and Gemini Pro as per initial results. Well done Meta! Looking at you now Google and X - time to go open source?

Taking two steps back, it is worthwhile to remember that OpenAI hasn’t released a formal next model after GPT4 (released March 2023). The following is a good way to summarize where we are with the OpenAI releases and the progression of GPT4 as a buildup to GPT5:

That’s a lot of models in 2 weeks, and you may wonder who else is in the arena. Stanford NLP’s latest annual report has got your back, summarizing how ubiquitous these foundation models could become with so many organizations working on multiple models.

So, there we have it! The gap between OSS and proprietary models continues to close. All eyes are now on GPT5 - the expectations are immense as the bar has been raised quite high (with a 400B open source model currently in training and on its way). Has open source finally caught up, or will OpenAI do what it does best - raise it higher? Will foundation models end up being a commodity? Is this the beginning of the price war for market share?

All great questions, only time will tell!

Until next time, happy reading!