First things first: MCP = Model Context Protocol.

Some call it the "API moment for AI agents," others the "next evolution of LLM architecture." Whatever you call it, it isn't insignificant. As AI applications have exploded across industries, fragmented context management has become a massive headache. Enter Anthropic's Model Context Protocol (MCP)!

Great, everyone wants to know about it, but what is it?

The Big Idea: Models Are Only as Good as Their Context

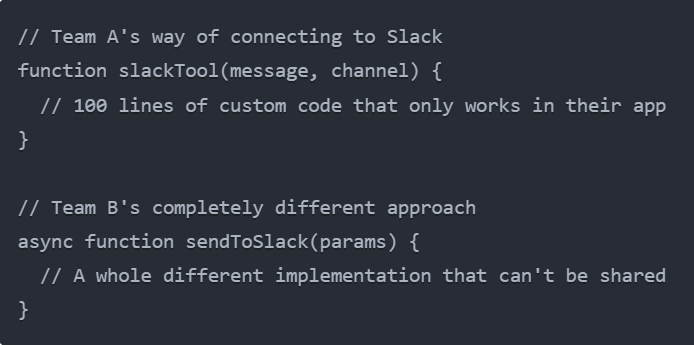

Remember the old days (like, a year ago) when we'd manually copy-paste context into our AI chatbots? Those dark ages are behind us, but we've since created a mess of custom implementations for connecting AI to data sources. Every team built its own, each with different ways of bringing in tools and data... sound familiar?

MCP's mission is brutally simple: create an open protocol that standardizes how AI apps and agents interact with your tools and data sources. Think of it as what APIs did for web apps, or what Language Server Protocol (LSP) did for IDEs - but now for AI.

The Before & After MCP Glow-Up

BEFORE MCP: 🥴

Each team reinvents the wheel with custom prompts, different tool interfaces, and weird ways of bringing in context. Want your AI to talk to Salesforce? Build it yourself. GitHub? Another custom implementation. Your calendar? Start from scratch again. It's integration hell.

AFTER MCP: ✨

One standard way to define tools, resources, and prompts. Build a Slack MCP server once, and any MCP-compatible client can use it instantly. Claude, Cursor, Goose... they all just work with your tools.

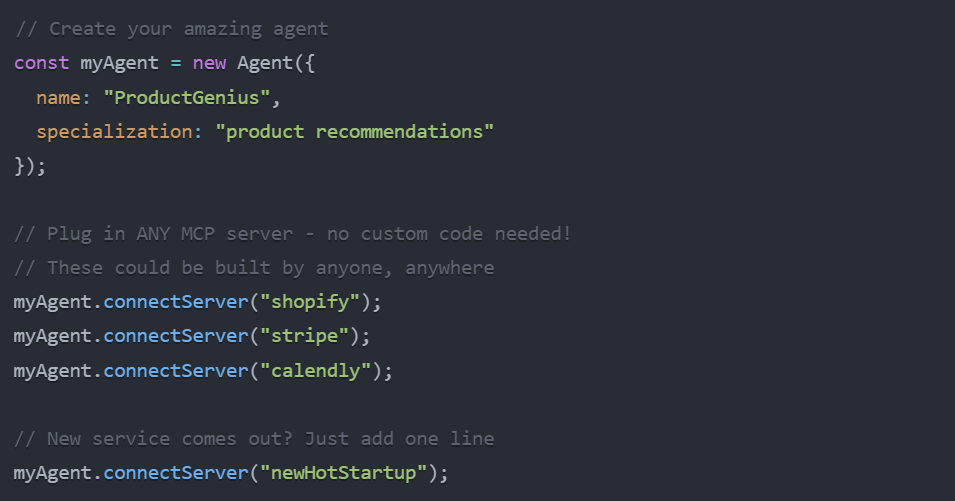

Code for illustration purposes only

The MCP Ecosystem: Clients & Servers

The magic of MCP happens through a client-server architecture that's refreshingly straightforward:

MCP Clients: These are your AI applications that leverage the protocol. Think Anthropic's own apps, Cursor, Windsurf, and agents like Block's Goose.

MCP Servers: These are the wrappers or federators that provide access to various systems - from databases to your local git repository.
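Under the hood, clients and servers exchange JSON-RPC 2.0 messages. Here is a minimal, standard-library-only sketch of that framing: a request carries an `id` and expects a response, while a notification has no `id`. The method names (`initialize`, `notifications/initialized`, `tools/list`) come from the MCP specification; the `protocolVersion` string and the `clientInfo` values are illustrative assumptions, so check the current spec before relying on them. In practice the official SDKs build these messages for you.

```python
import json
from itertools import count
from typing import Any, Optional

_ids = count(1)  # each request gets a fresh id so its response can be matched to it

def request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Encode a JSON-RPC 2.0 request: it carries an id and expects a response."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

def notification(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Encode a JSON-RPC 2.0 notification: no id, so no response is expected."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# The client opens a session with an initialize request...
init = request("initialize", {
    "protocolVersion": "2024-11-05",                                # illustrative version string
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},   # hypothetical client
})
# ...confirms it is ready once the server has answered...
ready = notification("notifications/initialized")
# ...and can then ask the server what it offers.
list_tools = request("tools/list")

for line in (init, ready, list_tools):
    print(line)
```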

Once your client is MCP-compatible, it can connect to any MCP server without additional integration work. And if you're a tool or API provider, you build your MCP server once and it works with every compatible client.

MCP turns the "N×M problem" into an N+M one: instead of each app needing a custom integration with each data source, they all speak one standard protocol.
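The arithmetic behind that claim is easy to check. With N apps and M data sources (the counts below are made up for illustration), custom integrations grow multiplicatively while MCP adapters grow additively:

```python
def integrations_without_mcp(num_apps: int, num_sources: int) -> int:
    """Every app builds a custom connector to every data source: N x M."""
    return num_apps * num_sources

def integrations_with_mcp(num_apps: int, num_sources: int) -> int:
    """Each app implements the MCP client side once and each source ships one server: N + M."""
    return num_apps + num_sources

apps, sources = 5, 20  # illustrative numbers only, not adoption data
print(integrations_without_mcp(apps, sources))  # 100
print(integrations_with_mcp(apps, sources))     # 25
```

The gap widens as either side grows: adding a 21st data source costs five new connectors without MCP, but only one new server with it.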

The Three Pillars of MCP

MCP standardizes interaction through three main interfaces that are like the holy trinity of AI context management. Let's break them down with some real examples:

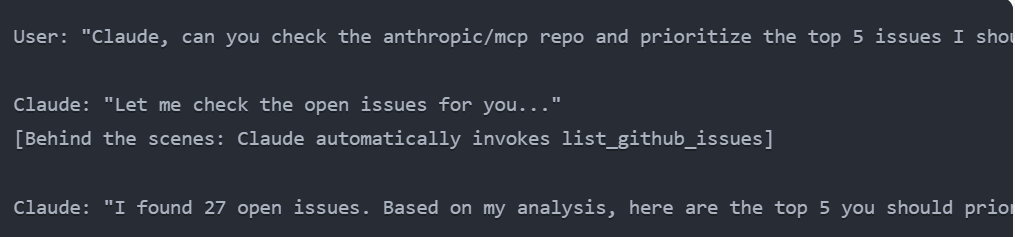

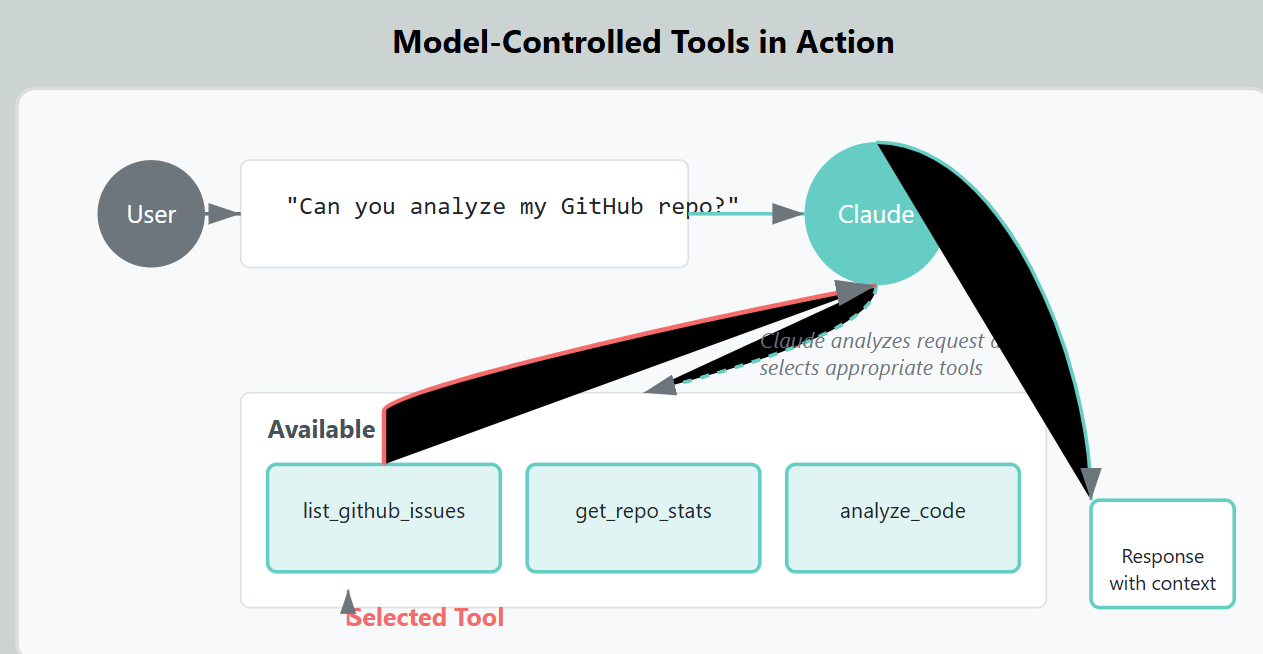

1. Tools 🛠️ (Model-Controlled)

Tools are the functions your AI can call when it needs to. The key part? The AI decides when to use them, not you. The model looks at what you're asking, thinks "I need data from X to answer this," and boom - invokes the right tool.
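To make that concrete, here is a rough sketch of the server side of a tool, written against raw JSON-RPC rather than an SDK. The server advertises each tool's name, description, and JSON Schema via `tools/list`; when the model decides it needs the data, the client sends `tools/call` and gets back a list of content items. The `get_open_tickets` tool and its data are hypothetical.

```python
import json
from typing import Any, Callable

# What the server advertises in a tools/list response: a name, a description
# the model reads, and a JSON Schema for the arguments.
TOOLS: list[dict[str, Any]] = [{
    "name": "get_open_tickets",  # hypothetical tool
    "description": "Return open support tickets for a customer.",
    "inputSchema": {
        "type": "object",
        "properties": {"customer": {"type": "string"}},
        "required": ["customer"],
    },
}]

# Hypothetical backing data; a real server would query a ticketing system.
_FAKE_DB = {"acme": ["#101 login fails", "#107 invoice missing"]}

def get_open_tickets(customer: str) -> str:
    return "\n".join(_FAKE_DB.get(customer.lower(), [])) or "No open tickets."

HANDLERS: dict[str, Callable[..., str]] = {"get_open_tickets": get_open_tickets}

def handle(message: str) -> str:
    """Answer a JSON-RPC request for tools/list or tools/call."""
    req = json.loads(message)
    if req["method"] == "tools/list":
        result: dict[str, Any] = {"tools": TOOLS}
    elif req["method"] == "tools/call":
        params = req["params"]
        text = HANDLERS[params["name"]](**params.get("arguments", {}))
        # Tool results come back as a list of typed content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

# The model asked about Acme's tickets, so the client issues a tools/call:
call = json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                   "params": {"name": "get_open_tickets",
                              "arguments": {"customer": "Acme"}}})
print(handle(call))
```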


In action, there's no need to explicitly tell the AI to use the tool: the model decides on its own when it needs the data.

The model decides when to call tools based on the conversation context

2. Resources 📚 (Application-Controlled)

Resources are data packages that the server exposes to the application. The key difference from tools? The application (not the model) controls when to use resources. They're like attachments or data files that enhance the context.
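A minimal sketch of the two resource operations, assuming the message shapes from the MCP specification: `resources/list` returns metadata only, and `resources/read` returns the contents for one URI. The application picks which URI to read, typically because the user clicked it. The `docs://` URI and its contents are hypothetical.

```python
import json
from typing import Any

# Resources are addressed by URI. This URI and its contents are hypothetical.
RESOURCES: dict[str, dict[str, str]] = {
    "docs://team/style-guide": {
        "name": "Team style guide",
        "mimeType": "text/markdown",
        "text": "# Style guide\nUse active voice.",
    },
}

def list_resources() -> dict[str, Any]:
    """Result body for resources/list: metadata only, no contents."""
    return {"resources": [
        {"uri": uri, "name": r["name"], "mimeType": r["mimeType"]}
        for uri, r in RESOURCES.items()
    ]}

def read_resource(uri: str) -> dict[str, Any]:
    """Result body for resources/read: the actual contents for one URI."""
    r = RESOURCES[uri]
    return {"contents": [{"uri": uri, "mimeType": r["mimeType"], "text": r["text"]}]}

# The application (not the model) decides what to attach, e.g. after a user click.
listing = list_resources()
chosen = listing["resources"][0]["uri"]
attachment = read_resource(chosen)
print(json.dumps(attachment, indent=2))
```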


In Claude for desktop, you'll see these as attachments you can click on to send to the model:

Resources showing up as attachments in Claude for desktop

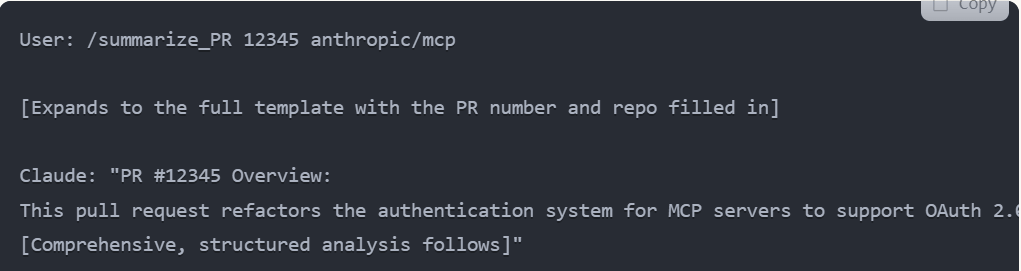

3. Prompts 📝 (User-Controlled)

Prompts are predefined templates that users can trigger. Think of them as productivity shortcuts - why type out a long, complex prompt when you can just use a slash command?
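Here is a sketch of how a slash command could expand, assuming the `prompts/list` and `prompts/get` shapes from the MCP specification. The `summarize-pr` prompt, its wording, and the use of `string.Template` for filling in arguments are illustrative choices, not how any particular server does it.

```python
from string import Template
from typing import Any

# A hypothetical prompt a server could expose, surfaced to users as /summarize-pr.
PROMPTS: dict[str, dict[str, Any]] = {
    "summarize-pr": {
        "description": "Summarize a pull request for release notes.",
        "arguments": [{"name": "pr_url", "description": "Link to the PR", "required": True}],
        "template": Template("Summarize the pull request at $pr_url in three bullet "
                             "points for release notes. Flag any breaking changes."),
    },
}

def list_prompts() -> dict[str, Any]:
    """Result body for prompts/list: what the user can pick from."""
    return {"prompts": [
        {"name": n, "description": p["description"], "arguments": p["arguments"]}
        for n, p in PROMPTS.items()
    ]}

def get_prompt(name: str, arguments: dict[str, str]) -> dict[str, Any]:
    """Result body for prompts/get: the template expanded into chat messages."""
    p = PROMPTS[name]
    missing = [a["name"] for a in p["arguments"]
               if a["required"] and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    text = p["template"].substitute(arguments)
    return {"description": p["description"],
            "messages": [{"role": "user", "content": {"type": "text", "text": text}}]}

# The user types "/summarize-pr", fills in the link, and the client expands it.
print([p["name"] for p in list_prompts()["prompts"]])
expanded = get_prompt("summarize-pr", {"pr_url": "https://example.com/pr/42"})
print(expanded["messages"][0]["content"]["text"])
```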


In practice, this is clutch for teams who want consistent, high-quality interactions with specific formats and frameworks.

Slash commands expanding into powerful custom prompts

The beauty lies in the subtlety: You don't need to understand how all these work under the hood. As a developer, you just connect to MCP servers that handle these interactions, and your app instantly gets superpowers.

Adoption Explosion

The numbers since launch are impressive, and thanks in part to a wave of AI influencers, they're only accelerating:

1,100+ community-built servers

Major applications like Cursor and Windsurf becoming MCP clients

Official integrations from companies for their systems

Active open-source community building the ecosystem

But the real story is how MCP fits into the larger vision for AI agents.

The Agent Revolution: MCP as the Foundation

If you've been following Anthropic's "Building Effective Agents" work, you'll recognize how perfectly MCP slots into the augmented LLM concept. The ideal agent is an LLM enhanced with:

Retrieval systems

Tool invocation capabilities

Memory management

MCP effectively forms the bottom layer that connects these agents to the external world in a standardized way. This creates self-evolving agents that can:

Discover new capabilities on the fly

Expand beyond their initial programming

Focus on their core task while MCP handles all the connecting bits

The Secret Sauce: Sampling & Composability 🔥

What makes MCP particularly powerful for agents are two advanced capabilities that take things to the next level.

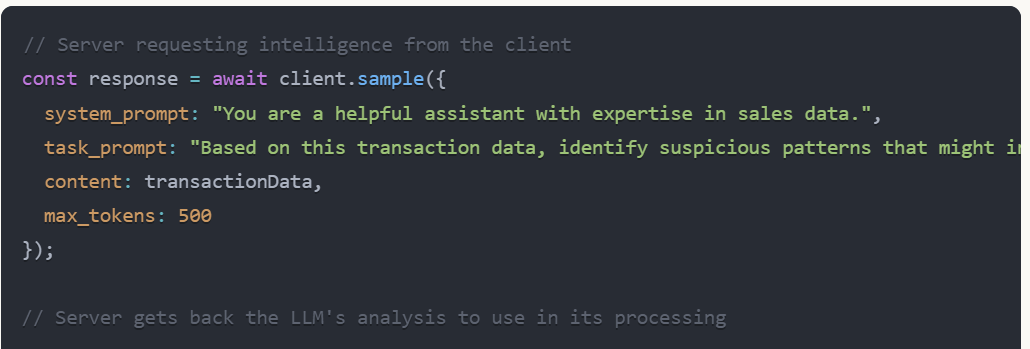

Sampling: Servers Getting Smart 🧠

Sampling is like giving the server a brain. It allows an MCP server to request completions (LLM inference calls) from the client.


Why is this so powerful? Imagine a Salesforce MCP server that doesn't just provide raw data but can actually analyze it intelligently. The server says: "I need Claude to look at this data pattern and tell me if it's suspicious" - and requests that intelligence on demand.

What's especially appealing is the control it preserves. The client maintains full control over:

Privacy (what data the model sees)

Costs (how many tokens are generated)

Model choice (which model is used)
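A sketch of that round trip, assuming the `sampling/createMessage` shapes from the MCP specification. The server sends its request, and the client applies its own policy before any inference happens. The token cap, the placeholder model name, and the `fake_llm` stand-in are all hypothetical.

```python
import json
from typing import Any

def sampling_request(req_id: int, question: str, data: str) -> dict[str, Any]:
    """Server -> client: ask the client's LLM to reason about some data."""
    return {
        "jsonrpc": "2.0",
        "id": req_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user",
                          "content": {"type": "text", "text": f"{question}\n\nData:\n{data}"}}],
            "systemPrompt": "You are a careful fraud analyst.",
            # Preferences are hints only; the client is free to ignore them.
            "modelPreferences": {"hints": [{"name": "claude"}], "intelligencePriority": 0.8},
            "maxTokens": 2000,
        },
    }

# Hypothetical client-side policy values.
MAX_TOKENS_ALLOWED = 500
APPROVED_MODEL = "example-model"  # placeholder, not a real model id

def fake_llm(model: str, messages: list[dict[str, Any]], max_tokens: int) -> str:
    """Stand-in for a real inference call so the sketch runs offline."""
    return f"[{model}, <= {max_tokens} tokens] Refund burst looks suspicious."

def client_handle(req: dict[str, Any]) -> dict[str, Any]:
    """Apply the client's own limits, run inference, and reply to the server."""
    params = req["params"]
    max_tokens = min(params["maxTokens"], MAX_TOKENS_ALLOWED)  # cost control
    # Privacy: a real client could show params["messages"] to the user for approval here.
    model = APPROVED_MODEL                                      # model choice
    text = fake_llm(model, params["messages"], max_tokens)
    return {"jsonrpc": "2.0", "id": req["id"], "result": {
        "role": "assistant",
        "content": {"type": "text", "text": text},
        "model": model,
        "stopReason": "endTurn",
    }}

response = client_handle(sampling_request(7, "Is this pattern suspicious?",
                                          "3 refunds from one card in 2 minutes"))
print(json.dumps(response["result"], indent=2))
```

The server asked for 2,000 tokens and a Claude model, but the reply reports whatever the client actually chose, which is the whole point.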

Sampling allows servers to request AI reasoning while preserving client control

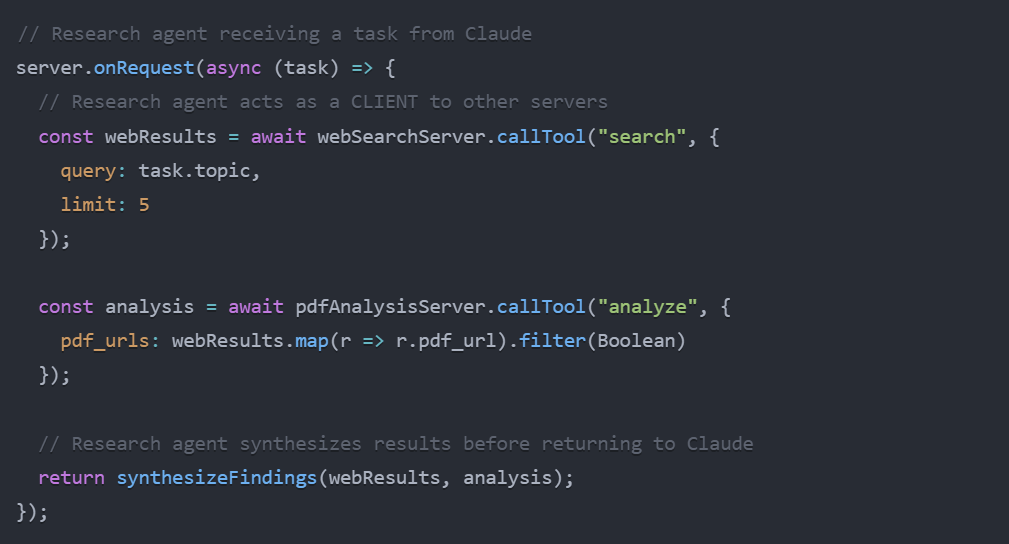

Composability: AI Inception 🤯

This can get a little mind-bending, so bear with me: any application can be both an MCP client AND an MCP server. Think of it as AI inception: agents within agents within agents.

In practice, this creates hierarchical systems where specialized agents handle different parts of a complex task.
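A toy sketch of such a hierarchy. `MiniServer` is a hypothetical stand-in, not an SDK class, and plain method calls stand in for what would be MCP `tools/call` round trips. The orchestrator is a server to the user's app and, at the same time, a client of the two leaf agents.

```python
from typing import Callable

class MiniServer:
    """Just enough of a 'server' to expose named tools (hypothetical, not an SDK)."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable[[str], str]] = {}

    def tool(self, fn: Callable[[str], str]) -> Callable[[str], str]:
        self.tools[fn.__name__] = fn  # register the function under its own name
        return fn

    def call(self, tool: str, arg: str) -> str:
        return self.tools[tool](arg)

# Leaf agents: each one is only a server.
research = MiniServer("research-agent")
writing = MiniServer("writing-agent")

@research.tool
def find_facts(topic: str) -> str:
    return f"facts about {topic}"  # stand-in for real retrieval

@writing.tool
def draft_report(facts: str) -> str:
    return f"REPORT: {facts}"      # stand-in for real generation

# The orchestrator is a server to the user's app AND a client of the leaf agents.
orchestrator = MiniServer("orchestrator")
downstream = {"research": research, "writing": writing}

@orchestrator.tool
def research_and_report(topic: str) -> str:
    facts = downstream["research"].call("find_facts", topic)  # acting as a client
    return downstream["writing"].call("draft_report", facts)  # acting as a client

print(orchestrator.call("research_and_report", "MCP adoption"))
```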


Each layer can:

Have its own specialized knowledge

Access different data sources

Make independent decisions

But still respect the client's overall control

The result? Super-complex tasks broken down into manageable pieces, handled by specialized agents, while keeping privacy and control intact.

Agents working together in a hierarchical structure through MCP

For vibe coders: Think of it like microservices but for AI. Each agent does one thing really well, and they all talk to each other through a standard protocol. You don't need to build everything - just connect the right agents together!

What's Coming: The MCP Roadmap 🚀

With the caveat that this is what we know so far, here's what's coming soon:

Remote Servers & OAuth: Going Public 🌐

MCP is leveling up with OAuth 2.0 support, enabling remotely hosted servers that clients can reach over the network via SSE (Server-Sent Events). This is HUGE.


What this means:

Servers can live on public URLs (not just your local machine)

Proper authentication flows with OAuth 2.0

Connect to services like Slack, GitHub, etc. with proper permissions

No more stdio limitations!
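A rough sketch of the client side of a remote connection, assuming the early HTTP+SSE transport: the server first announces, in an `endpoint` event, where the client should POST its requests, then streams JSON-RPC messages as `message` events. The transport has since been revised, so treat the event names as an assumption to check against the current spec. The URL path, session id, and token are made up.

```python
import json
from typing import Iterator

def _field_value(line: str, field: str) -> str:
    """Strip 'field:' and, per the SSE format, at most one leading space."""
    value = line[len(field) + 1:]
    return value[1:] if value.startswith(" ") else value

def parse_sse(stream: str) -> Iterator[tuple[str, str]]:
    """Yield (event, data) pairs from Server-Sent Events text."""
    event, data_lines = "message", []
    for line in stream.splitlines():
        if line == "":                      # a blank line ends one event
            if data_lines:
                yield event, "\n".join(data_lines)
            event, data_lines = "message", []
        elif line.startswith("event:"):
            event = _field_value(line, "event")
        elif line.startswith("data:"):
            data_lines.append(_field_value(line, "data"))
    if data_lines:                          # stream ended without a trailing blank line
        yield event, "\n".join(data_lines)

def auth_headers(access_token: str) -> dict[str, str]:
    """Headers for POSTing JSON-RPC to a remote server once the OAuth flow is done."""
    return {"Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json"}

# A made-up stream as a remote server might send it.
sample = (
    "event: endpoint\n"
    "data: /messages?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n'
    "\n"
)
events = list(parse_sse(sample))
post_path = events[0][1]                    # where the client should POST requests
reply = json.loads(events[1][1])
print(post_path, reply["result"], auth_headers("token-from-oauth")["Authorization"])
```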

OAuth flow allowing secure authentication between client, server, and third-party services

MCP Registry: The App Store for AI Capabilities 📱

A unified metadata service for MCP servers is in development (think npm for MCP servers).


The registry will handle:

Server discoverability (find the right server for any task)

Verification (is this the official Slack server or a sketchy one?)

Version tracking (which version of a server are you using?)

Trust and security concerns (who built this? is it trusted?)

This unlocks something amazing: self-evolving agents. Imagine asking your agent to analyze Grafana logs, and it automatically discovers and installs the Grafana MCP server from the registry, even if it never knew about Grafana before! 🤯

MCP Registry concept showing categorized, verified servers

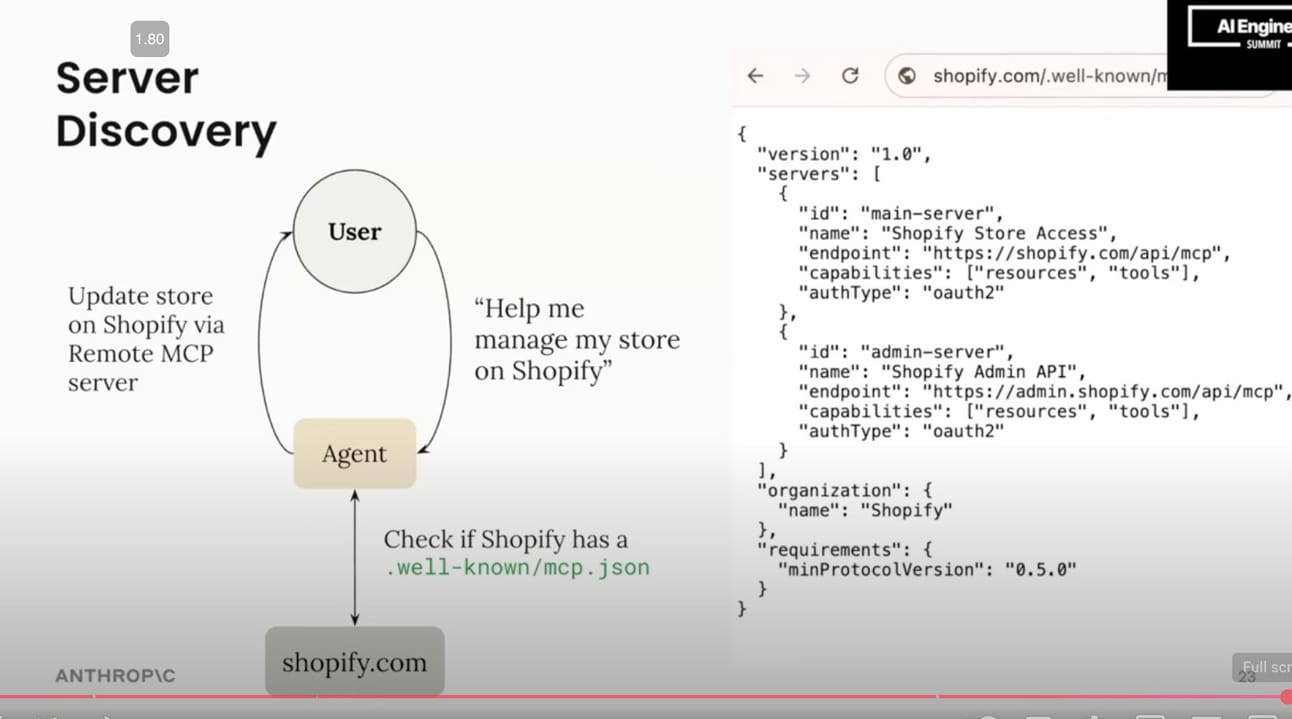

Well-Known MCP

Taking it up another level: similar to the .well-known convention for websites, a well-known MCP file would allow any website to expose its MCP capabilities through a standard URL.


Now imagine this scenario:

You tell your agent: "Help me manage my store on shopify.com"

Agent checks if shopify.com has a .well-known/mcp.json file

Discovers Shopify's official MCP server

Automatically connects and helps you manage your store
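The discovery step could look something like the sketch below. Because well-known MCP is still a proposal, the manifest format here (a `servers` list with a `url` field) is entirely hypothetical, and the example parses a made-up manifest instead of fetching a real one. A live agent would GET the URL, for example with `urllib.request.urlopen`.

```python
import json
from typing import Any
from urllib.parse import urlunsplit

def well_known_url(domain: str) -> str:
    """Where an agent would look for a site's MCP manifest (proposed convention)."""
    return urlunsplit(("https", domain, "/.well-known/mcp.json", "", ""))

def pick_server(manifest_text: str) -> str:
    """Extract the first server endpoint (field names are hypothetical)."""
    manifest: dict[str, Any] = json.loads(manifest_text)
    return manifest["servers"][0]["url"]

# A made-up manifest; no official format is assumed.
example_manifest = json.dumps({
    "name": "Example Store",
    "servers": [{"url": "https://mcp.example.com/sse", "auth": "oauth2"}],
})

print(well_known_url("shopify.com"))   # the URL the agent would check
print(pick_server(example_manifest))   # the endpoint it would then connect to
```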

Pair this with Anthropic's computer use model (which can navigate UIs by clicking and typing), and you get agents that seamlessly switch between API calls when available and UI navigation when needed!

Agents discovering MCP capabilities directly from websites

This is like when Progressive Web Apps changed everything. Suddenly every website can have a standard way to talk to AI, without building custom integrations for each AI assistant. One standard to rule them all!

What It All Means: The Future of AI Development 🔮

MCP isn't just another dev tool - it's a fundamental shift in how we build AI systems. It's like when REST APIs transformed web development or when containers changed how we deploy apps.

With MCP creating a clean separation between an agent's brain and the data it needs, developers can finally:

Build Once, Run Everywhere ♻️ Write an MCP server once, and every MCP-compatible client can use it.


Self-Upgrading AI 📈 Your agents can discover new capabilities on their own, for example by finding servers in a registry.

Specialization Without Isolation 🧩 Build tiny, focused agents that work together through MCP.

The research agent doesn't need to know how to write reports, and the writing agent doesn't need to know how to do research - they just need to speak MCP!

Off the Hype Train: The Current Limitations of MCP 🚧

With all the excitement around MCP, it's worth taking a step back for a reality check. Like any emerging tech, MCP isn't a perfect solution (yet), and there are several important limitations to understand:

1. Still Early Days = Early Problems 👶

MCP is still in its infancy, with the first public release happening just months ago. That means:

Immature Ecosystem: Despite the 1,100+ community servers, many are basic implementations or proofs of concept

Evolving Standards: The protocol is still changing, which can break existing implementations

Documentation Gaps: The docs are improving but still leave many implementation details to developers to figure out

2. Security Concerns 🔒

While MCP has security built in, there are still valid concerns:

Third-Party Servers: How do you verify that a community-built server isn't collecting or misusing data?

Permission Scopes: The current permission model is relatively simple compared to mature OAuth implementations

Attack Surface: Each new server creates another potential entry point for security issues

3. Performance Overhead ⏱️

The abstraction layer comes with costs:

Latency: Going through MCP adds round-trips that can make interactions slower than direct API calls

Serialization Overhead: JSON-RPC serialization/deserialization adds computational overhead

Complex Deployment: Running multiple MCP servers can be resource-intensive, especially for smaller applications

For time-sensitive applications, this overhead can be a real concern.

4. "Not Quite Standard" Yet 📏

Despite the push for standardization:

No Formal Standards Body: MCP isn't recognized by W3C, IETF, or similar standard organizations yet

Anthropic-Driven: While open source, development is still heavily Anthropic-led

Competing Approaches: Other large AI companies aren't fully bought in and may develop competing standards

5. Integration Complexities 🧩

Some practical challenges include:

Server Management: For complex deployments, you need to manage multiple servers with different update cycles

Debugging Difficulties: Tracing issues through layers of MCP can be challenging

Error Handling: Cascading errors across multiple servers can be difficult to diagnose

6. Enterprise Adoption Barriers 🏢

For enterprise use, there are additional hurdles:

Compliance Concerns: Many regulated industries need time to evaluate if MCP meets their governance requirements

Legacy System Integration: Connecting MCP to decades-old enterprise systems isn't straightforward

Organizational Change: Getting different teams to adopt a unified protocol requires significant effort

The Bottom Line: Promising But Not Perfect 💰

While foundation models grab all the headlines, protocols like MCP represent an important piece of the AI puzzle. The battle for AI isn't just about the biggest models - it's about creating ecosystems where these models can thrive.

MCP is positioning itself to become the TCP/IP of the agent ecosystem, but it's not quite there yet.

The hype is justified to some extent, but so is the skepticism. Early adopters will face challenges alongside the benefits. For most developers, the right approach is to experiment with MCP while keeping alternatives in mind.

It is going to be an interesting 2025 as MCP matures and we see which parts of its current roadmap become reality and which parts get reinvented along the way. The protocols might be boring, but that doesn’t make them any less important than the next flashy demo you may come across! So stay on it.

I would be remiss if I didn't call out the workshop by Anthropic’s Mahesh Murag, which gave me multiple aha moments about MCP’s capabilities and the inspiration for this post. It's a must-see.

Until next time...Happy building! 🚀