What a time this is to build. With AI agents now ubiquitous and productivity/workflow enhancement apps having a moment, this is an opportune time to take stock of where we are in the agent landscape. Below is a good way to look at the “AI Agents Stack”.

Before we “delve deeper” (iykyk) into this, we should take a moment to remind ourselves what these agents enable. It is literally your job on the line. A recent study by OpenAI and UPenn concluded that “about 15% of all worker tasks in the U.S. could be completed significantly faster at the same level of quality. When incorporating software and tooling built on top of LLMs (i.e., Vertical SaaS), this share increases to between 47% and 56% of all tasks.”

Have I got your attention yet?

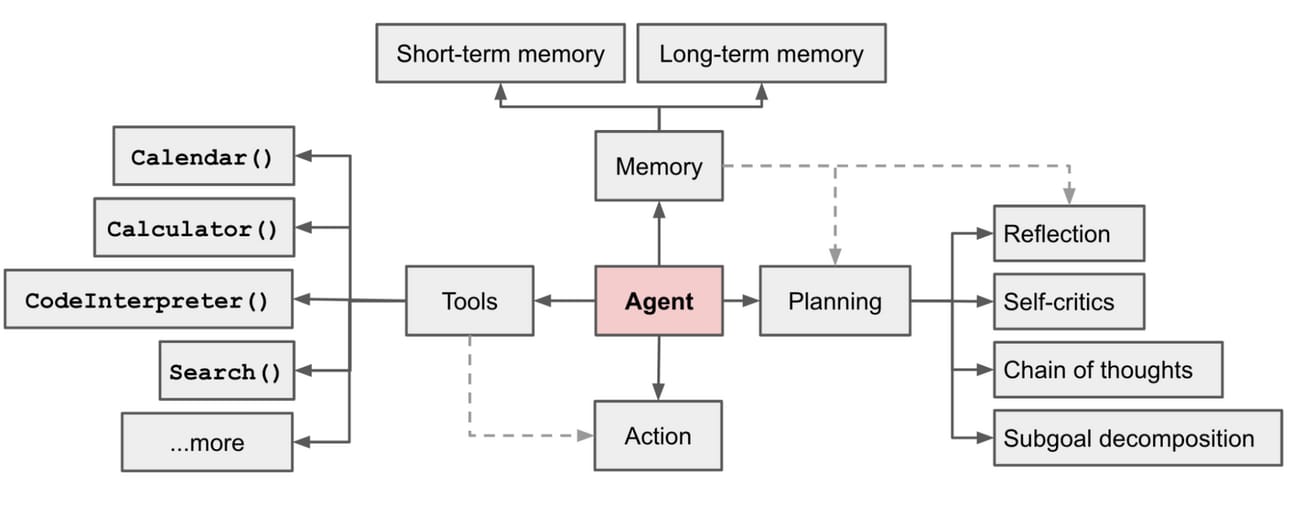

So, let us quickly recap what agents are. The diagram below, from Lilian Weng’s blog, has been referenced a number of times, best imo by swyx: “add a while loop to the below and you have an agent!”
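To make swyx’s point concrete, here is a minimal Python sketch of that loop: the model plans a step, calls a tool, observes the result, and repeats until it can answer. `fake_llm`, `TOOLS`, `Step` and `run_agent` are hypothetical stand-ins, not any framework’s API; a real agent would swap `fake_llm` for an actual model call that returns a tool choice or a final answer.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical tool registry: the agent can call these by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}


@dataclass
class Step:
    """One model decision: either call a tool or return a final answer."""
    tool: Optional[str] = None
    args: str = ""
    answer: Optional[str] = None


def fake_llm(history: list[str]) -> Step:
    """Stand-in for a real LLM call (assumption): call one tool, then answer."""
    observations = [h for h in history if h.startswith("observation:")]
    if not observations:
        return Step(tool="add", args="2,3")
    return Step(answer=f"The result is {observations[-1].split(':', 1)[1].strip()}")


def run_agent(task: str, llm: Callable[[list[str]], Step] = fake_llm, max_steps: int = 5) -> str:
    history = [f"task: {task}"]
    for _ in range(max_steps):  # the "while loop", bounded so it cannot spin forever
        step = llm(history)  # plan
        if step.answer is not None:
            return step.answer
        result = TOOLS[step.tool](step.args)  # act
        history.append(f"observation: {result}")  # observe, then loop back to plan
    return "stopped: step limit reached"


print(run_agent("What is 2 + 3?"))  # The result is 5
```

The `max_steps` cap is a deliberate design choice: without it, a model that never produces a final answer would loop indefinitely.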

At the beginning of this year, I said 2024 would be the year AI moved from demo to production, from the infra layer to the application layer. This has turned out to be largely true, driven in the latter half by the overwhelming focus on “agentic workflows”.

Let’s start where the end user interacts with these agents, i.e. the Vertical Agents layer. Vertical agents are agents designed to specialize in specific tasks (“vertical” here could mean a domain or a function). For example, Lindy is a no-code/low-code workflow automation tool that takes small tasks, chains them, adds triggers, makes them iterative and voila, you have an agent. Think: calendar event starts (trigger) → if a virtual meeting exists → record the meeting → transcribe and summarize → send an email recap → send the user notes → Slack DM the meeting summary → answer follow-up questions.
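The trigger-and-chain idea can be sketched in a few lines of Python. This is not Lindy’s API; every function below is a hypothetical placeholder for a real integration (recording, an LLM summarizer, email/Slack). Each step takes a shared context dict and returns an updated one, and a condition step returns `None` to stop the chain.

```python
from typing import Callable, Optional

Context = dict
StepFn = Callable[[Context], Optional[Context]]  # returning None stops the chain


def if_virtual_meeting(ctx: Context) -> Optional[Context]:
    # Condition step: only continue if the calendar event has a meeting link.
    return ctx if ctx.get("meeting_url") else None


def record_and_transcribe(ctx: Context) -> Context:
    # Placeholder: a real step would call a recording/transcription service.
    return {**ctx, "transcript": f"transcript of {ctx['title']}"}


def summarize(ctx: Context) -> Context:
    # Placeholder for an LLM summarization call.
    return {**ctx, "summary": f"summary: {ctx['transcript']}"}


def send_recap(ctx: Context) -> Context:
    # Placeholder for email/Slack actions: just records which channels would be used.
    return {**ctx, "sent": ["email", "slack_dm"]}


def run_workflow(trigger_event: Context, steps: list[StepFn]) -> Optional[Context]:
    ctx = dict(trigger_event)  # the trigger payload seeds the context
    for step in steps:
        result = step(ctx)
        if result is None:  # a condition failed, so the workflow ends here
            return None
        ctx = result
    return ctx


WORKFLOW = [if_virtual_meeting, record_and_transcribe, summarize, send_recap]

out = run_workflow({"title": "Weekly sync", "meeting_url": "https://example.com/meet"}, WORKFLOW)
print(out["summary"])  # summary: transcript of Weekly sync
print(run_workflow({"title": "Lunch"}, WORKFLOW))  # None
```

Making the loop iterative (the last step in the example, answering follow-up questions) would mean feeding the final context back into an agent loop rather than stopping.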

In the same vein, Perplexity runs every query through reasoning agents to arrive at final results, Replit’s coding agent plans, reasons and executes code, Harvey specializes in the legal domain to help with tasks such as drafting and case management, and so on. You get the idea. In fact, YC believes Vertical AI Agents can be 10x bigger than SaaS. More here:

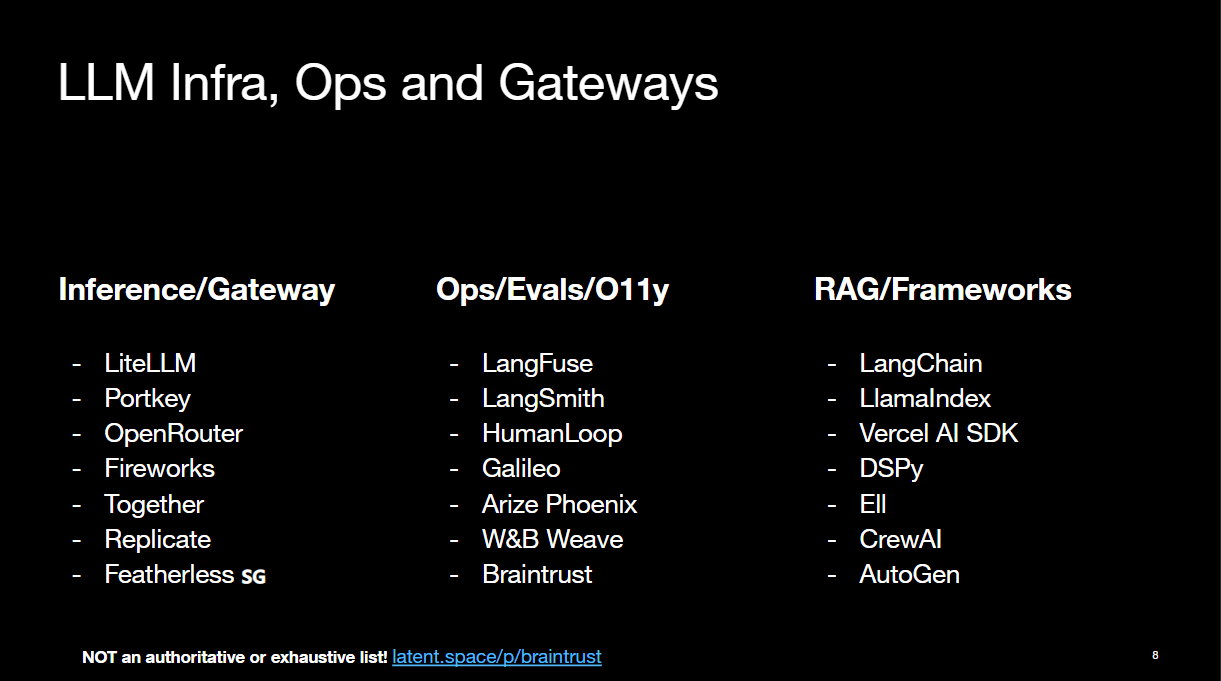

This brings us to Hosting/Serving, Observability and Frameworks, which can be bucketed under Infra, Ops and Gateways. The boundaries between these can blur, as proven by LangChain’s next act, LangGraph. The inference layer is interesting: a lot of time and money goes into making multiple LLMs available to AI agents, which adds unnecessary complexity. It is best to use specialized routers for this flexibility, as shown below.
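To illustrate what a router does, here is a toy Python sketch: classify the request, filter to the models that can handle that task and fit the prompt, then pick the cheapest. The model names, prices and keyword classifier are all hypothetical; production routers typically use learned classifiers and real cost/quality data rather than keyword rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str           # hypothetical model identifier
    cost_per_1k: float  # illustrative price, not a real quote
    max_context: int    # max prompt size, measured here in whitespace-split words
    good_at: frozenset  # task types this model is trusted with


MODELS = [
    Model("small-fast", 0.1, 8_000, frozenset({"chat"})),
    Model("code-specialist", 0.5, 32_000, frozenset({"chat", "code"})),
    Model("long-context", 1.0, 200_000, frozenset({"chat", "code", "research"})),
]


def classify(prompt: str) -> str:
    # Toy keyword classifier standing in for a learned routing model.
    text = prompt.lower()
    if "def " in text or "function" in text or "bug" in text:
        return "code"
    if "research" in text or "sources" in text:
        return "research"
    return "chat"


def route(prompt: str) -> Model:
    task = classify(prompt)
    size = len(prompt.split())  # crude size estimate instead of a real tokenizer
    candidates = [m for m in MODELS if task in m.good_at and size <= m.max_context]
    if not candidates:
        raise ValueError(f"no model can handle task {task!r} at size {size}")
    return min(candidates, key=lambda m: m.cost_per_1k)  # cheapest capable model


print(route("fix this bug in my function").name)  # code-specialist
```

The agent code only ever calls `route(...)`, so adding or swapping models changes the `MODELS` table rather than every call site, which is the flexibility the router buys you.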

On the evals side, your AI stack largely determines your eval provider. If you are using LangChain, LangSmith is a straightforward addition to the stack; if not, you may want to consider alternatives. It is tough to benchmark agents for full versatility at the moment, although benchmarks such as SWE-bench for coding and WebVoyager for browser automation are still good indicators. Expect that to change as agents become more prevalent in 2025.

This brings us to frameworks. We discussed these in detail in my earlier post on multi-agent collaboration frameworks back in May. While the concept has remained the same, what has changed is the number of such frameworks that now exist. If you are wondering which one is best, the classic answer is: it depends (see above for vertical AI agents). AutoGen, CrewAI and LangGraph have established themselves as the leaders in this slice of the pie.

The tricky part is how to think about these frameworks now that LLM providers are training LLMs as agents. Google’s Gemini 2.0 Flash, for example, has launched promising the “agentic experience” across codegen, deep research (i.e. internet search) and tool calling (i.e. integration with Google’s suite of products), in addition to multimodality (interleaved image, text, audio and video).

We already have OpenAI’s o1 (now out of preview) pushing the boundaries of how LLMs can “reason”, an extension of the CoT line of research. With OpenAI also launching its vision + voice mode yesterday, multimodality with reasoning and tool use will be the space to watch into 2025.

Next comes Memory and Tool Use. This was a big unsolved piece of the puzzle as we entered 2024. I wouldn’t say it is solved yet, but we are seeing glimpses of getting there. LangGraph’s state machine is one form of memory, since state needs to persist through the flow. LangMem was LangChain’s offering for the memory layer (now rolled into LangGraph); it enables long-term memory and, now, semantic search over that memory.
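Semantic search over long-term memory boils down to storing facts as vectors and retrieving the closest ones to a query. The Python sketch below is not LangMem’s or LangGraph’s API: `MemoryStore` is hypothetical, and the `embed` function uses bag-of-words counts as a stand-in for a real embedding model, which is an assumption made so the example runs with only the standard library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for an embedding model (assumption): bag-of-words term counts.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: keeps facts across sessions, searched by similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def remember(self, fact: str) -> None:
        self._items.append((fact, embed(fact)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]


memory = MemoryStore()
memory.remember("User prefers meeting recaps sent over Slack")
memory.remember("User is vegetarian and likes Thai food")
print(memory.search("what food does the user like?"))  # ['User is vegetarian and likes Thai food']
```

Swapping `embed` for a real embedding model and `_items` for a vector database gives the same shape at production scale; the retrieved facts are then injected into the model’s context for personalization.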

OpenAI was one of the first to release long-term memory to drive personalization, back in Feb 2024, but we haven’t yet driven home the “AI knows me” piece.

The next stage will be hyper-personalization, whereby these LLMs will remember everything about the user: contextual awareness and long-term memory driving their one-to-one interactions with individuals across time and mental space (i.e. consolidating threads)! We can see it in Google’s Gemini 2.0 release as well. My prediction? 2025 will see hyper-personalization!

This is where tool use shines. Once the LLM has context and is personalized to you, it can integrate well with our day-to-day interactions with all kinds of software, from browsers to Google Maps. With multimodality already capable enough, real-time audio streaming will feel almost like sci-fi as we interact with our AI assistants (think smart glasses or other wearables). Here is the launch video showing what it could look like:

This brings us back to productivity and automation. Computer Use, one form of browser automation, has been a big topic since Anthropic’s release earlier this year (note to self: do a write-up on all the latest releases, there have been so many!). With vision now released by both OpenAI and Google, it is only a matter of time before we are sharing our screens and chatting with our assistants to navigate workflows.

Graham said it best in his talk at the LS NeurIPS event: we need to re-evaluate and redesign our existing systems and processes. You’re a web application and don’t have an API? Think again!

Digital transformation has a new meaning going into 2025. While agents are already here, whatever your definition of them may be, what remains is their deeper integration into our day-to-day lives. The race to AGI is on, and traditional SaaS and legacy tech need to rethink where they stand with respect to this new agent stack.

Exciting times indeed! Until next time…