This is the second post in the series inspired by LlamaIndex’s recent tutorial series on Agents.

In my previous post on Planning, we looked at how to guide LLMs towards System 2 thinking. This is in contrast to their default System 1-like probabilistic token generation, which aims to please the user rather than to be factually accurate or practically useful.

Planning is crucial in making sure the task is broken down into simple steps, so the LLM can execute each one accurately, vs going off-track trying to execute a larger, more complex process all at once.

Memory

Executing this might be simple, but not easy. LLMs are inherently statistical models which, while great at simulating simple logical rules, are not very good at chaining them. Hence the need for Memory! Given our continuous quest to achieve AGI and have LLMs mimic human brains, let’s lean on Lilian Weng’s fantastic blog for categorizing the various types of memory:

The sensory memory component may be more apt for when we discuss multimodality, but in essence here is Lilian’s view on how to map it: “Sensory memory as learning embedding representations for raw inputs, including text, image or other modalities”

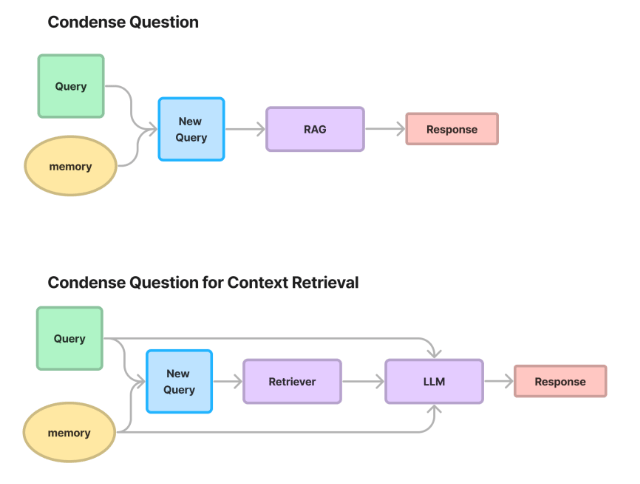

Short term memory: What is crucial in agentic workflows is conversation memory. This is mostly short term in nature, and fed to the LLM via in-context prompting for executing more complex learning and reasoning tasks.
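To make the idea concrete, here is a minimal sketch of conversation memory fed back through in-context prompting. The `ConversationBuffer` class, its `max_turns` parameter and the message format are my own illustrative assumptions, not any particular library's API.

```python
from collections import deque


class ConversationBuffer:
    """Hypothetical short-term memory: keeps only the most recent messages."""

    def __init__(self, max_turns: int = 4):
        # deque with maxlen silently drops the oldest message when full.
        self.messages = deque(maxlen=max_turns)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def build_prompt(self, system: str, user_query: str) -> list[dict]:
        # The retained history is placed in-context, between the system
        # instruction and the new user query.
        return [
            {"role": "system", "content": system},
            *self.messages,
            {"role": "user", "content": user_query},
        ]
```

The sliding window is the simplest possible policy; summarizing older turns instead of dropping them is a common alternative when the history matters.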

Long term memory: Given the limitations of short term memory, specifically in terms of LLMs’ context length (although less and less so now) and more generally in terms of capacity for processing information (Miller, 1956), external vector databases can be extremely useful for fast retrieval at query time.
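As a rough sketch of what retrieval from long term memory looks like, the toy store below ranks stored facts by cosine similarity to the query. Everything here is an assumption for illustration: `VectorMemory` is a hypothetical class, and the bag-of-words `embed` function is only a stand-in for a real embedding model and vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: simple bag-of-words counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorMemory:
    """Hypothetical long-term memory: store texts, retrieve the closest ones."""

    def __init__(self):
        self.items = []  # list of (text, embedding) pairs

    def store(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.store("the user prefers vegetarian food")
memory.store("the user lives in Berlin")
top = memory.retrieve("user lives where")
```

The retrieved text would then be placed into the prompt alongside the short term conversation history.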

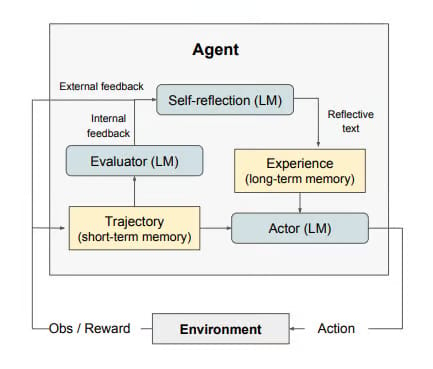

Both short and long term memory serve to help provide context for an otherwise stateless Q&A pipeline. We saw a good example of this in the Reflexion paper we discussed in the previous post wrt Trajectory (in-context) and Experience (persistent).

Tool Use

So, we have now planned our multi-turn task execution using techniques such as parallelizable sub-queries, task decomposition and planning with feedback. We have overcome the stateless nature by adding a personalized memory and made the process reflective. The only core component missing now is giving our agent the capability to interact with the external environment, i.e. Tool Use!

Being able to use tools has been one of the most distinguishing characteristics of humans - it is no wonder that giving LLMs that ability increases their capabilities manifold, as they can now interact with external APIs.

So, instead of passing a single-shot query through your RAG pipeline, what if the LLM were to infer the various parameters for the API interface and execute tasks?

To infer these parameters, LLMs could either be empowered with functions (ref OpenAI’s Function Calling), or could be provided the API spec directly (i.e. access to API documentation).

And yes, for those who remember, ChatGPT Plugins (RIP!) was a step in this direction early on as well, differing from function calling in that other developers would provide the tool APIs vs you having to define them yourself.
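Here is a minimal sketch of the function-calling pattern: the model is shown a JSON-schema style description of each tool, it replies with a tool name plus inferred arguments, and our code dispatches the call. The `get_weather` tool, the spec layout and the hard-coded `model_output` are all assumptions for illustration; each provider's exact request and response format differs, so check their docs.

```python
import json


def get_weather(city: str, unit: str = "celsius") -> str:
    # Hypothetical tool; a real one would call a weather API.
    return f"22 degrees {unit} in {city}"


# Registry mapping tool names to Python callables.
TOOLS = {"get_weather": get_weather}

# Description shown to the model so it can infer the parameters.
TOOL_SPECS = [
    {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    }
]


def dispatch(tool_call_json: str) -> str:
    # Parse the model's structured reply and execute the named tool.
    call = json.loads(tool_call_json)
    return TOOLS[call["name"]](**call["arguments"])


# Stand-in for what a model might return for "What's the weather in Paris?"
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch(model_output)
```

In a real loop, `result` would be sent back to the model so it can phrase the final answer.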

Various papers and techniques have informed the tool use capabilities for LLMs over the last couple of years, a couple of notable ones being:

MRKL

MRKL (Modular Reasoning, Knowledge and Language) is a flexible architecture with multiple neural models, complemented by discrete knowledge and reasoning modules. This architecture basically comprises a number of expert modules and the LLM works as a router to direct the query to the most suitable expert for the best response. These expert models could be either:

Neural, including the general-purpose huge language model as well as other smaller, specialized LMs.

Symbolic, for example a math calculator, a currency converter or an API call to a database
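A toy version of this routing idea is sketched below. It is only an assumption-laden illustration: in MRKL the router is itself a model, whereas here a regular expression stands in for it, and `language_model` is a stub rather than a real LLM call.

```python
import ast
import operator
import re

# Arithmetic operators the symbolic expert is allowed to evaluate.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expr: str) -> str:
    """Symbolic expert: evaluates +, -, *, / without using eval()."""

    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")

    return str(ev(ast.parse(expr.strip(), mode="eval").body))


def language_model(query: str) -> str:
    # Neural expert stub; a real system would call an LLM here.
    return f"[LLM answer to: {query}]"


def route(query: str) -> str:
    # Stand-in router: pure arithmetic goes to the calculator,
    # everything else falls through to the language model.
    if re.fullmatch(r"[\d\s.+\-*/()]+", query):
        return calculator(query)
    return language_model(query)
```

For example, `route("12 * (3 + 4)")` is handled by the calculator, while `route("Who wrote Hamlet?")` falls through to the language model stub.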

Toolformer

This paper hypothesized that LLMs can teach themselves to use external tools, trained to decide which APIs to call, when to call them, what arguments to pass, and how to best incorporate the results into future token prediction.

A pre-trained LLM was prompted with a few examples to annotate a dataset with candidate API calls. The annotations were then filtered in a self-supervised way, keeping only the calls whose results helped the model predict the following tokens, and the model was fine-tuned on the filtered dataset. While not meant for multi-step flows, it was a great starting point for extension into agentic workflows.
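The self-supervised filter can be sketched roughly as follows. This is my reading of the criterion, not the authors' code: an API call is kept only if including the call and its result lowers the loss on the following tokens by at least some threshold, compared with the better of making no call or making the call without its result. The function name, argument names and the default `threshold` are assumptions.

```python
def keep_api_call(
    loss_with_result: float,
    loss_without_call: float,
    loss_call_no_result: float,
    threshold: float = 1.0,
) -> bool:
    # Baseline: the best the model does without seeing the API result.
    baseline = min(loss_without_call, loss_call_no_result)
    # Keep the annotation only if the result reduces the loss enough.
    return baseline - loss_with_result >= threshold
```

The losses themselves would come from scoring the continuation tokens with the language model under each of the three prefixes.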

Tool Augmented Language Models (TALM)

TALM demonstrated that language models can be augmented with tools via a text-to-text API and an iterative self-play technique to bootstrap tool-augmented datasets and subsequent tool-augmented model performance, from few labeled examples.

Here, the LLM generates a tool input text based on the task input and invokes a tool API. The result is then appended to the text sequence. The iterative self-play involves using this result to iteratively expand the dataset, similar to what we saw in Toolformer earlier.
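The text-to-text flow can be sketched as a single growing string. Note the assumptions: `stub_model` is a hard-coded stand-in for the language model, `multiply_tool` is a hypothetical tool, and the `|tool-call`, `|result` and `|output` delimiters are illustrative markers for the segments of the sequence.

```python
def stub_model(sequence: str) -> str:
    """Hypothetical stand-in for the LM: returns the next text segment."""
    if "|result" not in sequence:
        # First pass: generate the tool input from the task text.
        return " |tool-call 17 * 3"
    # Second pass: read the appended tool result and produce the output.
    result = sequence.split("|result")[1].strip()
    return f" |output The answer is {result}."


def multiply_tool(tool_input: str) -> str:
    a, b = tool_input.split("*")
    return str(int(a) * int(b))


def run_talm_style(task: str) -> str:
    sequence = task
    sequence += stub_model(sequence)  # model writes the tool call
    tool_input = sequence.split("|tool-call")[1].strip()
    sequence += f" |result {multiply_tool(tool_input)}"  # tool result appended
    sequence += stub_model(sequence)  # model writes the final output
    return sequence


trace = run_talm_style("What is 17 times 3?")
```

In the self-play setting, completed sequences like `trace` whose final output is judged correct would be added back to the training set.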

Gorilla

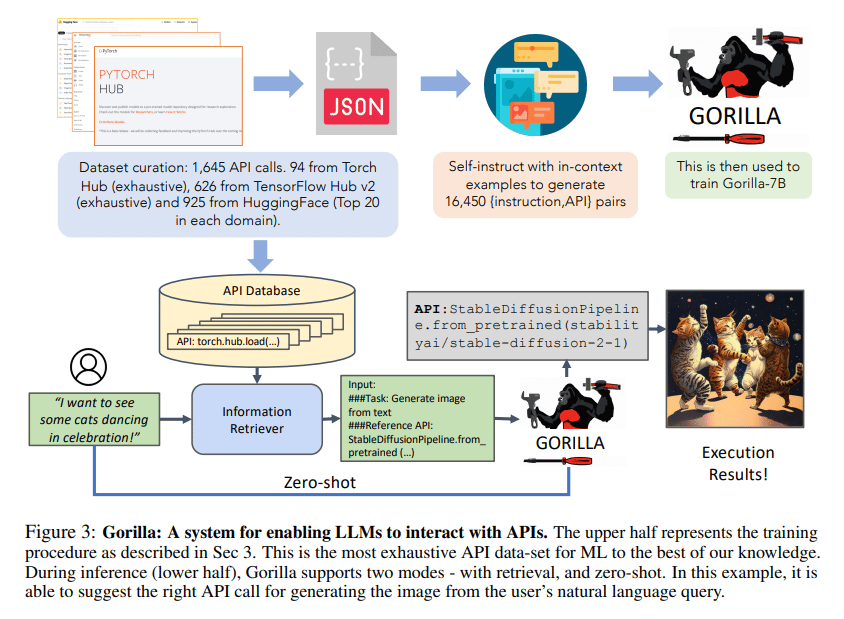

While having the LLM generate API parameters sounds great in theory, the problem of LLMs hallucinating input arguments and making the wrong API call is unfortunately all too common. Also, as we build production-grade applications with hundreds of functions and tools, we may run into limitations with the context window or the “needle-in-the-haystack” problem. Borrowing from RAG’s best practices for retrieval using heuristics, and using self-instruct fine-tuning, Gorilla has been shown to outperform GPT-4 for accurate API calls.

Efficient Tool Use with Chain-of-Abstraction Reasoning

Extending the Toolformer-based approach to multi-step reasoning requires more holistic planning - enter Chain-of-Abstraction (CoA)! Here, the LLM is fine-tuned to generate a multi-step reasoning chain with abstract placeholders, followed by calling domain-specific tools to reify these placeholders. This has been shown not only to help LLMs learn more general reasoning strategies, but also to let decoding and tool calling run in parallel, making inference about 1.4x faster.

It seems like a long time ago that OpenAI released Function Calling. As other model providers have caught up, we are seeing them incorporate the ability to use external tools explicitly:

Anthropic announced the tool use capability in April and followed up with additional tools yesterday:
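To illustrate the placeholder idea, here is a small sketch that takes an abstract chain and fills it in with a calculator. The bracket syntax, the `y1`/`y2` placeholder names and the `reify` helper are assumptions made for this example (it handles whole numbers only and runs sequentially), not the paper's implementation.

```python
import re

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}

# Each abstract step looks like "[<a> <op> <b> = <placeholder>]".
STEP = re.compile(r"\[(\w+) ([+\-*/]) (\w+) = (\w+)\]")


def reify(chain: str) -> tuple[str, dict[str, float]]:
    values: dict[str, float] = {}

    def resolve(token: str) -> float:
        # A token is either an earlier placeholder or a literal number.
        return values[token] if token in values else float(token)

    # Tool pass: compute each placeholder in order of appearance.
    for a, op, b, name in STEP.findall(chain):
        values[name] = OPS[op](resolve(a), resolve(b))

    # Substitute the computed values back into the reasoning chain.
    filled = chain
    for name, value in values.items():
        filled = re.sub(rf"\b{name}\b", f"{value:g}", filled)
    return filled, values


abstract_chain = "They ate [20 + 35 = y1] slices, leaving [90 - y1 = y2]."
filled_chain, placeholder_values = reify(abstract_chain)
```

Because the model only has to emit the abstract chain, it can move on to the next example while the tools fill in the placeholders for this one.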

Source: Anthropic

Cohere’s Command R and R+: Trained to interact with a variety of external tools, with details on how they excel at multi-step tool use

…and so on!

Such is the proliferation of function calling/tool use now that we have a leaderboard for function calling. You can check it out here; with so many models announced recently, this is something to track over time:

To round it off, the following post from Jerry Liu is a good mental model to think about the various levels of Agents when designing your workflows. This is especially important so as to introduce complexity only as and when needed for each use case. For example, if simple tool use suffices for a use case, there is no need to build out multi-agent collaboration (more on that soon).

The importance of agentic design and application cannot be overstated as we look to build out LLM-powered workflows for real-world use cases. With cheaper, better and faster multimodal models equipped with larger and larger context windows being released what seems like every day, it is an exciting time to be building. 2024 seems like the year we see AI in production, powered by agents!

Until next time, happy reading!