Inspired by LlamaIndex’s recent tutorial series on Agents, and having recently discussed flow engineering, let’s look a little more deeply at Agents and what they mean in the world of LLMs.

There was a lot of excitement in early 2023 around agents and how they would lead to mass automation (think AutoGPT, BabyAGI and the like). The rest of 2023 saw a slight reset in expectations, given LLMs’ capabilities and limitations as “reasoning” engines. Hence, instead of automating entire functions, the agentic workflow that has emerged is a reasoning loop followed by tool use to automate individual tasks.

But isn’t RAG good enough (see our Advanced RAG series)?

While RAG pipelines are great for applications that need retrieval and search, they are mostly single-shot: they have no memory or reflection, and they cannot plan or use tools to complete complex tasks. Hence the need for multi-turn flows and agents.

Any agentic workflow needs three core components: Planning, Memory and Tool Use! Today we shall look at the first of these:

Planning:

In the world of agents, planning is critical to keeping LLMs from getting distracted or confused. This is also where we guide the LLM to think step by step, i.e. System 2 thinking vs System 1. But LLMs are designed to be “generative”, so how do we make them reason? Let’s start by breaking the query down into sub-queries:

Parallelizable sub-queries:

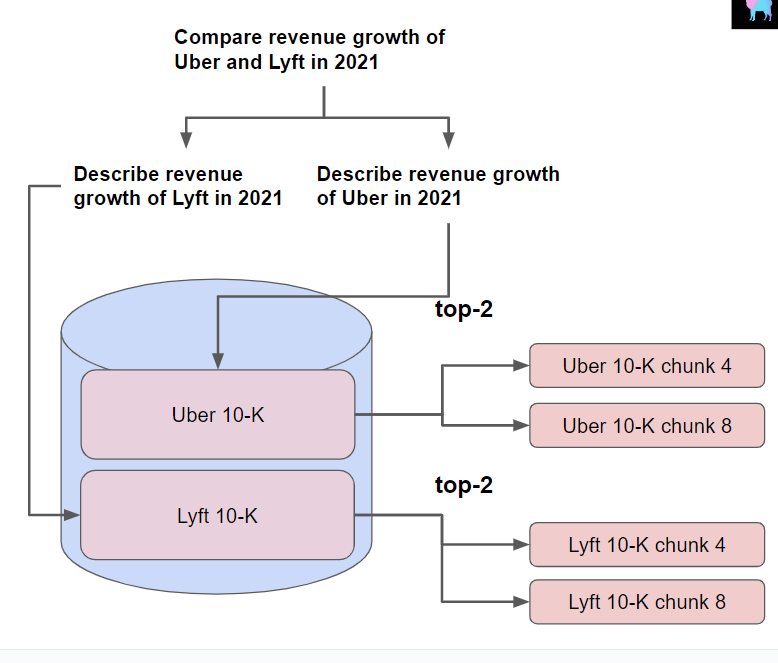

A complex query can be broken down into sub-queries, each of which can be executed on a different RAG pipeline. This is crucial for complex, multi-part questions that may require parallel workflows whose outputs must then be synthesized into a final result.
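The decompose, run-in-parallel, synthesize pattern can be sketched with `asyncio`. This is a minimal illustration, not a real framework: `decompose`, `rag_pipeline` and `synthesize` are hypothetical stand-ins for the LLM and retrieval calls a real agent would make.

```python
import asyncio

def decompose(query: str) -> list[str]:
    """Hypothetical decomposer: splits on ' and '. A real agent would ask an LLM."""
    parts = query.rstrip("?").split(" and ")
    return [p.strip() + "?" for p in parts if p.strip()]

async def rag_pipeline(sub_query: str) -> str:
    """Hypothetical RAG pipeline: simulates retrieval latency, returns a stub answer."""
    await asyncio.sleep(0.01)  # stands in for retrieval + generation
    return f"[answer to: {sub_query}]"

def synthesize(query: str, answers: list[str]) -> str:
    """Hypothetical synthesis step: a real system would ask an LLM to merge answers."""
    return f"{query}\n" + "\n".join(answers)

async def answer(query: str) -> str:
    sub_queries = decompose(query)
    # Independent sub-queries run concurrently; gather() preserves input order.
    answers = await asyncio.gather(*(rag_pipeline(q) for q in sub_queries))
    return synthesize(query, list(answers))

if __name__ == "__main__":
    print(asyncio.run(answer(
        "What was Uber's revenue in 2022 and what was Lyft's revenue in 2022?"
    )))
```

Because the sub-queries do not depend on each other, total latency is roughly that of the slowest pipeline rather than the sum of all of them.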

Task Decomposition:

From query decomposition to task decomposition: task decomposition implies multi-step flows, akin to those we discussed in our last post on Flow Engineering. Notable frameworks include:

1. Chain of Thought:

Prompt engineering at its finest: asking the LLM to “think step by step” makes it decompose what may seem a complex task into multiple smaller, easier tasks. This is where one can truly see the power of LLMs to “think” vs generate. CoT prompting has shown significant improvements across arithmetic, commonsense and symbolic reasoning tasks.
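A zero-shot CoT prompt is little more than the trigger phrase plus a convention for locating the final answer. The sketch below assumes a hypothetical “Answer:” line convention; the model completion is hand-written for illustration, not real model output.

```python
import re
from typing import Optional

# The zero-shot CoT trigger phrase; few-shot CoT would instead prepend
# worked examples that spell out their reasoning.
COT_TRIGGER = "Let's think step by step."

def build_cot_prompt(question: str) -> str:
    """Wrap a question so the model reasons before committing to an answer."""
    return (
        "Answer the question below. Reason through it first, then give the "
        "result on a final line of the form 'Answer: <result>'.\n\n"
        f"Q: {question}\nA: {COT_TRIGGER}"
    )

def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent."""
    matches = re.findall(r"^Answer:[ \t]*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None

if __name__ == "__main__":
    print(build_cot_prompt(
        "Roger has 5 tennis balls. He buys 2 cans of 3 balls each. "
        "How many balls does he have now?"
    ))
    # Hypothetical completion, written by hand: intermediate steps, then the answer.
    completion = "He starts with 5 balls.\n2 cans of 3 balls is 6 balls.\n5 + 6 = 11.\nAnswer: 11"
    print(extract_answer(completion))
```

The intermediate lines are the “smaller, easier tasks”; only the last line is parsed as the result.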

2. Tree-Based Models:

Exploring multiple alternatives at each step (branches of outcomes) during search is widely used in planning and Reinforcement Learning algorithms, because of the exploration-exploitation tradeoff. Exploration means trying out new options that may lead to better outcomes in the future; exploitation means choosing the best option based on current knowledge of the system, which could be incomplete or misleading. Tree-based models (ToT, RAP, LATS) have hence gained popularity for improving reasoning.
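One standard way to balance the two is the UCB1 rule, which MCTS-style searches (used by RAP and LATS below) apply at each node. A minimal sketch, assuming each branch is summarized by its total reward and visit count; the branch names and numbers are made up.

```python
import math
from typing import Dict, Tuple

def ucb1(total_value: float, visits: int, parent_visits: int,
         c: float = math.sqrt(2)) -> float:
    """Mean reward (exploitation) plus an uncertainty bonus (exploration)."""
    if visits == 0:
        return math.inf  # untried branches are explored first
    exploitation = total_value / visits
    exploration = c * math.sqrt(math.log(parent_visits) / visits)
    return exploitation + exploration

def select_branch(children: Dict[str, Tuple[float, int]],
                  c: float = math.sqrt(2)) -> str:
    """children maps a branch name to (total value, visit count)."""
    parent_visits = sum(visits for _, visits in children.values())
    return max(children, key=lambda name: ucb1(*children[name], parent_visits, c))

if __name__ == "__main__":
    children = {"A": (9.0, 10), "B": (1.5, 2)}  # A: mean 0.9, B: mean 0.75
    print(select_branch(children))         # exploration bonus favours rarely-tried B
    print(select_branch(children, c=0.0))  # pure exploitation picks A
```

Setting `c` to zero turns the rule into greedy exploitation; larger values push the search toward less-visited branches.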

Tree of Thoughts:

Take Chain of Thought and extend it to get Tree of Thoughts! The LLM is prompted to think step by step and then, at each step, asked to generate multiple candidate thoughts, hence the tree structure and the name. Here is how the authors explain why ToT outperforms CoT: “ToT allows LMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action, as well as looking ahead or backtracking when necessary to make global choices.”

ToT is also flexible: it accommodates different ways of generating and evaluating thoughts, and the search algorithm (e.g. breadth-first or depth-first search) can be adapted to the nature of the problem.
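A breadth-first variant of ToT can be sketched as a beam search over partial solutions. This is a toy under stated assumptions: `propose` and `make_evaluator` are hypothetical stand-ins for the LLM’s thought generation and self-evaluation, and the “task” (pick digits whose sum hits a target) is invented for illustration.

```python
from typing import Callable, List

State = List[int]  # a partial solution: the sequence of "thoughts" so far

def propose(state: State) -> List[State]:
    """Hypothetical thought generator. In ToT an LLM proposes several candidate
    next steps; here each candidate simply appends a digit 1-9."""
    return [state + [d] for d in range(1, 10)]

def make_evaluator(target: int) -> Callable[[State], float]:
    """Hypothetical evaluator standing in for the LLM's self-evaluation:
    partial solutions whose sum is closer to the target score higher."""
    return lambda state: -abs(target - sum(state))

def tot_bfs(root: State, propose: Callable[[State], List[State]],
            evaluate: Callable[[State], float],
            depth: int, beam_width: int) -> State:
    """Breadth-first Tree of Thoughts: expand every state in the frontier,
    score all children, and keep only the `beam_width` best at each level."""
    frontier = [root]
    for _ in range(depth):
        children = [child for state in frontier for child in propose(state)]
        # sorted() is stable, so ties keep their generation order
        frontier = sorted(children, key=evaluate, reverse=True)[:beam_width]
    return max(frontier, key=evaluate)

if __name__ == "__main__":
    print(tot_bfs([], propose, make_evaluator(20), depth=3, beam_width=2))
```

Running it prints `[9, 9, 2]`. Keeping two branches per level lets the search recover from a greedy first choice; a depth-first variant with backtracking would prune branches whose evaluation falls below a threshold instead.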

Reasoning via Planning (RAP):

LLMs have been found to struggle with problems that may be simple for humans, such as generating action plans to achieve given goals. RAP aims to solve this, hypothesizing that the cause is LLMs’ lack of an internal world model to predict the world state and, by extension, the long-term outcomes of actions. The Reasoning via Planning framework repurposes the LLM as both a world model and a reasoning agent, and incorporates Monte Carlo Tree Search (MCTS): “During reasoning, the LLM (as agent) incrementally builds a reasoning tree under the guidance of the LLM (as world model) and rewards, and efficiently obtains a high-reward reasoning path with a proper balance between exploration vs. exploitation.”
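The shape of this loop can be sketched as a small MCTS in which two hypothetical functions, `world_model` and `reward`, stand in for the LLM’s two roles. The toy domain (reach a target number using the actions `+1` and `*2`) is an assumption for illustration, and new nodes are scored directly by `reward` rather than by random rollouts.

```python
import math
from typing import Dict, List, Optional, Tuple

ACTIONS = ["+1", "*2"]

def world_model(state: int, action: str) -> int:
    """Hypothetical stand-in for the LLM-as-world-model: predicts the next state."""
    return state + 1 if action == "+1" else state * 2

def reward(state: int, target: int) -> float:
    """Hypothetical stand-in for RAP's LLM-derived reward: closer is better."""
    return 1.0 / (1.0 + abs(target - state))

class Node:
    def __init__(self, state: int, depth: int,
                 parent: Optional["Node"] = None, action: Optional[str] = None):
        self.state, self.depth, self.parent, self.action = state, depth, parent, action
        self.children: Dict[str, "Node"] = {}
        self.visits = 0
        self.value_sum = 0.0

    def uct(self, c: float) -> float:
        if self.visits == 0:
            return math.inf
        exploit = self.value_sum / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore

def mcts(start: int, target: int, max_depth: int = 4,
         iterations: int = 200, c: float = 1.0) -> Tuple[List[str], int]:
    root = Node(start, 0)

    def is_terminal(n: Node) -> bool:
        return n.depth >= max_depth or n.state == target

    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes by UCT score.
        while not is_terminal(node) and len(node.children) == len(ACTIONS):
            node = max(node.children.values(), key=lambda ch: ch.uct(c))
        # 2. Expansion: the agent picks an untried action, the world model predicts the result.
        if not is_terminal(node):
            action = next(a for a in ACTIONS if a not in node.children)
            child = Node(world_model(node.state, action), node.depth + 1, node, action)
            node.children[action] = child
            node = child
        # 3. Evaluation of the reached state.
        value = reward(node.state, target)
        # 4. Backpropagation up to the root.
        while node is not None:
            node.visits += 1
            node.value_sum += value
            node = node.parent

    # Read out the plan by following the most-visited child at each level.
    plan, node = [], root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
        plan.append(node.action)
    return plan, node.state

if __name__ == "__main__":
    print(mcts(start=1, target=10))
```

In RAP proper, both the state prediction and the reward come from prompting the LLM; the plain functions here only make the search skeleton runnable.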

Language Agent Tree Search (LATS):

Going a step further than RAP, LATS leverages environmental feedback and self-reflection to adapt its search and improve performance. We shall return to it after covering Planning with Feedback below.

Planning with Feedback:

Agents are meant to be iterative: they learn from their mistakes and correct their action decisions through multi-turn, self-reflective flows that improve reasoning. This is especially true when we take these agents to production.

1. ReAct:

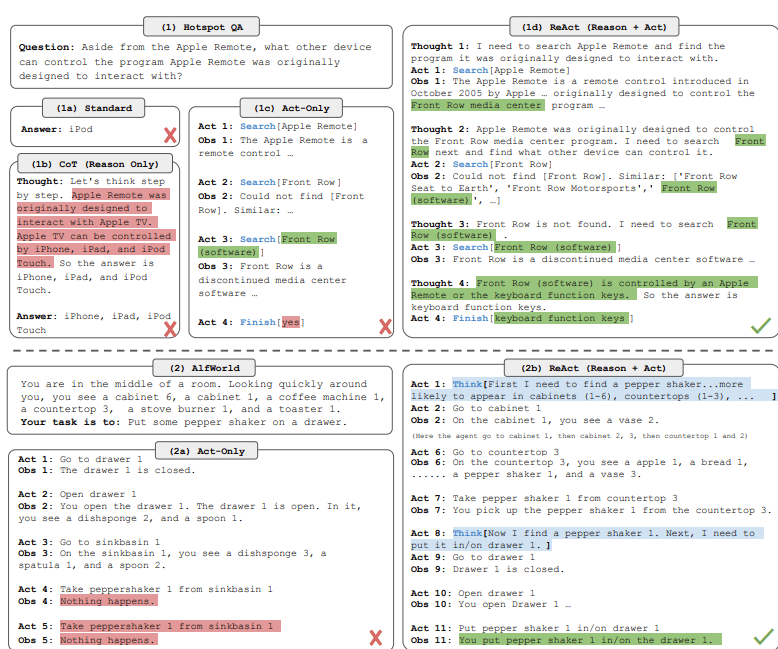

The ReAct framework combines reasoning (generating reasoning traces in natural language, think CoT) with acting (calling external APIs).

Adding a “Thought” step to the framework, rather than only “Act”, leads to significant improvements, as the LLM is prompted to work in a fixed format: Thought, Action, Observation, repeat.

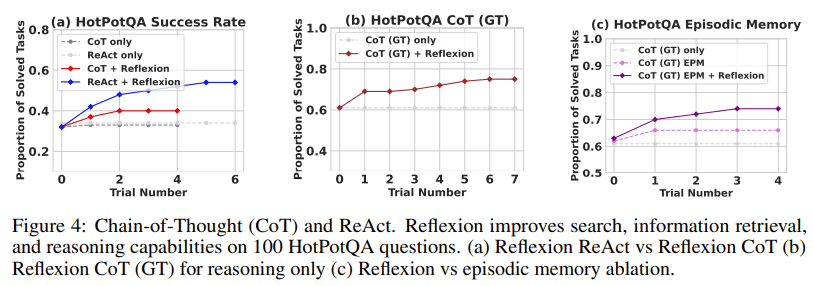
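The Thought, Action, Observation cycle can be sketched as a plain loop. Everything here is an assumption for illustration: the LLM is replaced by a scripted list of outputs, `Lookup` and its tiny knowledge base are hypothetical, and the `Tool[input]` / `Finish[answer]` action syntax is only loosely modeled on the format used in the ReAct paper.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)

def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> Tuple[Optional[str], str]:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # expected: "Thought: ...\nAction: Tool[input]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action.\n"
            continue
        name, arg = match.group(1), match.group(2)
        if name == "Finish":
            return arg, transcript  # the model has committed to an answer
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed back on the next turn
    return None, transcript

def scripted_llm(outputs: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a real model: returns canned steps in order."""
    steps = iter(outputs)
    return lambda transcript: next(steps)

KB = {  # hypothetical knowledge base behind the Lookup tool
    "Eiffel Tower": "The Eiffel Tower is in France.",
    "France": "The capital of France is Paris.",
}

if __name__ == "__main__":
    llm = scripted_llm([
        "Thought: I need to find where the Eiffel Tower is.\nAction: Lookup[Eiffel Tower]",
        "Thought: It is in France, so I need France's capital.\nAction: Lookup[France]",
        "Thought: The capital of France is Paris.\nAction: Finish[Paris]",
    ])
    answer, transcript = react("What is the capital of the country where the Eiffel Tower is?",
                               llm, {"Lookup": lambda key: KB.get(key, "no result")})
    print(transcript)
    print(answer)
```

With a real model, each call would see the full transcript so far, which is what lets the next Thought react to the latest Observation.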

2. Reflexion:

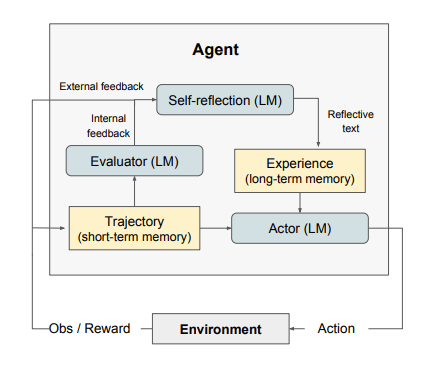

Reflexion is a new approach to reinforcing LLMs through linguistic feedback. It aims to combine the best of self-reflection (on external and internal feedback) and dynamic memory (trajectory and experience) to improve reasoning.

By verbally reflecting on task feedback signals (in the first person) and storing this reflective text in an episodic memory buffer, the agent improves its decision making on the next attempt, much as we learn from our failures.
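The trial, feedback, reflection loop can be sketched as follows. The actor, evaluator and self-reflection roles are filled by hypothetical toy functions (`toy_actor`, `toy_evaluate`, `toy_reflect`) that a real system would implement with LLM calls and, for example, unit tests; the memory bound `memory_size` is likewise an illustrative assumption.

```python
from typing import Callable, List, Optional, Tuple

def reflexion(task: str,
              actor: Callable[[str, List[str]], list],
              evaluate: Callable[[list], Tuple[bool, str]],
              reflect: Callable[[str, list, str], str],
              max_trials: int = 3,
              memory_size: int = 3) -> Tuple[Optional[list], int, List[str]]:
    memory: List[str] = []  # episodic buffer of first-person reflections
    for trial in range(1, max_trials + 1):
        attempt = actor(task, memory)          # act, conditioned on past reflections
        success, feedback = evaluate(attempt)  # feedback signal for this attempt
        if success:
            return attempt, trial, memory
        memory.append(reflect(task, attempt, feedback))  # verbal self-reflection
        memory = memory[-memory_size:]         # keep only the most recent entries
    return None, max_trials, memory

def toy_actor(task: str, memory: List[str]) -> list:
    """Hypothetical actor: ignores the task text, but changes behaviour
    if a past reflection mentions 'descending'."""
    descending = any("descending" in note for note in memory)
    return sorted([3, 1, 2], reverse=descending)

def toy_evaluate(attempt: list) -> Tuple[bool, str]:
    """Hypothetical evaluator, playing the role of a unit test."""
    expected = [3, 2, 1]
    if attempt == expected:
        return True, "all tests passed"
    return False, f"expected {expected}, got {attempt}"

def toy_reflect(task: str, attempt: list, feedback: str) -> str:
    """Hypothetical self-reflection; a real one would be generated by an LLM."""
    return (f"I returned {attempt}, but the test said: {feedback}. "
            "Next time I should sort in descending order.")

if __name__ == "__main__":
    result, trials, memory = reflexion("Sort [3, 1, 2] in descending order.",
                                       toy_actor, toy_evaluate, toy_reflect)
    print(result, trials)
    print(memory)
```

The first trial fails, the reflection is stored, and the second trial succeeds because the actor conditions on that stored text rather than on any weight update.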

3. Language Agent Tree Search (LATS):

A tree-based approach, LATS claims to improve further on Reflexion by considering alternative choices at each step rather than refining a single plan or trajectory. Like RAP, LATS uses Monte Carlo Tree Search (MCTS) to find the most promising trajectory and systematically balance exploration with exploitation; but unlike RAP, which relies on an internal world model, LATS relies on interaction with the environment to facilitate simulation.

4. LLMCompiler:

While LLMs’ reasoning capabilities enable them to execute multiple function calls, this generally involves a sequential ReAct loop for each call, resulting in high latency, high cost and performance issues. LLMCompiler aims to solve this using three components:

LLM Planner: Formulates execution plans by generating a DAG of tasks with their interdependencies.

Task Fetching Unit: Dispatches function-calling tasks to the Executor in parallel, respecting their interdependencies.

Executor: Executes these function calls.
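The planner, fetching unit and executor split can be sketched with `asyncio`. This is a simplified illustration, not LLMCompiler’s implementation: the plan is hand-written where the LLM Planner would generate it, the `$<idx>` placeholder convention and the `lookup`/`add` tools are assumptions, the facts are made up, and tasks are dispatched in dependency “waves” rather than the moment each dependency resolves.

```python
import asyncio
import re
from dataclasses import dataclass, field
from typing import Awaitable, Callable, Dict, List, Tuple

Tool = Callable[..., Awaitable[str]]

@dataclass
class Task:
    idx: int        # task id; later tasks refer to its result as "$idx"
    tool: str       # name of the function to call
    args: List[str] # may contain "$<idx>" placeholders
    deps: List[int] = field(default_factory=list)

async def run_plan(tasks: List[Task],
                   tools: Dict[str, Tool]) -> Tuple[Dict[int, str], List[List[int]]]:
    """Toy task fetching unit + executor: dispatch every task whose dependencies
    are satisfied, run that batch concurrently, and repeat until done."""
    results: Dict[int, str] = {}
    waves: List[List[int]] = []  # which task ids ran together
    pending = {t.idx: t for t in tasks}

    def resolve(arg: str) -> str:
        # substitute "$<idx>" with the output of task <idx>
        return re.sub(r"\$(\d+)", lambda m: results[int(m.group(1))], arg)

    while pending:
        ready = [t for t in pending.values() if all(d in results for d in t.deps)]
        if not ready:
            raise ValueError("plan has cyclic or missing dependencies")
        outputs = await asyncio.gather(
            *(tools[t.tool](*[resolve(a) for a in t.args]) for t in ready)
        )
        for t, out in zip(ready, outputs):
            results[t.idx] = out
            del pending[t.idx]
        waves.append([t.idx for t in ready])
    return results, waves

FACTS = {"apples in basket A": "12", "apples in basket B": "30"}  # made-up data

async def lookup(key: str) -> str:
    await asyncio.sleep(0.01)  # simulate an I/O-bound API call
    return FACTS[key]

async def add(a: str, b: str) -> str:
    return str(int(a) + int(b))

# A plan the LLM Planner might emit: tasks 1 and 2 are independent, 3 needs both.
PLAN = [
    Task(1, "lookup", ["apples in basket A"]),
    Task(2, "lookup", ["apples in basket B"]),
    Task(3, "add", ["$1", "$2"], deps=[1, 2]),
]

if __name__ == "__main__":
    results, waves = asyncio.run(run_plan(PLAN, {"lookup": lookup, "add": add}))
    print(results[3], waves)
```

Running it prints `42 [[1, 2], [3]]`: both lookups go out in the first wave, which is where the latency savings over a sequential ReAct loop come from.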

How large is the improvement? “We observe consistent latency speedup of up to 3.7×, cost savings of up to 6.7×, and accuracy improvement of up to ∼9% compared to ReAct.”

Planning is a crucial step when we think about agentic workflows, and hence it is important to focus not only on accuracy but also on how these multi-step, multi-turn, self-reflective flows can be made more time- and cost-efficient.

Next we shall look at the other two components of an agentic workflow: Memory and Tool Use.

Until then…Happy Reading!