As you may remember, we discussed LangGraph in detail in an earlier post. To recap, LangGraph is LangChain’s framework for building agentic applications. It is low-level and graph-based, which makes it highly controllable: each node is a plain Python function, and so is the routing logic between nodes (no hidden abstractions or prompts). Its persistence layer enables human-in-the-loop workflows, checkpoints, and editing past states by going back in time, and it supports streaming, even for intermediate steps.
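To make "nodes and routing are just Python functions" concrete, here is a toy, dependency-free runner. It is only an illustration of the idea, not LangGraph's API; in LangGraph itself you would build this with `StateGraph` (`add_node`, `add_edge`, `add_conditional_edges`, then `compile()`). The state keys and node names below are made up for the example.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]

# Nodes: plain functions that read the state and return a partial update.
def write_draft(state: State) -> State:
    attempt = state["attempts"] + 1
    return {"draft": f"answer to {state['question']!r} (v{attempt})", "attempts": attempt}

def review(state: State) -> State:
    return {}  # a real node might call an LLM or a tool here

# Routing is a plain function too: given the state, name the next node.
def route_after_review(state: State) -> str:
    return "END" if state["attempts"] >= 2 else "write_draft"

NODES: Dict[str, Callable[[State], State]] = {"write_draft": write_draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "write_draft": lambda state: "review",  # fixed edge
    "review": route_after_review,           # conditional edge
}

def run(state: State, entry: str = "write_draft") -> State:
    node = entry
    while node != "END":
        state = {**state, **NODES[node](state)}  # merge the node's update into the state
        node = EDGES[node](state)
    return state

final = run({"question": "What is LangGraph?", "draft": "", "attempts": 0})
print(final["draft"])  # answer to 'What is LangGraph?' (v2)
```

Because every step is ordinary code, you can unit-test a node or a router in isolation, which is where the controllability comes from.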

So, why are we talking about it again? Because things move fast and LangChain has been shipping lately. The biggest criticism of graph-based flows in general is the lack of visualization, and hence of observability. LangGraph Cloud and LangGraph Studio seem like a natural extension to address that, especially given the LangSmith integration. If it all sounds too confusing, I’ve got you!

LangGraph Cloud: Deploying Agentic Applications

Let’s begin with…what is LangGraph Cloud?

“LangGraph Cloud is the easiest way to deploy LangGraph agents” - Harrison Chase

Since it is the next step from agentic workflows to agentic applications, it is best to lay out its key features:

Assistants and threads: Assistants are configurations of a graph; threads are individual conversations with those assistants. Think of this as similar to OpenAI Assistants.

Background runs: Great for long-running, asynchronous agentic jobs (see ambient agents below).

Cron jobs: Some agents need to be run on a schedule, not just triggered by users.

Double texting: Handles the case where a user sends another message before the graph has finished its current run. Four strategies are built in:

reject: does not allow double texting; the new input is rejected (the simplest option).

enqueue: waits for the current run to complete, then processes the new input.

interrupt: stops the current run, keeps the work done up to that point, and continues with the new input.

rollback: rolls back (discards) all work done by the current run, then processes the new input.

LangGraph Studio: Debug, share, and test your LangGraph agents (more on that in a moment).
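The four double-texting strategies are easiest to compare side by side. Below is a toy, in-memory simulation of a thread that receives a second message while a run is in flight. It is not LangGraph Cloud's implementation: `Run`, `Thread`, and `simulate` are hypothetical names, and a "run" here is just three fake steps. (In the LangGraph SDK the strategy is, as far as I know, chosen per run through a `multitask_strategy` argument; check the current docs.)

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional

STRATEGIES = {"reject", "enqueue", "interrupt", "rollback"}

@dataclass
class Run:
    user_input: str
    total_steps: int = 3
    done: List[str] = field(default_factory=list)  # work produced so far

    def finished(self) -> bool:
        return len(self.done) >= self.total_steps

@dataclass
class Thread:
    strategy: str
    history: List[str] = field(default_factory=list)  # work kept on the thread
    active: Optional[Run] = None
    queue: Deque[str] = field(default_factory=deque)

    def __post_init__(self) -> None:
        if self.strategy not in STRATEGIES:
            raise ValueError(f"unknown strategy: {self.strategy}")

    def send(self, user_input: str) -> str:
        """Handle a new user message, possibly while a run is in flight."""
        if self.active is None:
            self.active = Run(user_input)
            return "started"
        if self.strategy == "reject":
            return "rejected"  # the in-flight run continues untouched
        if self.strategy == "enqueue":
            self.queue.append(user_input)  # processed once the current run finishes
            return "queued"
        if self.strategy == "interrupt":
            self.history.extend(self.active.done)  # keep the partial work
        # "rollback" falls through: the partial work is simply dropped
        self.active = Run(user_input)
        return "restarted"

    def step(self) -> None:
        """Advance the active run by one step and commit it when it finishes."""
        if self.active is None:
            return
        run = self.active
        run.done.append(f"{run.user_input}:step{len(run.done) + 1}")
        if run.finished():
            self.history.extend(run.done)
            self.active = Run(self.queue.popleft()) if self.queue else None

def simulate(strategy: str) -> List[str]:
    thread = Thread(strategy)
    thread.send("hi")
    thread.step()             # the first run gets one step in...
    thread.send("follow-up")  # ...before the user double texts
    while thread.active is not None:
        thread.step()
    return thread.history

for name in ["reject", "enqueue", "interrupt", "rollback"]:
    print(name, simulate(name))
```

The only difference between interrupt and rollback in this sketch is whether the first run's partial work survives on the thread.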

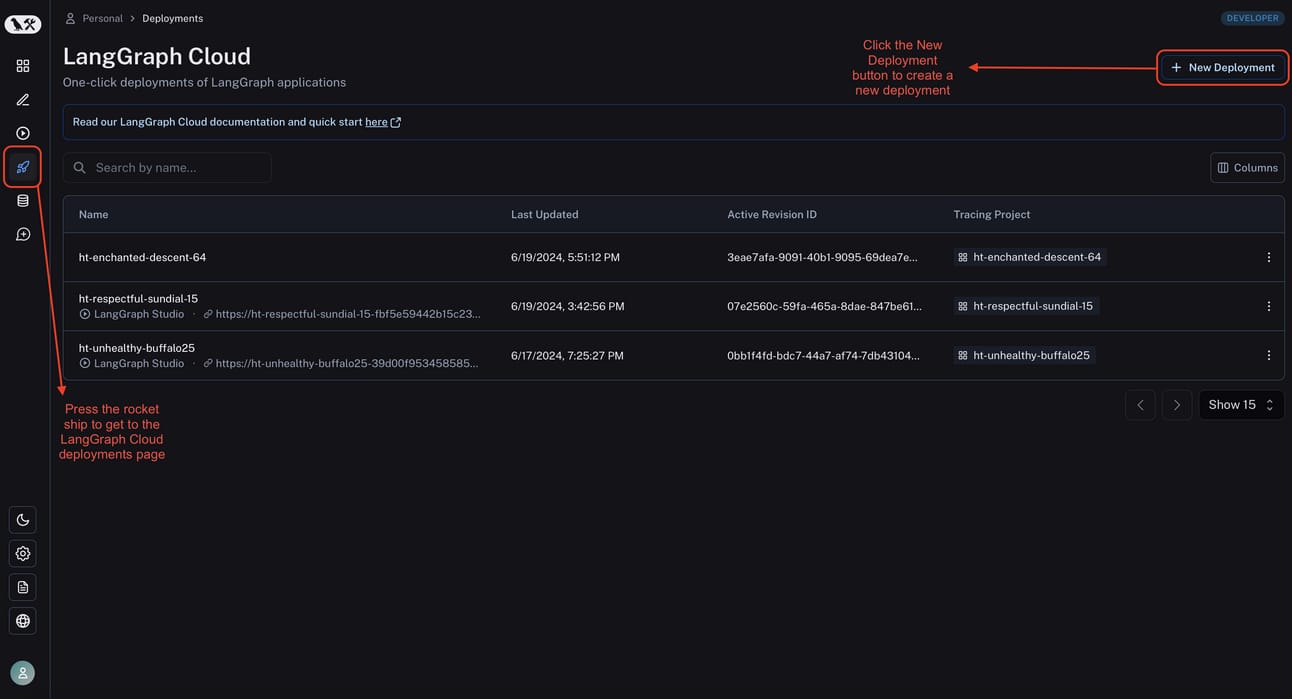

So, how does one deploy these agents to LangGraph Cloud? Through the deployment feature in LangSmith (LangChain’s observability tool). The repo is straightforward, with only a few files: agent.py holds the graph definition, i.e. the agent we created with LangGraph earlier, while a JSON file describes how to deploy it to the Cloud, listing its dependencies and its graphs (yes, there can be several at once).
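For a feel of what that JSON file contains, the sketch below builds it from Python. As far as I know, the file is named `langgraph.json` and uses `dependencies`, `graphs`, and an optional `env` key, with each graph given as `"path/to/file.py:variable"`; treat this as an assumption and check the LangGraph CLI docs, since the schema may have evolved. The second graph (`researcher.py`) is invented purely to show that several graphs can be listed.

```python
import json

# Hypothetical project layout: agent.py exposes its compiled graph as `graph`,
# and researcher.py (an invented second agent) does the same.
config = {
    "dependencies": ["."],  # install the project in this directory
    "graphs": {
        # graph ID -> "path/to/file.py:variable_name"
        "agent": "./agent.py:graph",
        "researcher": "./researcher.py:graph",  # several graphs can ship together
    },
    "env": ".env",  # file holding environment variables such as API keys
}

def render_config() -> str:
    """Serialize the deployment config as it would be saved to langgraph.json."""
    return json.dumps(config, indent=2)

print(render_config())
```

Saving that output as `langgraph.json` next to `agent.py` gives the small repo described above.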

LangGraph Studio: Visualizing and Debugging AI Workflows

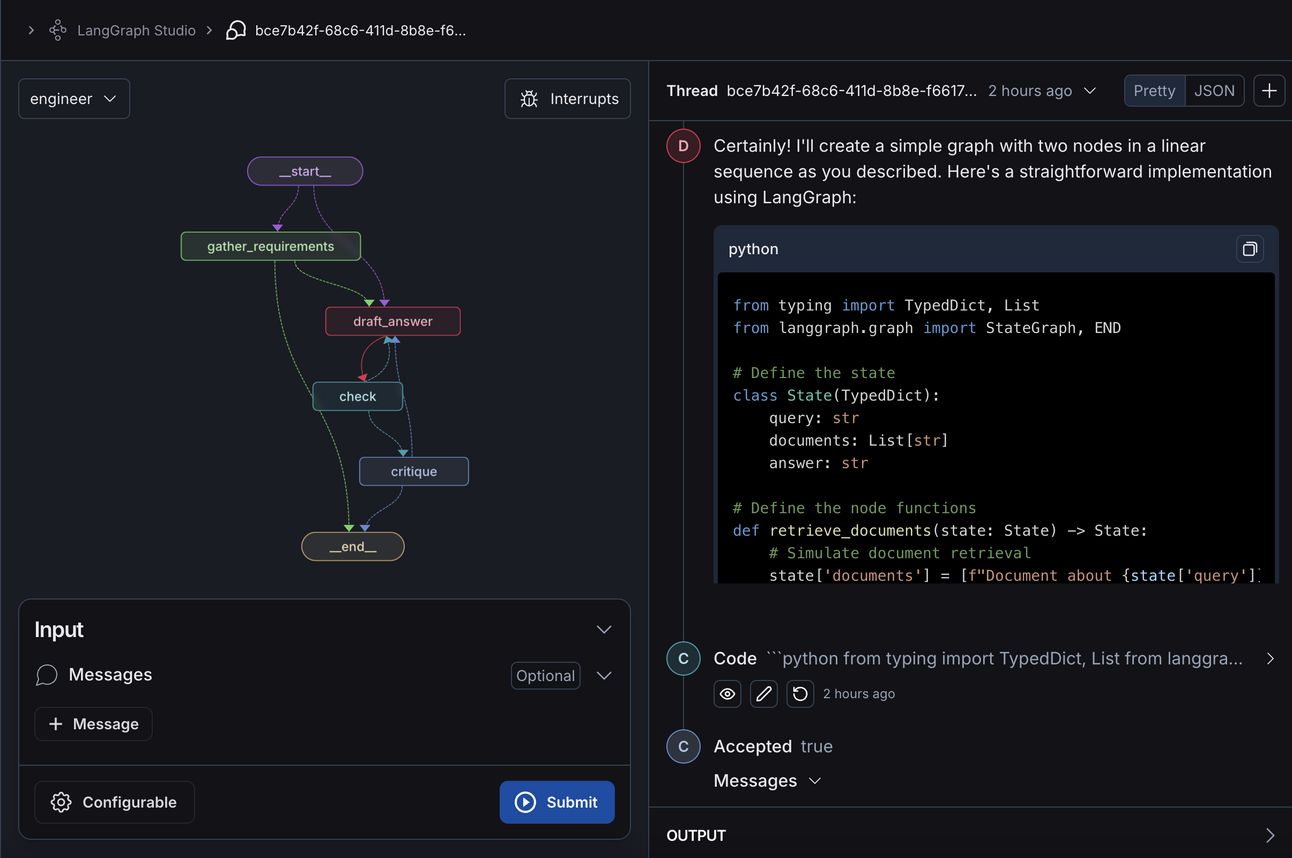

This brings us to LangChain’s latest release: LangGraph Studio! It is a specialized agent IDE that helps you visualize, interact with, and debug applications involving complex agentic workflows. As the name suggests, it is built on top of LangGraph, and any agent deployed to LangGraph Cloud can be visualized here. Once an agent is deployed, its intermediate steps, including tool calls, are streamed, enabling observability and iteration.

It already has “time travel” built in, with the option to edit any past state and fork a new branch from it (see ambient agents below). We can also add breakpoints to any or all nodes, so the graph pauses and asks the user for permission before executing that step. This is quite powerful: Visualization + Breakpoints + Time travel = a faster path to deployment!
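Here is a rough, dependency-free sketch of those mechanics: save a checkpoint before every node, pause at a breakpoint unless the user approves, and "time travel" by forking from an old checkpoint with an edited state. This is my own illustration, not Studio's or LangGraph's code; `Runner`, `Checkpoint`, and `fork` are hypothetical names, and the graph is linear to keep things short. (In LangGraph proper, the closest pieces are, as far as I know, `interrupt_before` at compile time plus `get_state_history` and `update_state`.)

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Set

State = Dict[str, Any]

@dataclass
class Checkpoint:
    step: int
    next_node: Optional[str]  # node about to run; None once the graph has finished
    state: State

@dataclass
class Runner:
    nodes: Dict[str, Callable[[State], State]]
    order: List[str]  # a linear graph keeps the sketch short
    breakpoints: Set[str] = field(default_factory=set)
    history: List[Checkpoint] = field(default_factory=list)

    def _after(self, node: str) -> Optional[str]:
        i = self.order.index(node)
        return self.order[i + 1] if i + 1 < len(self.order) else None

    def _save(self, node: Optional[str], state: State) -> None:
        self.history.append(Checkpoint(len(self.history), node, copy.deepcopy(state)))

    def run(self, state: State, start: Optional[str] = None,
            approve: Callable[[str], bool] = lambda node: True) -> State:
        node = start if start is not None else self.order[0]
        while node is not None:
            self._save(node, state)  # checkpoint before every node
            if node in self.breakpoints and not approve(node):
                return state  # paused: the user declined to continue
            state = {**state, **self.nodes[node](state)}
            node = self._after(node)
        self._save(None, state)
        return state

    def fork(self, step: int, edits: State,
             approve: Callable[[str], bool] = lambda node: True) -> State:
        """Time travel: resume from an old checkpoint with an edited state.
        Earlier checkpoints are left untouched; new ones are appended."""
        cp = self.history[step]
        state = {**copy.deepcopy(cp.state), **edits}
        if cp.next_node is None:
            return state
        return self.run(state, start=cp.next_node, approve=approve)

runner = Runner(
    nodes={
        "search": lambda s: {"results": f"docs about {s['query']}"},
        "draft": lambda s: {"draft": f"summary of {s['results']}"},
        "send": lambda s: {"sent": True},
    },
    order=["search", "draft", "send"],
    breakpoints={"send"},  # ask before anything leaves the system
)
paused = runner.run({"query": "LangGraph"}, approve=lambda node: False)
print(paused)  # stopped before "send": it has a draft but no "sent" key
# Go back to the checkpoint before "search", change the query, and approve this time.
forked = runner.fork(0, {"query": "LangSmith"})
print(forked["draft"], forked["sent"])
```

Resuming a paused run is just a fork from the latest checkpoint with no edits and an approving callback.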

LangGraph Engineer: Bootstrapping Graph Creation

Why stop at visualizing? Let’s bootstrap graph creation! Enter LangGraph Engineer, which builds the scaffolding for your LangGraph app. It works out the nodes and edges by asking you questions (much like an engineer would). Once it has enough information, it writes a draft, runs programmatic checks against it, has an LLM critique it, and then decides whether to revise the draft or finish.
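The ask, draft, check, critique, then revise-or-finish loop can be sketched as plain control flow. Everything here is hypothetical: the questions, the `Draft` structure, and the deterministic stand-ins for the LLM calls (`write_draft`, `revise`, and the `critique` callback) are my own assumptions, not LangGraph Engineer's code.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Edge = Tuple[str, str]

@dataclass
class Draft:
    nodes: List[str]
    edges: List[Edge] = field(default_factory=list)

# Hypothetical clarifying questions; the real tool decides what to ask with an LLM.
QUESTIONS = ["What is the agent's goal?", "Which steps should it take, in order?"]

def gather_requirements(ask: Callable[[str], str]) -> List[str]:
    return [ask(q) for q in QUESTIONS]

def write_draft(answers: List[str]) -> Draft:
    # Stand-in for an LLM: one node per comma-separated step, chained in order.
    steps = [s.strip() for s in answers[1].split(",") if s.strip()]
    edges = [("START", steps[0])] + list(zip(steps, steps[1:]))
    return Draft(steps, edges)  # note: never connects the last step to END

def programmatic_checks(draft: Draft) -> List[str]:
    """Cheap structural checks that need no LLM."""
    problems = []
    known = set(draft.nodes) | {"START", "END"}
    if not any(src == "START" for src, _ in draft.edges):
        problems.append("missing START edge")
    if not any(dst == "END" for _, dst in draft.edges):
        problems.append("missing END edge")
    problems += [f"unknown node: {n}" for edge in draft.edges for n in edge if n not in known]
    return problems

def revise(draft: Draft, problems: List[str]) -> Draft:
    # Stand-in for an LLM edit step; this toy version only knows how to add the END edge.
    edges = list(draft.edges)
    if "missing END edge" in problems:
        edges.append((draft.nodes[-1], "END"))
    return Draft(list(draft.nodes), edges)

def engineer(ask: Callable[[str], str], critique: Callable[[Draft], List[str]],
             max_revisions: int = 3) -> Tuple[Draft, int]:
    draft = write_draft(gather_requirements(ask))
    revisions = 0
    while revisions < max_revisions:
        problems = programmatic_checks(draft) + critique(draft)
        if not problems:
            break  # checks and critique are satisfied: finish
        draft = revise(draft, problems)  # otherwise edit and check again
        revisions += 1
    return draft, revisions

answers = {QUESTIONS[0]: "triage support tickets", QUESTIONS[1]: "classify, route"}
draft, revisions = engineer(ask=answers.__getitem__, critique=lambda d: [])  # no-op critic
print(draft.edges, revisions)
```

Running the cheap programmatic checks before paying for an LLM critique, and capping the number of revisions, keeps the loop from spinning indefinitely.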

The roadmap here seems clear: expect the next steps to be generating code for the suggested nodes and edges, and then running that code within the IDE, for end-to-end workflow creation!

The UX Frontier: Evolving from frameworks to UX!

“Hindsight is 20/20”

Now that we have deployment figured out, the big question becomes: what is the right UX for these workflows? If we believe that personalized UX is the future, then apart from memory, UX for AI is the big unsolved puzzle. A lot has been tried, and we don’t have a clear winner yet.

I highly recommend Harrison Chase’s recent UX-related series on this, which explores the various forms we have seen so far!

Chat-based UX:

The most obvious UX - Chat!

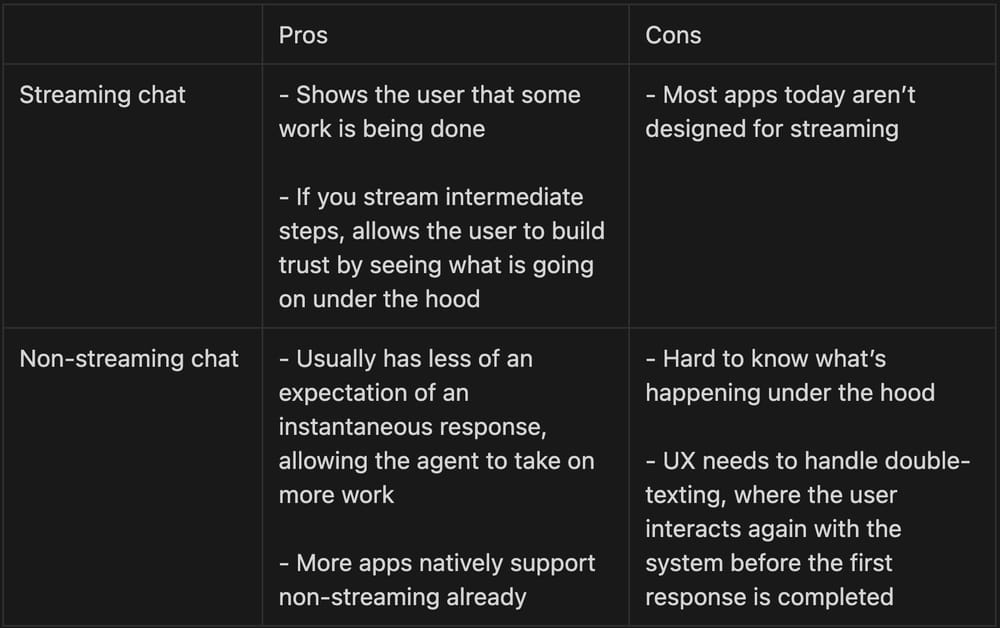

The most non-obvious insight: while streaming is table stakes in AI apps, maybe it is OK not to stream? We do not expect our friends to reply to our texts instantly, so maybe we need not expect it of apps either, especially since most messaging apps handle non-streamed replies natively. This demands trust, but enables longer, more agentic tasks. A bold, contrarian opinion; time will tell.

One should also ask whether a chatbot is needed at all. AI for AI’s sake doesn’t always lead to great UX (LinkedIn’s feature for chatting with a post, for example, seems like a solution looking for a non-existent problem). On the other hand, customer support has been a fantastic fit for this kind of chat-based solution.

Ambient Agents:

Call me biased, but this is my personal favorite. I have been fascinated by the idea of agents working in the background (human-on-the-loop) as opposed to human-in-the-loop. For multiple agents to work on tasks simultaneously, the user needs to be able to see all the steps and “correct the agent”. The user should be able either to tell the agent where it went wrong so it can improve itself, or to tell it what to do from the point where it made the mistake. We saw this via checkpoints in the LangGraph implementation earlier. Devin is another example of this kind of UX.

Other UX examples:

Spreadsheet-based: From AI-native tools (e.g. Matrices) to AI-enriched workflows (Clay and Otto), this is the most intuitive UX for everyone already living in spreadsheets. We are trained on rows and columns by default, so why shouldn’t AI be used the same way? Old habits die hard, after all.

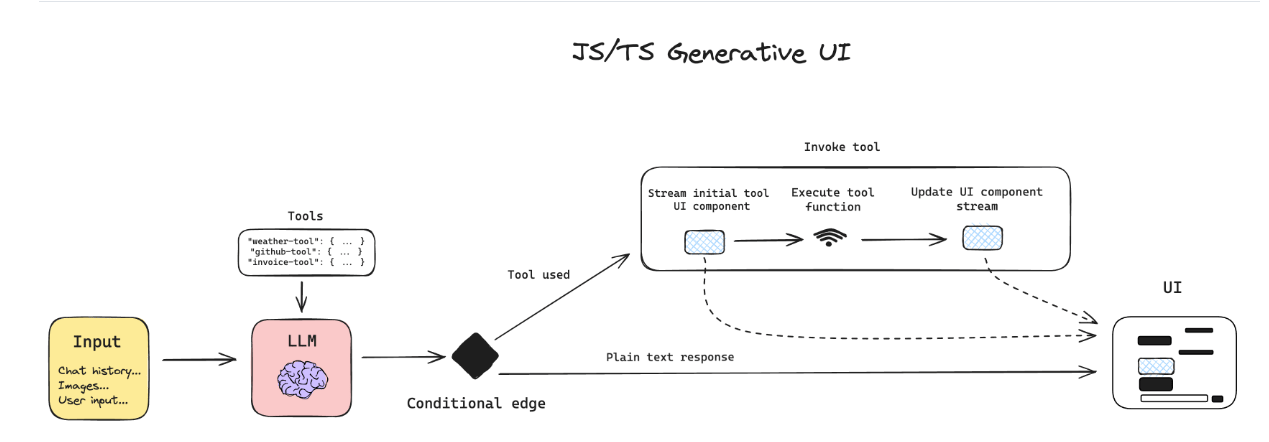

Generative UI: Websim is an example of truly fluid, on-the-fly UI generation; it can feel quite rudimentary, but it is a fantastic starting point for brainstorming ideas. v0 by Vercel is a more polished solution in this prompt-based dynamic UI generation space, though it still feels a lot like the first iteration of something that could be far more powerful for non-technical users!

The other prominent kind of generative UI uses a fixed set of pre-built UI components that are rendered as needed. While not truly generative, this approach gives more control over the quality of the UI elements.
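To make the fixed-components approach concrete: the model emits a structured choice (a component name plus its props) instead of raw markup, and the app renders it only if that component is on a whitelist. This is a minimal sketch that assumes the model has already returned JSON shaped like `{"component": ..., "props": ...}`; that shape, the component names, and `render` are all hypothetical.

```python
import html
import json
from typing import Callable, Dict

# Hypothetical pre-built components; in a web app these would be React (or similar) components.
def weather_card(city: str, temp_c: float) -> str:
    return f'<div class="weather"><h3>{html.escape(str(city))}</h3><p>{temp_c:.0f}&deg;C</p></div>'

def stock_chip(ticker: str, price: float) -> str:
    return f'<span class="stock">{html.escape(str(ticker))}: ${price:.2f}</span>'

# The whitelist: the model can only pick from these, which is where the quality control comes from.
REGISTRY: Dict[str, Callable[..., str]] = {
    "weather_card": weather_card,
    "stock_chip": stock_chip,
}

def render(model_output: str) -> str:
    """Render structured model output with a registered component, else as plain text."""
    try:
        call = json.loads(model_output)
        return REGISTRY[call["component"]](**call["props"])
    except (KeyError, TypeError, ValueError):  # ValueError also covers invalid JSON
        # Not JSON, unknown component, or bad props: fall back to escaped text.
        return f"<p>{html.escape(model_output)}</p>"

print(render('{"component": "weather_card", "props": {"city": "Paris", "temp_c": 21.4}}'))
print(render('{"component": "pie_chart", "props": {}}'))  # not registered: falls back to text
```

The model still decides *what* to show, but every pixel it can produce comes from a component a human designed and reviewed.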

Collaborative UX: Another way to think of AI agents is as collaborators, or as part of a collaborative effort. I hadn’t heard of the Patchwork research project by Ink and Switch before, but it looks very interesting: it explores universal version control tools to level up the quality of work. Highly recommended reading!

Also worth mentioning are Anthropic’s Artifacts, which have to an extent redefined how we think of chat-based UX, producing usable components that are versioned as we iterate.

Another fantastic, well-received UX is Cursor’s Composer for collaborative AI coding. It can edit multiple files at once or even lay out the complete folder structure for an app. It is safe to say we are barely at the beginning of how powerful these UXs can be for rapid app development and iteration based on human feedback.

It is clear that we need to start thinking about frameworks and app development with UX in mind as we take AI applications from cool demos (so 2023) to production (2024+). Make no mistake: in a world where foundation models keep getting stronger, cheaper, faster, and more commoditized, we will need to rethink our moats. Based on where we are, observability (trust), memory, and personalized UX seem like the next frontiers to me. What say you?

Until next time, happy reading!