It is hard to overstate how important agents have become in today’s application layer. In this post, let’s go deeper into how one can orchestrate an agentic workflow using LangGraph (refer to our previous discussion on Multi-Agent Collaboration Frameworks for the overview).

We will refer extensively to LangChain’s recent course on DeepLearning.AI, AI Agents in LangGraph, which I highly recommend checking out if you haven’t already.

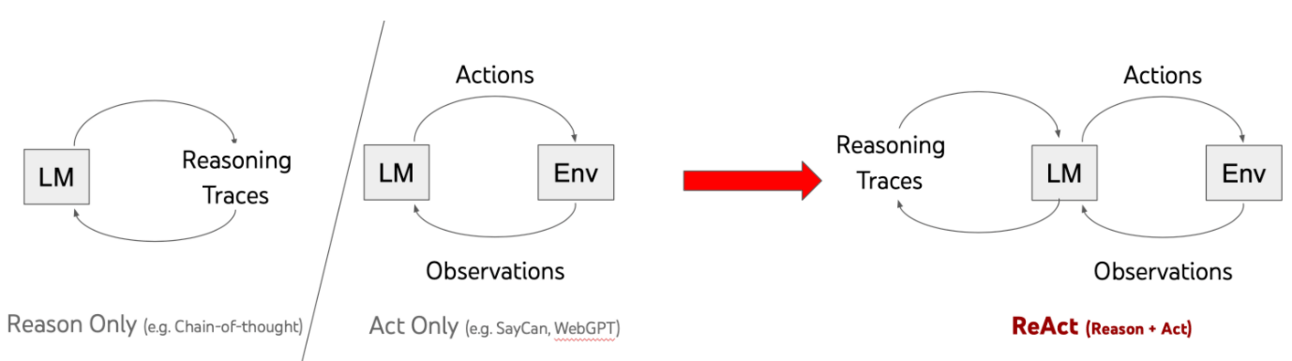

Alright, back to it. You may remember the ReAct (Reason + Act) paper from earlier. It is the basis for building an agent from scratch that makes the LLM “think” before “acting”. The loop we want an LLM to work through is Thought-Action-Observation, repeated until it decides it is done.
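To make the loop concrete, here is a minimal, library-free sketch of a ReAct-style agent. It is not LangGraph code: `stub_llm` stands in for a real model call, and the `multiply` tool and the `Step` record are hypothetical names chosen for illustration. The structure is the point: the model emits a thought and optionally an action, the tool result comes back as an observation, and the loop repeats until the model returns an answer or a turn limit is hit.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    thought: str
    action: Optional[str] = None   # tool to call, or None when the model is done
    action_input: str = ""
    answer: Optional[str] = None   # final answer when no action is requested


def multiply(arg: str) -> str:
    """Hypothetical tool: multiplies two comma-separated integers."""
    a, b = (int(x) for x in arg.split(","))
    return str(a * b)


TOOLS: dict[str, Callable[[str], str]] = {"multiply": multiply}


def stub_llm(question: str, observations: list[str]) -> Step:
    """Stand-in for a real LLM call; a real agent would prompt a model here."""
    if not observations:
        return Step(thought="I need to multiply 6 and 7.", action="multiply", action_input="6,7")
    return Step(thought="I have the product.", answer=f"The answer is {observations[-1]}.")


def react(question: str, llm=stub_llm, max_turns: int = 5) -> tuple[str, list[str]]:
    """Thought -> Action -> Observation, repeated until the model stops or turns run out."""
    observations: list[str] = []
    trace: list[str] = []
    for _ in range(max_turns):
        step = llm(question, observations)             # Thought (plus an optional Action)
        trace.append(f"Thought: {step.thought}")
        if step.action is None:                        # the model decided it is done
            return step.answer or "", trace
        trace.append(f"Action: {step.action}[{step.action_input}]")
        observation = TOOLS[step.action](step.action_input)   # Act
        trace.append(f"Observation: {observation}")
        observations.append(observation)               # feed the result back in
    return "Stopped: turn limit reached.", trace


answer, trace = react("What is 6 times 7?")
print("\n".join(trace))
print(answer)  # The answer is 42.
```

The `max_turns` cap is a design choice worth keeping in any real agent: without it, a model that never decides it is done would loop forever.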

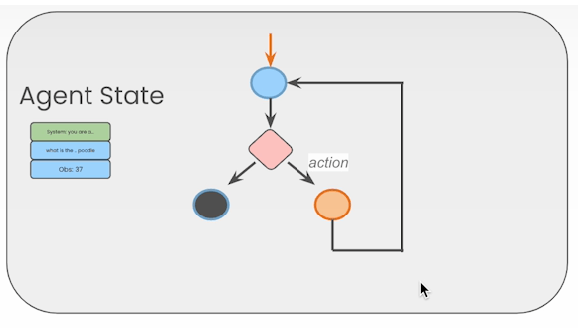

With ReAct as the basis, we can create a simple cyclical graph with function calling using LangGraph. In the graph below, the user prompt is sent to the LLM for an initial “thought”, which either triggers an “action” (tool use) or is returned as the final response. If a tool is called, its result is reviewed and sent back to the “generation” step as an observation, and the cycle repeats.

Let’s quickly define the components of this graph. LangGraph represents each agent or function as a node, and nodes are connected by edges. Conditional edges create branches for more complex workflows while still maintaining the Thought-Action-Observation loop.
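The same loop can be expressed as nodes and edges. The toy runner below is a library-free sketch, not LangGraph’s API: `MiniGraph`, `add_conditional_edge`, `END`, and the node functions are hypothetical names. LangGraph’s own `StateGraph` exposes similarly named methods (`add_node`, `add_edge`, `add_conditional_edges`), but consult its documentation for the real signatures. Here each node takes the state and returns a partial update, a fixed edge always points to the same next node, and a conditional edge is a router function that inspects the state to pick the next node.

```python
from typing import Callable

END = "__end__"  # sentinel meaning "stop the graph"


class MiniGraph:
    """Toy graph runner: nodes update a state dict, edges choose the next node."""

    def __init__(self) -> None:
        self.nodes: dict[str, Callable[[dict], dict]] = {}
        self.edges: dict[str, Callable[[dict], str]] = {}

    def add_node(self, name: str, fn: Callable[[dict], dict]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = lambda state: dst          # always go to dst

    def add_conditional_edge(self, src: str, router: Callable[[dict], str]) -> None:
        self.edges[src] = router                     # router decides at run time

    def run(self, entry: str, state: dict, max_steps: int = 20) -> tuple[dict, list[str]]:
        node, path = entry, []
        for _ in range(max_steps):
            if node == END:
                return state, path
            path.append(node)
            state = {**state, **self.nodes[node](state)}   # merge the partial update
            node = self.edges[node](state)
        raise RuntimeError("step limit reached")


def llm_node(state: dict) -> dict:
    """Stub 'generation' step: asks for a tool first, then answers."""
    if "observation" not in state:
        return {"tool_call": "lookup"}
    return {"tool_call": None, "answer": f"Final: {state['observation']}"}


def action_node(state: dict) -> dict:
    """Stub tool execution that returns an observation."""
    return {"observation": "Paris", "tool_call": None}


def route(state: dict) -> str:
    return "action" if state.get("tool_call") else END


g = MiniGraph()
g.add_node("llm", llm_node)
g.add_node("action", action_node)
g.add_conditional_edge("llm", route)   # branch: call a tool or finish
g.add_edge("action", "llm")            # tool result always goes back to the LLM
final, path = g.run("llm", {"question": "Capital of France?"})
print(path)             # ['llm', 'action', 'llm']
print(final["answer"])  # Final: Paris
```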

The information carried through each such loop is captured in the Agent State. It is important to remember the characteristics of this state, since production apps may interact with multiple users or organizations, and the architecture should accurately reflect each conversation thread at any point in time.

Accessible: Each agent state is accessible to all nodes and edges.

Local: It is local to the graph.

Persistent: Each state is stored in a persistence layer, so it is possible to resume from any agent state at any point in time.
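One way to picture “accessible to all nodes” is a typed state schema where every node reads the whole state but returns only the keys it changes. The sketch below assumes a pattern similar to the one used in the course, where a `messages` field is annotated with `operator.add` so updates are appended rather than overwritten. The `apply_update` merge function is a hypothetical, simplified stand-in for what a graph runtime does between nodes, not LangGraph’s internals.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class AgentState(TypedDict):
    messages: Annotated[list, operator.add]  # reducer: new messages are appended
    next_step: str                           # no reducer: the last write wins


def apply_update(state: dict, update: dict, schema: type = AgentState) -> dict:
    """Merge a node's partial update into the state, honoring per-key reducers."""
    hints = get_type_hints(schema, include_extras=True)
    new_state = dict(state)
    for key, value in update.items():
        reducers = getattr(hints.get(key), "__metadata__", ())
        if reducers and key in new_state:
            new_state[key] = reducers[0](new_state[key], value)  # e.g. list + list
        else:
            new_state[key] = value
    return new_state


state = {"messages": ["user: hi"], "next_step": "llm"}
state = apply_update(state, {"messages": ["ai: hello"], "next_step": "end"})
print(state)  # {'messages': ['user: hi', 'ai: hello'], 'next_step': 'end'}
```

The reducer choice matters: appending keeps the full conversation history in the state, which is exactly what a persistence layer then needs to store.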

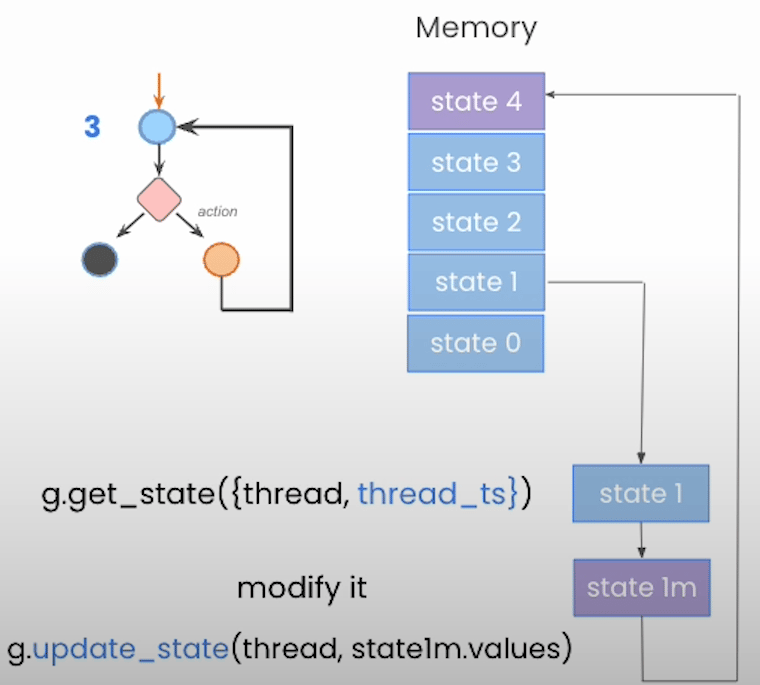

Persistence: It is important for any state to be persistent, so that it can be retrieved later. This enables memory across interactions, interruptions for user input, and retrying or branching off from a previous state (“time travel”). It is especially useful for long-running agents, which are more error-prone. LangGraph uses “checkpointers” to add memory to any graph. Below is an illustration of how this memory allows us to modify a previous state (state 1 to state 1m), leading to state 4.
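The idea behind a checkpointer can be sketched in a few lines: save a snapshot of the state after every step, keyed by a conversation thread ID, so any earlier snapshot can be reloaded, edited, and resumed. The `InMemoryCheckpointer` class and the `step` function below are hypothetical and library-free. LangGraph ships real checkpointers (for example an in-memory `MemorySaver`) and selects the thread through a `thread_id` in the run config, but this sketch does not reproduce their API.

```python
import copy
from collections import defaultdict


class InMemoryCheckpointer:
    """Toy checkpointer: stores every state snapshot per conversation thread."""

    def __init__(self) -> None:
        self._history: dict[str, list[dict]] = defaultdict(list)

    def put(self, thread_id: str, state: dict) -> int:
        self._history[thread_id].append(copy.deepcopy(state))
        return len(self._history[thread_id]) - 1       # index of this checkpoint

    def get(self, thread_id: str, index: int = -1) -> dict:
        return copy.deepcopy(self._history[thread_id][index])

    def history(self, thread_id: str) -> list[dict]:
        return [copy.deepcopy(s) for s in self._history[thread_id]]


def step(state: dict, label: str) -> dict:
    """Stand-in for one node execution: records which step ran."""
    return {"log": state["log"] + [label]}


cp = InMemoryCheckpointer()
state = {"log": []}
for label in ["s1", "s2", "s3"]:
    state = step(state, label)
    cp.put("thread-a", state)                 # checkpoint after every step

# Time travel: reload the first checkpoint, modify it, and branch off from there.
past = cp.get("thread-a", 0)
past["log"][-1] = "s1m"                        # edited past state
cp.put("thread-a", step(past, "s4"))

print(cp.get("thread-a")["log"])      # ['s1m', 's4']
print(len(cp.history("thread-a")))    # 4 snapshots; the originals are untouched
```

Deep-copying on both read and write keeps earlier snapshots immutable, which is what makes branching safe.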

Human-in-the-Loop: The concept of an agent state combined with memory allows for various human-in-the-loop interaction patterns:

Add a breakpoint before a node executes and ask the user to approve or deny specific actions.

Modify a state, either current or past.

Update a state manually.

These patterns let us review and correct a course of action by going back in time to a state before the action was executed.
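The approve, deny, and edit patterns can be sketched as a breakpoint placed right before the action node. Everything here is hypothetical and library-free: `agent_node` proposes a made-up `send_email` call, and the `human` callback returns a decision plus any fields to overwrite. In LangGraph itself, the course compiles the graph with an `interrupt_before` list and resumes or edits via the saved state; check the documentation for the exact calls.

```python
from typing import Callable

Decision = tuple[str, dict]  # ("approve" | "deny" | "edit", fields to overwrite)


def agent_node(state: dict) -> dict:
    """Stub LLM turn that proposes a (hypothetical) tool call."""
    return {**state, "tool_call": {"name": "send_email", "to": "team@example.com"}}


def action_node(state: dict) -> dict:
    """Executes the proposed call; here it only reports what it would do."""
    call = state["tool_call"]
    return {**state, "result": f"{call['name']} -> {call['to']}"}


def run_with_breakpoint(state: dict, human: Callable[[dict], Decision]) -> dict:
    state = agent_node(state)
    # Breakpoint: pause before the action node and hand the pending call to a human.
    decision, edits = human(state["tool_call"])
    if decision == "deny":
        return {**state, "result": "cancelled by user"}
    if decision == "edit":   # the human updates the state before execution resumes
        state = {**state, "tool_call": {**state["tool_call"], **edits}}
    return action_node(state)


print(run_with_breakpoint({}, lambda call: ("approve", {}))["result"])
print(run_with_breakpoint({}, lambda call: ("edit", {"to": "me@example.com"}))["result"])
print(run_with_breakpoint({}, lambda call: ("deny", {}))["result"])
```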

This brings us full circle to multi-agent frameworks. Now that we understand what a state means, we can focus on how multi-agent architectures differ in the way they share this state.

Above is an example of a multi-agent architecture with a shared state: all agents work on the same state, passing it from one agent to the next. In comparison, the supervisor agent below works just as it sounds: it determines what inputs should be passed to the individual agents beneath it. Each of these agents is a graph with its own state, which differs from the shared-state concept.
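The difference in state visibility can be shown with two hypothetical agents run both ways. In the shared-state version, one dict is handed from agent to agent, so the writer sees the researcher’s notes. In the supervisor version, each agent starts from a fresh private state seeded only with what the supervisor passes in, so the writer sees nothing the researcher produced unless the supervisor forwards it explicitly. The agent functions and the `route` callback are illustrative assumptions, not a LangGraph API.

```python
from typing import Callable


def researcher(state: dict) -> dict:
    return {"notes": state.get("notes", []) + [f"notes on {state['task']}"]}


def writer(state: dict) -> dict:
    return {"draft": f"draft using {len(state.get('notes', []))} note(s)"}


AGENTS = {"researcher": researcher, "writer": writer}


def run_shared(task: str) -> dict:
    """Shared state: a single dict is passed from one agent to the next."""
    state = {"task": task}
    for agent in (researcher, writer):
        state.update(agent(state))
    return state


def run_supervised(task: str, route: Callable[[str], list[str]]) -> dict:
    """Supervisor: each agent gets its own private state seeded only with the task."""
    outputs = {}
    for name in route(task):
        private_state = {"task": task}                 # not visible to other agents
        private_state.update(AGENTS[name](private_state))
        outputs[name] = private_state
    return outputs


shared = run_shared("LATS")
print(shared["draft"])                       # draft using 1 note(s)

supervised = run_supervised("LATS", route=lambda t: ["researcher", "writer"])
print(supervised["writer"]["draft"])         # draft using 0 note(s)
```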

Combine the two and we get Hierarchical Agents. Here, the sub-agents can themselves be teams, with each agent being a graph. As in the previous example, these teams are connected by a supervisor. So while agents may share a state within their own team’s flow, each team’s state can be distinct from the others’ when they are connected to the supervisor. This provides a great deal of flexibility in orchestrating workflows, especially when different tasks need to be executed in parallel.
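A hierarchical setup can be sketched by treating each team as a small graph with its own internal shared state, and letting a top-level supervisor see only each team’s summary. The `make_team` factory, the lambda agents, and the choice of a thread pool to run teams in parallel are all illustrative assumptions rather than how LangGraph implements subgraphs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Agent = Callable[[dict], str]


def make_team(name: str, agents: list[Agent]) -> Callable[[str], dict]:
    """A team is itself a small graph whose agents share one internal state."""
    def team(task: str) -> dict:
        state = {"task": task, "log": []}          # shared inside this team only
        for agent in agents:
            state["log"].append(agent(state))
        return {"team": name, "summary": state["log"][-1]}  # only a summary leaves
    return team


research_team = make_team("research", [
    lambda s: f"search {s['task']}",
    lambda s: f"summarize {len(s['log'])} result(s)",
])
writing_team = make_team("writing", [
    lambda s: f"outline {s['task']}",
    lambda s: "draft",
    lambda s: f"edit after {len(s['log'])} step(s)",
])


def top_supervisor(task: str, teams: list) -> list[dict]:
    """Top-level supervisor: runs teams in parallel and sees only their summaries."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda team: team(task), teams))  # keeps input order


print(top_supervisor("essay on LATS", [research_team, writing_team]))
```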

This also puts into perspective the earlier post on Flow Engineering and how state is shared across nodes. You may remember the AlphaCodium paper, co-authored by Itamar Friedman, which proposed the term and described its implication: “The proposed flow, is divided into two main phases: a pre-processing phase where we reason about the problem in natural language, and an iterative code generation phase where we generate, run, and fix a code solution against public and AI-generated tests.” We can now look at the AlphaCodium flow as a graph: a linear pipeline in the pre-processing phase that feeds into a more iterative flow within Code Iterations. This is an example of how the concept of nodes and edges applies to any use case with a custom flow architected as a graph, i.e., flow engineering!
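The two-phase shape can be sketched as a linear list of pre-processing nodes followed by a generate-run-fix loop. This is a heavily simplified illustration, not AlphaCodium’s implementation: the pre-processing steps are stubs, `CANDIDATES` stands in for successive LLM attempts (a real flow would prompt a model with the failing tests to produce the fix), and the sketch merges public and AI-generated tests into one loop, whereas the paper iterates on them in stages.

```python
from typing import Callable


# Pre-processing phase: a linear pipeline of (stub) reasoning steps.
def reflect_on_problem(state: dict) -> dict:
    return {**state, "reflection": "return the sum of all elements"}


def generate_ai_tests(state: dict) -> dict:
    return {**state, "ai_tests": [([], 0), ([5], 5)]}


PREPROCESS = [reflect_on_problem, generate_ai_tests]

# Stand-ins for successive LLM attempts at the code.
CANDIDATES: list[Callable[[list], int]] = [
    lambda xs: xs[0] + xs[-1],   # buggy first attempt
    lambda xs: sum(xs),          # "fixed" attempt
]


def failing_inputs(solution: Callable[[list], int], tests: list) -> list:
    """Run the solution on every test and collect the inputs that fail."""
    failed = []
    for args, expected in tests:
        try:
            if solution(args) != expected:
                failed.append(args)
        except Exception:
            failed.append(args)
    return failed


def code_iterations(state: dict, max_iters: int = 5) -> dict:
    """Iterative phase: generate, run against tests, and fix until all pass."""
    tests = state["public_tests"] + state["ai_tests"]
    feedback: list = []
    for i, candidate in enumerate(CANDIDATES[:max_iters]):
        failed = failing_inputs(candidate, tests)
        if not failed:
            return {**state, "solution_index": i, "iterations": i + 1, "feedback": feedback}
        feedback.append(failed)   # would be included in the next "fix" prompt
    return {**state, "solution_index": None, "iterations": len(feedback), "feedback": feedback}


state = {"problem": "return the sum of a list", "public_tests": [([1, 2, 3], 6)]}
for node in PREPROCESS:           # straight-line edges
    state = node(state)
state = code_iterations(state)    # cyclic sub-flow
print(state["solution_index"], state["iterations"])  # 1 2
```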

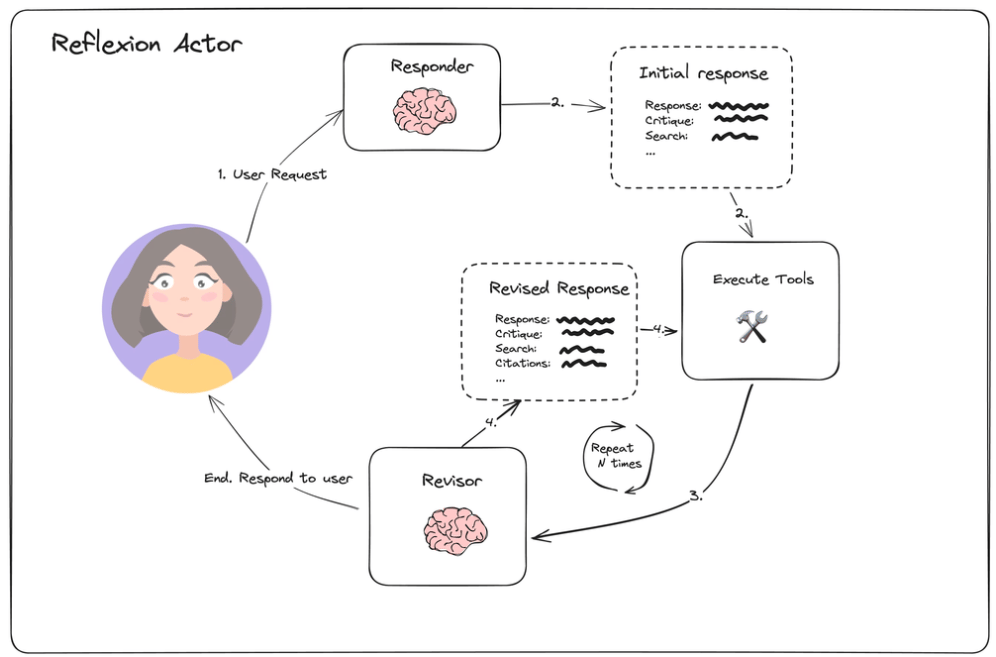

While we are on the topic of iterations, let’s revisit Reflection Agents, which use the graph framework for their architecture:

Plan and Execute with Reflexion: This comprises a “Responder” and a “Revisor”. The Responder takes a user query and generates a response followed by a self-reflection. The Revisor then re-responds, reflecting on the previous reflections to create a better response. The process repeats a set number of times, defined either by the user or left to the LLM. Can you identify the state, nodes and edges? Is the state being shared?
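The Responder/Revisor loop can be sketched with stub functions in place of LLM calls. The critique strings, the early-stop rule (stop once the critique says the answer is complete), and `max_revisions` are assumptions for illustration; they model the two ways the text mentions of ending the loop, a user-defined cap or a decision left to the model.

```python
def responder(query: str) -> tuple[str, str]:
    """Stub: first draft plus a self-reflection."""
    return f"Answer to: {query}", "Missing a concrete example."


def revisor(draft: str, reflections: list[str]) -> tuple[str, str]:
    """Stub: a real revisor would prompt an LLM with the draft and all past reflections."""
    revised = f"{draft} [revised using {len(reflections)} reflection(s)]"
    critique = "Looks complete." if len(reflections) >= 2 else "Could cite a source."
    return revised, critique


def reflexion(query: str, max_revisions: int = 3) -> tuple[str, list[str]]:
    draft, critique = responder(query)
    reflections = [critique]
    for _ in range(max_revisions):            # user-defined cap on iterations
        if critique == "Looks complete.":     # or let the model decide it is done
            break
        draft, critique = revisor(draft, reflections)
        reflections.append(critique)
    return draft, reflections


draft, reflections = reflexion("What is LATS?")
print(draft)
print(reflections)
```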

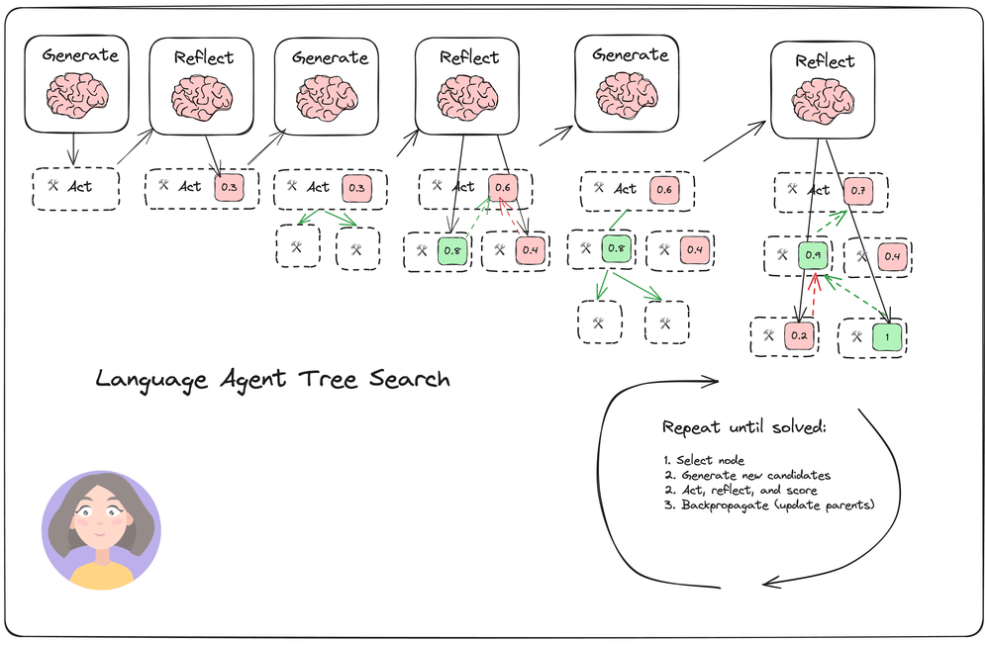

Language Agent Tree Search (LATS), which we discussed in the earlier post on Agents, claims to improve further on Reflexion by exploring alternative choices at each step rather than refining a single plan or trajectory. It does this using Monte Carlo Tree Search (MCTS), systematically balancing exploration with exploitation.

In essence, this is a tree search over the space of possible actions. It generates an action, reflects on it, generates further sub-actions, reflects again, and so on. The score from each reflection is backpropagated through the parent nodes up to the root, updating their values so that future decisions about which branch to expand are informed by these updated states.
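The four MCTS phases (selection, expansion, simulation, backpropagation) can be shown on a toy problem. This is generic UCT-based MCTS, not the LATS implementation: actions are just 0 or 1, the search depth is 3, and `reflection_score` is a hypothetical stand-in for an LLM’s reflection or value estimate that rewards matching an assumed target sequence. In LATS, the actions and scores would come from the LLM instead.

```python
import math
import random

TARGET = (1, 0, 1)   # hypothetical "best" action sequence
DEPTH = len(TARGET)


def reflection_score(actions: tuple) -> float:
    """Stand-in for an LLM reflection/value score in [0, 1]."""
    return sum(a == t for a, t in zip(actions, TARGET)) / DEPTH


class Node:
    def __init__(self, actions: tuple = (), parent: "Node | None" = None) -> None:
        self.actions, self.parent = actions, parent
        self.children: list["Node"] = []
        self.visits, self.value = 0, 0.0

    def uct(self, c: float = 1.4) -> float:
        if self.visits == 0:
            return float("inf")                      # try unvisited children first
        exploit = self.value / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def mcts(iterations: int = 300, seed: int = 0) -> tuple:
    rng = random.Random(seed)
    root = Node()
    for _ in range(iterations):
        node = root
        # 1. Selection: follow the highest-UCT child down the expanded tree.
        while node.children and len(node.actions) < DEPTH:
            node = max(node.children, key=Node.uct)
        # 2. Expansion: add the possible next actions to a non-terminal leaf.
        if len(node.actions) < DEPTH:
            node.children = [Node(node.actions + (a,), node) for a in (0, 1)]
            node = rng.choice(node.children)
        # 3. Simulation: random rollout to full depth, then score it.
        rollout = node.actions
        while len(rollout) < DEPTH:
            rollout += (rng.choice((0, 1)),)
        reward = reflection_score(rollout)
        # 4. Backpropagation: update every ancestor on the path back to the root.
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    # Read out the most-visited path as the chosen trajectory.
    node, best = root, ()
    while node.children:
        node = max(node.children, key=lambda n: n.visits)
        best = node.actions
    return best


print(mcts())
```

The exploration constant `c` trades off revisiting branches that have scored well against trying less-visited ones; 1.4 (roughly the square root of 2) is a common default when rewards lie in [0, 1].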

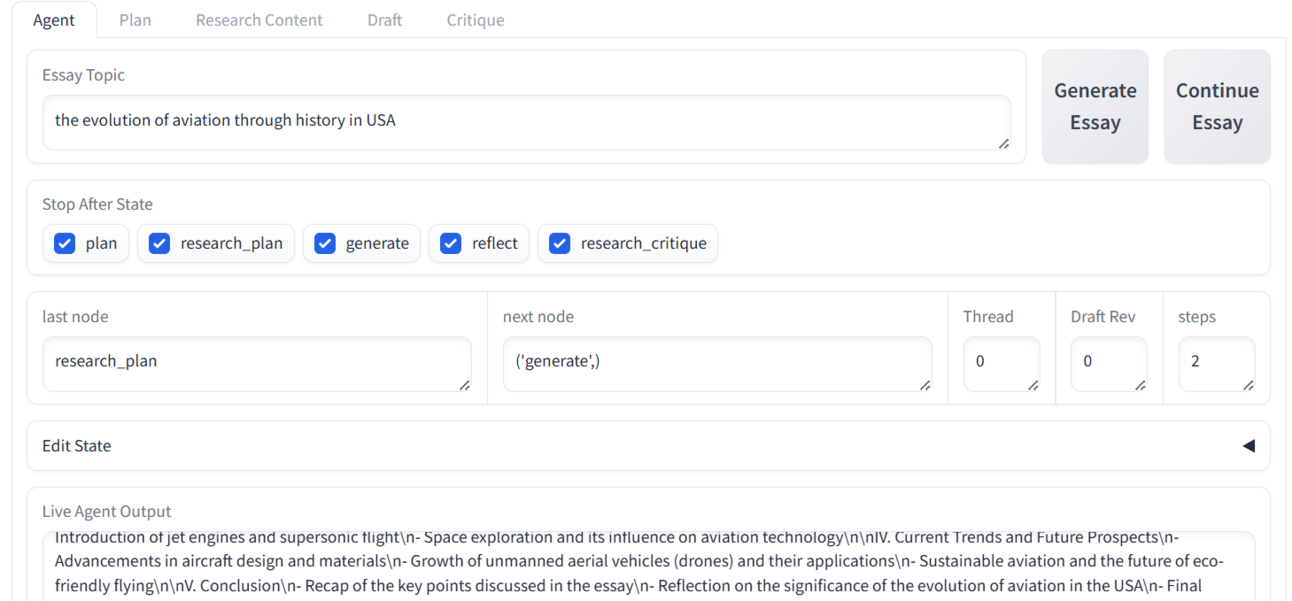

Thanks to the amazing folks at LangChain and Gradio, we can visualize these steps via a GUI. By interrupting the flow, we can see below which node ran last and which is next. For example, in the essay-writing graph below, the last node was “research_plan” and the next is “generate”.

By clicking on the “Plan” tab, we can see the plan that will be used to generate the first draft:

When we continue, the first draft becomes available, with “reflect” as the next node. The first draft is then critiqued, and the critique is appended to the state and sent back to the generate node for a rerun.
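The essay-writing flow can be sketched as a small loop that records the (last node, next node) pair at each step, mirroring what the GUI shows after an interrupt. Only the node names “research_plan”, “generate”, and “reflect” come from the text; the stub node bodies and the `max_revisions` stopping rule are assumptions, and the real graph in the course has additional nodes.

```python
END = "__end__"


def research_plan(state: dict) -> dict:
    return {"plan": f"outline for {state['topic']}"}


def generate(state: dict) -> dict:
    n = state.get("revision_number", 0) + 1
    return {"draft": f"draft v{n}", "revision_number": n}


def reflect(state: dict) -> dict:
    return {"critiques": state.get("critiques", []) + [f"critique of {state['draft']}"]}


def after_generate(state: dict) -> str:
    """Conditional edge: stop once the assumed revision budget is used up."""
    return END if state["revision_number"] >= state["max_revisions"] else "reflect"


NODES = {"research_plan": research_plan, "generate": generate, "reflect": reflect}
EDGES = {
    "research_plan": lambda s: "generate",
    "generate": after_generate,
    "reflect": lambda s: "generate",      # critique goes back for a rerun
}


def run(topic: str, max_revisions: int = 2) -> tuple[dict, list[tuple[str, str]]]:
    state = {"topic": topic, "max_revisions": max_revisions}
    node, trace = "research_plan", []
    while node != END:
        state.update(NODES[node](state))
        nxt = EDGES[node](state)
        trace.append((node, nxt))         # (last node, next node), as in the GUI
        node = nxt
    return state, trace


state, trace = run("agents in LangGraph")
for last, nxt in trace:
    print(f"last: {last:<14} next: {nxt}")
```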

The graph then iterates a number of times until it decides it is done, and the final draft is shared as the final response. We can go back to any of these nodes and modify it to branch out into a new state, a testament to how flexible this multi-agent architecture can be. The final draft looked something like this. Note that while it was more verbose and nuanced, adding a more robust knowledge source and an advanced RAG pipeline, rather than relying on internet search as the only tool, could make it worthy of submission.

To that point, combining advanced RAG with multi-agent collaboration, and providing access to external tools and knowledge bases, can lead to complex, coherent applications for real-world use cases. We already have the tooling to deploy production-grade applications, given the right frameworks and well-thought-out flow engineering. The big question is: how complex a workflow can stateful agents support resiliently? To find the answer, Build and Evaluate: this is the way!

Until next time… Happy reading!