The launch of Llama 3.1 has the potential to finally drive a step change in the capabilities of foundation models, given how good it is out of the box and that it is open source (though not truly open source by definition, given the limited details on training data*; the main exception is Alexandr Wang, CEO of Scale AI, confirming on X that “Scale Data Foundry was utilized to generate frontier data (SFT & RLHF data) to push the performance of Llama-3.1”). To their credit, though, Meta did release the weights and an accompanying paper explaining in detail how the model came to be. Respect!

Not a fan of legacy evals? How about Scale’s SEAL evals? Llama 3.1 slots in just below Sonnet 3.5 and above GPT-4o.

Allen AI’s ZeroEval concurs:

If you were in the “fine-tuning is dead” camp, this release does inspire a rethink. Here are the results of the initial evals, courtesy of Kyle Corbitt (OpenPipe): “never been an open model this small, this good!”

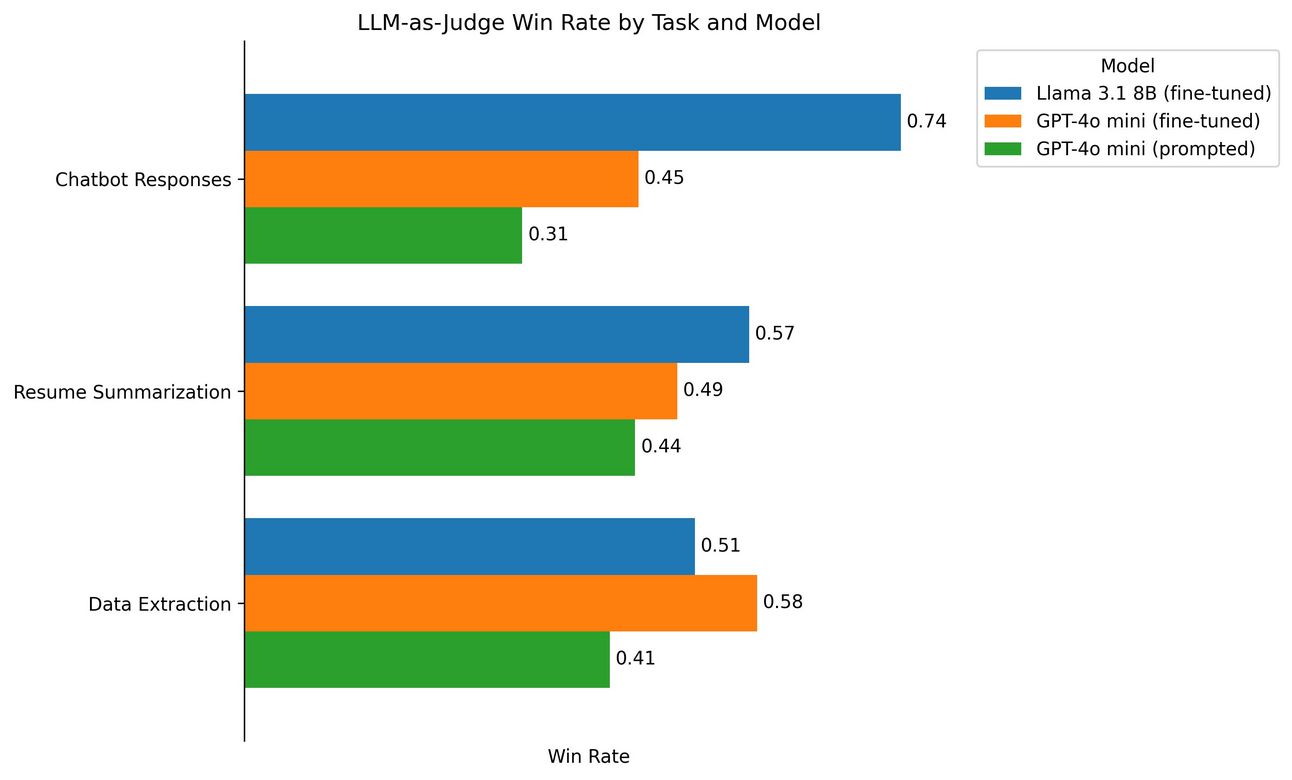

How about a fine-tuned Llama 3.1 vs a fine-tuned GPT-4o mini (yes, OpenAI launched fine-tuning for GPT-4o mini just yesterday)? Llama 3.1 wins on chatbot responses and resume summarization but underperforms on data extraction. Once again, huge thanks to Kyle at OpenPipe for this great work!

The kicker is the updated license: you can now use Llama 3.1 for synthetic data generation.

Alright, so it is good, even the best when it comes to instruction following. But what about cost? At 405B parameters, the hardware needed to serve it surely cannot be cheap. It turns out Fireworks blows everyone out of the water at $3 per million input tokens and $3 per million output tokens.
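To put that price in perspective, here is a back-of-the-envelope calculation in Python. It assumes the quoted rate means $3 per million input tokens and $3 per million output tokens; the workload (10,000 requests a day, 1,500 input and 500 output tokens each) is purely hypothetical.

```python
# Back-of-the-envelope serving cost under flat per-million-token pricing.
# Assumption: $3 per 1M input tokens and $3 per 1M output tokens.
# The workload numbers in the example below are hypothetical.

PRICE_PER_M_INPUT = 3.00   # USD per 1,000,000 input tokens (assumed)
PRICE_PER_M_OUTPUT = 3.00  # USD per 1,000,000 output tokens (assumed)


def request_cost(input_tokens: int, output_tokens: int,
                 price_in: float = PRICE_PER_M_INPUT,
                 price_out: float = PRICE_PER_M_OUTPUT) -> float:
    """Return the USD cost of a single request."""
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                 days: int = 30) -> float:
    """Scale one request's cost to a month of identical traffic."""
    return requests_per_day * days * request_cost(input_tokens, output_tokens)


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests/day, 1,500 in + 500 out tokens each.
    print(f"per request: ${request_cost(1_500, 500):.4f}")               # $0.0060
    print(f"per month:   ${monthly_cost(10_000, 1_500, 500):,.2f}")      # $1,800.00
```

Because input and output are priced the same here, only the total token count matters; with asymmetric pricing, the output-heavy workloads would be the ones to watch.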

But how is Fireworks doing this? Beating the competition by this margin is really something! We can only speculate, so how about this banger from Hamel: buy AMD?

Remember the chart from my post back in April? Here is the updated version by Maxime Labonne at Liquid AI. Note the gap closing between open-source and closed models in the upper right; it puts the significance of this release in context.

That should have everyone excited, yes? Well, not everyone. Enter the EU and its AI Act, right on cue.

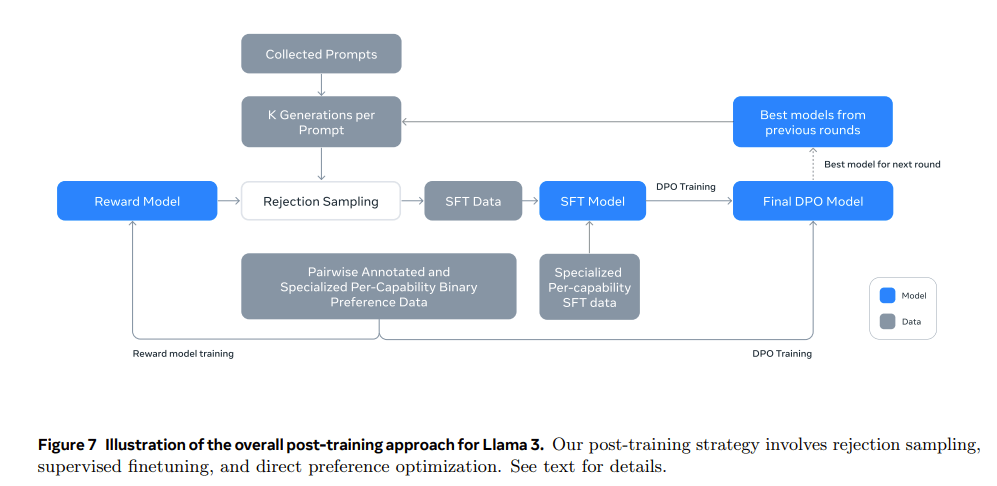

But before you pass judgment, I highly recommend going through the 92-page paper they released: one of the best in recent memory and extremely detailed on process. TL;DR: supervised fine-tuning (SFT) + synthetic data for the win!

Notably, they have also open-sourced system-level filtering mechanisms: Prompt Guard and Code Shield. Given how good the paper is, I will use its definitions as is:

Prompt Guard is a model-based filter designed to detect prompt attacks, which are input strings designed to subvert the intended behavior of an LLM functioning as part of an application. The model is a multi-label classifier that detects two classes of prompt attack risk - direct jailbreaks (techniques that explicitly try to override a model’s safety conditioning or system prompt) and indirect prompt injections (instances where third-party data included in a model’s context window includes instructions inadvertently executed as user commands by an LLM).

Code Shield is an example of a class of system-level protections based on providing inference-time filtering. In particular, it focuses on detecting the generation of insecure code before it might enter a downstream use case such as a production system.
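To make “inference-time filtering” a little more concrete, here is a minimal Python sketch of the general idea: scan the model’s generated code before it is returned and withhold it if any rule fires. This is not Code Shield’s actual API or rule set; the rule names, the regexes, and the `scan_generated_code` and `filter_completion` helpers are all hypothetical and illustrative only.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules: a few regexes for common insecure Python patterns.
# Illustrative only; not Code Shield's real (much larger) rule set.
INSECURE_PATTERNS = {
    "eval-call": re.compile(r"\beval\s*\("),
    "shell-true": re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True"),
    "pickle-loads": re.compile(r"\bpickle\.loads?\s*\("),
    "weak-hash-md5": re.compile(r"\bhashlib\.md5\s*\("),
}


@dataclass
class ScanResult:
    is_insecure: bool
    findings: list = field(default_factory=list)  # (rule_name, line_number) pairs


def scan_generated_code(code: str) -> ScanResult:
    """Scan model output line by line and record every rule that matches."""
    findings = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for rule, pattern in INSECURE_PATTERNS.items():
            if pattern.search(line):
                findings.append((rule, lineno))
    return ScanResult(is_insecure=bool(findings), findings=findings)


def filter_completion(completion: str) -> str:
    """Inference-time gate: pass clean output through, withhold flagged output."""
    result = scan_generated_code(completion)
    if result.is_insecure:
        rules = ", ".join(sorted({rule for rule, _ in result.findings}))
        return f"[blocked: insecure code detected ({rules})]"
    return completion


if __name__ == "__main__":
    generated = "import subprocess\nsubprocess.run(cmd, shell=True)\n"
    print(filter_completion(generated))
    # [blocked: insecure code detected (shell-true)]
```

A pattern pass like this is cheap enough to run on every completion, which is the appeal of system-level filters; the trade-off is that simple patterns ignore context, so they can both miss real issues and flag harmless code.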

The paper also showcases capabilities such as tool use and multi-step reasoning (agentic systems). I recommend exploring some of them on their GitHub.

These are exciting times: we can now see a future where frontier foundation models are no longer owned by a few, where maybe fine-tuning is not dead after all, and where maybe Zuck means every word of his essay (also a great read). If audio/video is your thing, here is his interview with Rowan Cheung.

The pace at which AI is moving can be overwhelming, and there never seems to be enough time. But do not worry, I’ve got you!

If you have made it this far and have not subscribed, please do. If you like what you read, please tell others: knowledge shared is knowledge gained!

Also, feel free to share your thoughts and ideas in the comments section, or reach me at:

LinkedIn: Divyanshu Dixit

Until next time, Happy Reading!