re·triev·al (noun): “the process of getting something back from somewhere.”

Having done the hard work of indexing the data, the next step is fetching the “relevant data” based on the user query (see query translation, routing and query construction).

The most common and straightforward way of doing this is by identifying and fetching the chunks that are most semantically similar to the user query, from previously indexed data (nearest neighbor). This looks something like the below in the vector space:
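As a minimal sketch of nearest-neighbor retrieval, the snippet below scores every indexed chunk against the query with cosine similarity and keeps the top k. The toy three-dimensional vectors and the names `cosine_similarity`, `top_k_chunks` and `toy_index` are assumptions made for illustration; a real setup would use an embedding model and a vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k_chunks(query_vec, indexed_chunks, k=2):
    """Return the texts of the k chunks most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Hypothetical index: (chunk text, embedding) pairs with made-up embeddings.
toy_index = [
    ("chunk about cats", [0.9, 0.1, 0.0]),
    ("chunk about dogs", [0.8, 0.3, 0.1]),
    ("chunk about tax law", [0.0, 0.2, 0.9]),
]

nearest = top_k_chunks([1.0, 0.0, 0.0], toy_index, k=2)
print(nearest)
```

A brute-force scan like this is fine for small collections; vector databases typically swap it for an approximate nearest-neighbor index.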

As is now evident, retrieval is not just one step but a series of steps: it starts with query transformation, continues with indexing, and now extends to improving the quality of the results after the relevant chunks have been fetched. If your question is why, let’s consider a specific case. Assume we have retrieved the top k chunks based on a similarity search on the vector database, but most of them are repetitive or do not fit the context window of the LLM we are using. We will need to improve the quality of this context by applying some post-retrieval techniques before presenting it to the LLM.

Continuing on the theme of there being no one correct way of doing things in the realm of LLMs, the most appropriate technique depends on the use case and the nature of the chunks. Here are some of the most prominent ones:

Ranking

Reranking:

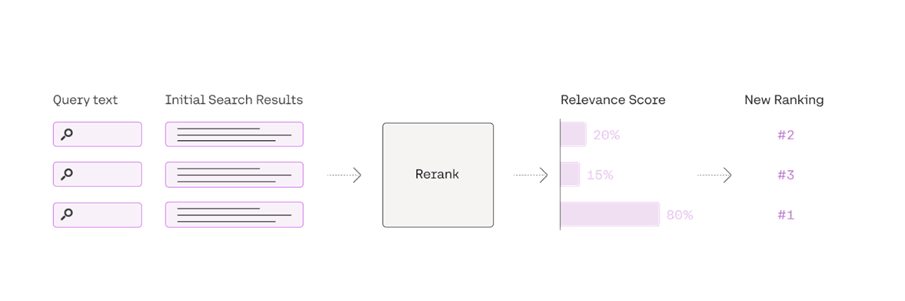

If we are looking to find an answer that may be embedded in a specific chunk within our database, Reranking can be an effective strategy to provide the most relevant context to the LLM. There are various ways to do this:

Increasing Diversity: The most commonly used method for this is Maximal Marginal Relevance (MMR). It picks documents one at a time, balancing a) similarity to the query against b) distance from the documents already selected.

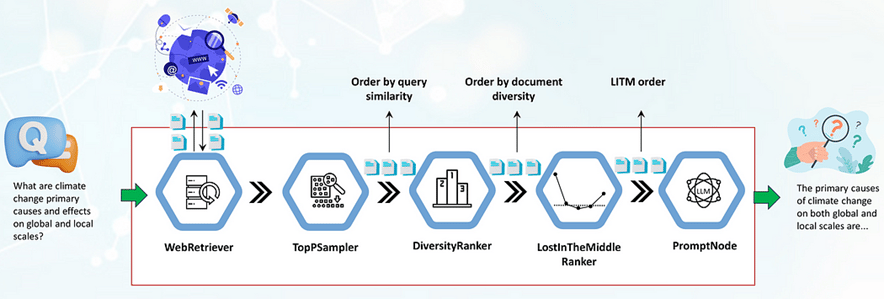
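To make the trade-off in MMR concrete, here is a small sketch under a few assumptions: cosine similarity as the similarity measure, a weight `lambda_mult` (a name chosen here) that balances relevance against redundancy, and toy two-dimensional vectors. It is one common formulation, not the only one.

```python
import math

def cos(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mmr(query_vec, doc_vecs, k, lambda_mult=0.5):
    """Greedily pick k document indices, trading relevance against redundancy."""
    selected = []
    candidates = list(range(len(doc_vecs)))
    while candidates and len(selected) < k:
        best, best_score = None, float("-inf")
        for i in candidates:
            relevance = cos(query_vec, doc_vecs[i])
            # Redundancy: similarity to the closest already-selected document.
            redundancy = max((cos(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
            score = lambda_mult * relevance - (1 - lambda_mult) * redundancy
            if score > best_score:
                best, best_score = i, score
        selected.append(best)
        candidates.remove(best)
    return selected

# Documents 0 and 1 are near-duplicates; document 2 is different but still relevant.
docs = [[1.0, 0.0], [0.98, 0.2], [0.0, 1.0]]
query = [0.8, 0.6]

diverse = mmr(query, docs, k=2, lambda_mult=0.5)
relevance_only = mmr(query, docs, k=2, lambda_mult=1.0)
print(diverse, relevance_only)
```

With `lambda_mult=1.0` the selection collapses to plain similarity ranking and returns both near-duplicates; lowering it swaps the second duplicate for the more distinct document.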

The DiversityRanker by Haystack is worth mentioning here. It uses sentence transformers to calculate similarity between documents. It starts by choosing the semantically closest document, followed by the “document that is, on average, least similar to the already selected documents”, and so on.

LostInTheMiddleReranker: Another one by Haystack, built to get around the issue of LLMs not being great at retrieving information from the middle of a document. It does so by reranking so that the best documents are placed at the beginning and end of the context window. The recommendation is to use this reranker last, after relevance and diversity.
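The reordering idea can be sketched in a few lines. The function below is an illustration of the principle (best documents at the edges, weakest in the middle) and is not Haystack's implementation; the name `reorder_for_long_context` is made up for this example, and the input is assumed to be sorted best-first already.

```python
def reorder_for_long_context(docs_best_first):
    """Place the strongest documents at both ends and the weakest in the middle."""
    front, back = [], []
    for position, doc in enumerate(docs_best_first):
        # Alternate: even ranks fill the front, odd ranks fill the back.
        if position % 2 == 0:
            front.append(doc)
        else:
            back.append(doc)
    # Reverse the back half so the second-best document ends up last.
    return front + back[::-1]

ranked = ["d1", "d2", "d3", "d4", "d5"]  # d1 is the most relevant
reordered = reorder_for_long_context(ranked)
print(reordered)
```

Here the two best documents, `d1` and `d2`, land at the start and end of the context, while the weakest, `d5`, sits in the middle.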

Cohere Rerank: This is provided via Cohere’s Rerank endpoint, whereby the initial search results are scored by comparing them to the user query. This helps recalculate results based on semantic similarity between query text and documents rather than just relying on vector-based searches.

bge-rerank: In addition to evaluating which embedding model works best for your data, it is crucial to also check which reranker works best with that embedding model. We can use Hit Rate and Mean Reciprocal Rank (MRR) as our evaluation metrics for retrieval. For quick reference, Hit Rate is how often the correct answer is found in the top-k retrieved chunks, and MRR is the average, across queries, of the reciprocal of the rank at which the first relevant document appears. As we can see below, bge-rerank-large or cohere-reranker used with JinaAI-Base embeddings seems to fare quite well for this particular dataset. One thing to note in the table below: generally speaking, embedding models are not great rerankers. Jina is the best at embedding, for example, while bge-reranker is the best at reranking.
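For a feel of how these two metrics are computed, here is a small sketch. It assumes exactly one relevant chunk per query, and the data is made up for illustration; it does not reproduce the evaluation referenced above.

```python
def hit_rate(results, relevant, k):
    """Fraction of queries whose relevant chunk appears in the top-k results."""
    hits = sum(1 for retrieved, rel in zip(results, relevant) if rel in retrieved[:k])
    return hits / len(results)

def mean_reciprocal_rank(results, relevant):
    """Average of 1/rank of the relevant chunk; a miss contributes 0."""
    total = 0.0
    for retrieved, rel in zip(results, relevant):
        if rel in retrieved:
            total += 1.0 / (retrieved.index(rel) + 1)
    return total / len(results)

# Three toy queries: ranked retrieval results and the one relevant chunk for each.
results = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant = ["a", "e", "z"]

hr = hit_rate(results, relevant, k=3)
mrr = mean_reciprocal_rank(results, relevant)
print(hr, mrr)
```

The relevant chunk is ranked first for one query, second for another and missing for the third, so the Hit Rate is 2/3 and the MRR is (1 + 1/2 + 0) / 3 = 0.5.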

mxbai-rerank-v1: I would be remiss not to mention the latest reranking models from the Mixedbread team, which are fully open source and released with a claim to being SOTA. The performance is claimed to be better than both Cohere and bge-large - definitely worth exploring!

LLM-based reranking: One of the first methods to effectively use off-the-shelf LLMs such as GPT3.5 to rerank retrieved documents is reported to beat most other approaches, including Cohere rerank. To get around the issue of the retrieved context being larger than the LLM’s context window, this method utilizes a “sliding window” strategy, which, as the name suggests, progressively ranks chunks within a sliding window. The caveat here is the latency and cost that LLMs tend to introduce into a process, so it is worth tinkering with smaller open source models for optimal performance.
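The sliding window strategy described above can be sketched as follows. Everything here is an assumption for illustration: the window slides from the bottom of the list to the top so that strong chunks can bubble upwards, `rank_window` is a hypothetical callable standing in for the LLM call, and the toy ranker simply counts shared words.

```python
def sliding_window_rerank(query, chunks, rank_window, window_size=4, step=2):
    """Rerank a long list by ranking overlapping windows from the bottom up.

    Assumes step <= window_size so that consecutive windows overlap.
    """
    ranked = list(chunks)
    end = len(ranked)
    while True:
        start = max(0, end - window_size)
        # Only this window is sent to the ranker, so it fits a small context.
        ranked[start:end] = rank_window(query, ranked[start:end])
        if start == 0:
            break
        end -= step
    return ranked

def toy_rank_window(query, window):
    """Stand-in for an LLM: order chunks by the number of words shared with the query."""
    query_words = set(query.lower().split())
    return sorted(window, key=lambda c: len(query_words & set(c.lower().split())), reverse=True)

chunks = ["alpha", "beta", "gamma", "delta", "llm reranking", "epsilon"]
reranked = sliding_window_rerank("llm reranking", chunks, toy_rank_window)
print(reranked)
```

Because consecutive windows overlap, the best chunk from a lower window is carried into the next one and can travel all the way to the top, even though the ranker never sees the full list at once.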

Prompt Compression:

This seems like the right place to introduce the concept of Prompt Compression, as it ties in quite closely with Reranking. This technique reduces the noise in the retrieved documents by “compressing” irrelevant information (i.e., information irrelevant to the user query). A few approaches within this framework are:

LongLLMLingua: This approach is based on the Selective-Context and LLMLingua frameworks, which are the SOTA methods for prompt compression, thereby optimized for both cost and latency. Further to these, LongLLMLingua improves retrieval quality by using a “question-aware coarse-to-fine compression method, a document reordering mechanism, dynamic compression ratios, and a post-compression subsequence recovery strategy to improve LLMs’ perception of the key information”. This has been found to be particularly useful with long context documents, where the LLM struggles with the “Lost in the middle” problem.

RECOMP: Using “compressors”, this method proposes using textual summaries as context for the LLMs to reduce the cost and improve the quality of inference. The two compressors presented are a) “extractive compressor”: selects the relevant sentences from retrieved documents and b) “abstractive compressor”: creates summaries from synthesized information from multiple documents.
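To illustrate the extractive idea only, the sketch below keeps the sentences that overlap most with the query. Note the loud assumption: the word-overlap score is a simple stand-in for the relevance scoring a real compressor would use, and `extractive_compress` is a name invented for this example rather than anything from RECOMP.

```python
import re

def extractive_compress(query, documents, max_sentences=2):
    """Keep the sentences that share the most words with the query."""
    query_terms = set(re.findall(r"\w+", query.lower()))
    sentences = []
    for doc in documents:
        # Naive sentence splitting on terminal punctuation.
        sentences.extend(s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip())

    def overlap(sentence):
        return len(query_terms & set(re.findall(r"\w+", sentence.lower())))

    by_score = sorted(range(len(sentences)), key=lambda i: overlap(sentences[i]), reverse=True)
    keep = sorted(by_score[:max_sentences])  # restore original reading order
    return " ".join(sentences[i] for i in keep)

docs = [
    "Paris is the capital of France. It rains often in spring.",
    "The Eiffel Tower is in Paris. Croissants are popular.",
]
compressed = extractive_compress("What is the capital of France?", docs)
print(compressed)
```

Four sentences go in and two come out, so the LLM sees roughly half the tokens while the sentence that answers the question is preserved.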

Walking Down the Memory Maze: This method introduces the concept of MEMWALKER, whereby the context is first processed into a tree format by chunking the context and then summarizing each segment. To answer a query, the model navigates the tree structure with iterative prompting to find the segment that contains the answer to the specific question. This method has been shown to do particularly well on longer sequences.

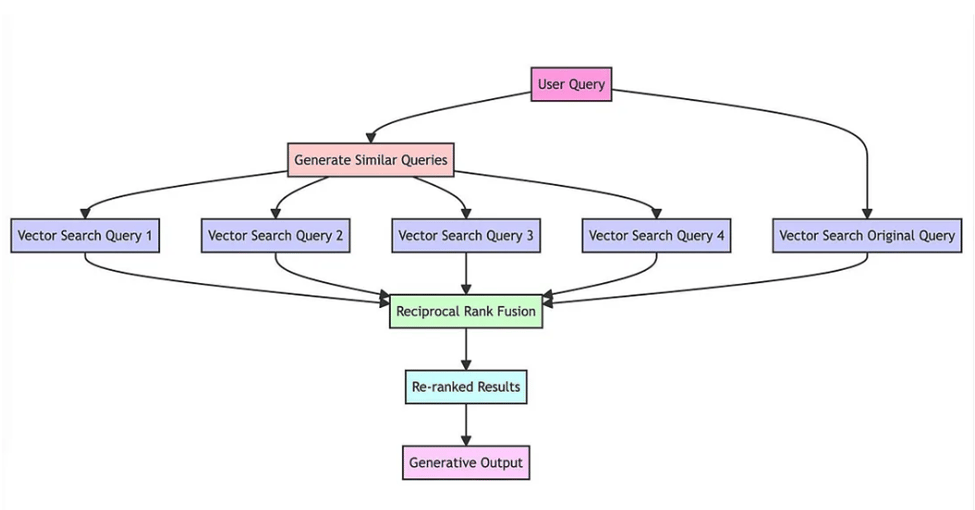

RAG-fusion:

This is an approach introduced by Adrian Raudaschl, using Reciprocal Rank Fusion (RRF) and generated queries to improve retrieval quality. It works by using an LLM to generate multiple queries from the user's input, running vector searches for all of these queries, and then aggregating and refining the results with RRF. As the final step, the LLM uses the queries and the reranked list to generate a final output. This method has been shown to give responses with better depth, and hence has been quite popular.
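The fusion step is easy to sketch. In RRF, each document earns 1 / (k + rank) from every ranked list it appears in, and the scores are summed. The constant `k=60` is a commonly used default and is an assumption here, as are the toy document ids; the query generation and vector search steps are left out.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse several ranked lists of document ids into a single ranking."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            # Higher positions contribute more; k dampens the gap between ranks.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical search results for three generated queries.
results_per_query = [
    ["a", "b", "c"],
    ["b", "a", "d"],
    ["b", "c", "a"],
]
fused = reciprocal_rank_fusion(results_per_query)
print(fused)
```

Document `b` comes out on top because it ranks first or second for every generated query, even though it is not first for the original one.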

Refinement

CRAG (Corrective Retrieval Augmented Generation):

This method aims to address the limitations around sub-optimal retrieval from static and limited data, using a lightweight retrieval evaluator and then using external data sources (such as web search) to supplement the final generation step. The retrieval evaluator estimates a confidence degree by evaluating the retrieved documents vs the input, which then triggers the downstream knowledge actions.
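As a rough sketch of how a confidence estimate could trigger different actions, consider the function below. The thresholds, the action names and the rule for combining per-document scores are all assumptions made for illustration; in practice the evaluator is a trained model and the cut-offs would be tuned.

```python
def choose_action(confidence_scores, upper=0.7, lower=0.3):
    """Map per-document confidence scores to a downstream knowledge action."""
    if any(score >= upper for score in confidence_scores):
        # At least one document looks trustworthy: refine and use what was retrieved.
        return "use_retrieved"
    if all(score <= lower for score in confidence_scores):
        # Nothing retrieved looks relevant: fall back to an external source.
        return "web_search"
    # In-between case: combine retrieved documents with external results.
    return "combine"

print(choose_action([0.9, 0.1]))
print(choose_action([0.1, 0.2]))
print(choose_action([0.5, 0.2]))
```

The three calls print `use_retrieved`, `web_search` and `combine` respectively.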

The reported results are impressive and indicate a significant improvement in the performance of RAG-based approaches.

FLARE (Forward Looking Active Retrieval):

We shall end this post with some FLARE. This method is particularly useful when generating long-form text: it generates a temporary next sentence and decides to perform retrieval if that sentence contains low-probability tokens. This can be extremely powerful for deciding when to retrieve, thereby reducing hallucinations and factually incorrect output.
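The retrieval trigger can be sketched like this. The threshold value and the `retrieve` and `regenerate` callables are hypothetical placeholders for a real retriever and LLM call, and the token probabilities are made up.

```python
def needs_retrieval(token_probs, threshold=0.4):
    """True if any token in the draft sentence falls below the confidence threshold."""
    return any(p < threshold for p in token_probs)

def flare_step(draft, token_probs, retrieve, regenerate, threshold=0.4):
    """Keep a confident draft sentence; otherwise retrieve and regenerate it."""
    if not needs_retrieval(token_probs, threshold):
        return draft
    evidence = retrieve(draft)   # the draft itself serves as the search query
    return regenerate(evidence)  # rewrite the sentence grounded in the evidence

# Toy stand-ins for a retriever and an LLM call.
fake_retrieve = lambda query: ["retrieved passage"]
fake_regenerate = lambda evidence: "sentence rewritten with " + evidence[0]

confident = flare_step("Paris is in France.", [0.9, 0.8, 0.95], fake_retrieve, fake_regenerate)
uncertain = flare_step("The treaty was signed in 1742.", [0.9, 0.2, 0.7], fake_retrieve, fake_regenerate)
print(confident)
print(uncertain)
```

Only the second draft contains a low-probability token, so only that sentence pays the cost of a retrieval round trip.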

So there we have it! While retrieval can be tricky given the number of variables to optimize for, when done right by mapping to the use case and data type, it can lead to significant improvements in cost saving, latency and accuracy.

Next, we shall look at Generation and try to wrap our heads around some evaluation strategies. Until then, happy reading!