Back on it after the short summer break, and Microsoft open-sourcing their GraphRAG code is all the rage. This matters for two reasons: firstly, I have been wanting to write about knowledge graphs for a while now, and secondly, it is pretty cool that we can bring the power of knowledge graphs to RAG, despite some compute-related downsides - more on that later.

What is a knowledge graph then? Ever feel like your brain is a tangled mess of random facts? A knowledge graph is the digital equivalent of our mind, without the forgetfulness and periodic erroneous decision making.

We can think of them as collections of triplets, each with a subject, a predicate and an object. They represent information as a network of interconnected entities and their relationships. At their core, knowledge graphs utilize a graph-based data model where nodes represent entities (subjects and objects such as people, places, or concepts), and edges represent the relationships or predicates between these entities. Knowledge graphs are the data world's answer to the classic "six degrees of separation" game, but instead of connecting you to Kevin Bacon, they're linking every conceivable entity in a vast, multidimensional web of relationships.
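To make the triplet idea concrete, here is a minimal sketch in plain Python. The facts, the `Triple` type and the `neighbors` helper are all made up for illustration; a real knowledge graph would typically live in a graph database or an RDF store rather than a dictionary.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical facts, invented purely for illustration.
triples = [
    Triple("Ada", "works_at", "Acme"),
    Triple("Acme", "located_in", "London"),
    Triple("Ada", "knows", "Grace"),
]

# Adjacency list: each subject node maps to its outgoing (predicate, object) edges.
graph: dict[str, list[tuple[str, str]]] = {}
for t in triples:
    graph.setdefault(t.subject, []).append((t.predicate, t.obj))


def neighbors(node: str) -> list[tuple[str, str]]:
    """Return the outgoing edges of a node as (predicate, object) pairs."""
    return graph.get(node, [])


print(neighbors("Ada"))
```

Nodes are the subjects and objects, and every edge carries its predicate as a label, which is all the graph-based data model really asks for.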

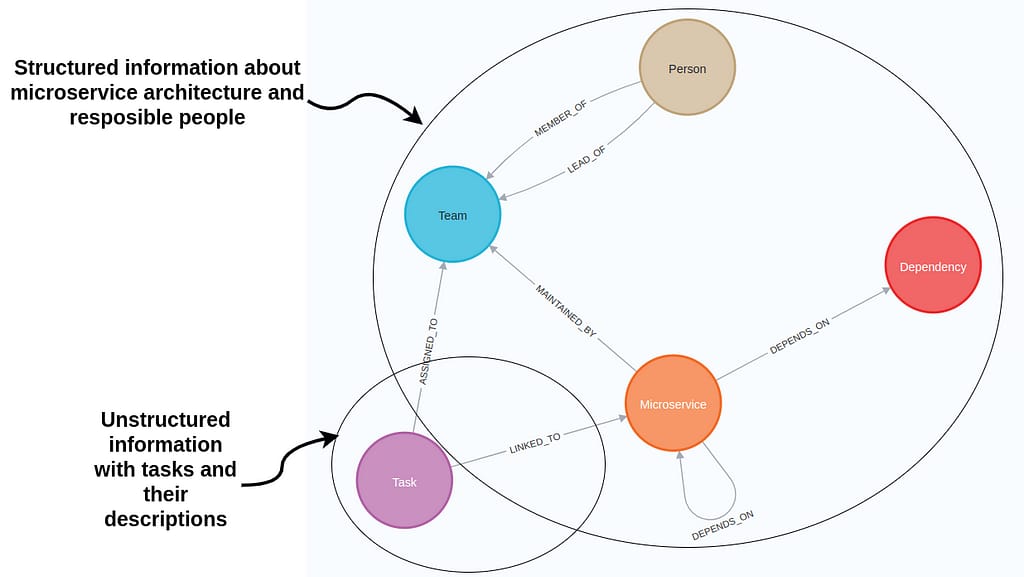

The true magic of knowledge graphs lies in their almost supernatural ability to play connect-the-dots with your data - they are flexible and extensible. They can seamlessly integrate diverse data sources, accommodating both structured and unstructured data. This integration creates a unified view of information, often revealing hidden connections and patterns. Advanced knowledge graphs incorporate ontologies and semantic schemas (big words for "really smart organization"), which provide a formal specification of concepts within a domain and their interrelationships. This semantic layer enhances the graph's capability for logical reasoning, allowing for operations like transitive closure, which can infer new relationships based on existing ones. For instance, if the graph contains information that "John lives in San Francisco" and "San Francisco is in California," it can infer that "John lives in California," even if this fact wasn't explicitly stated.
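The John example can be sketched with a tiny rule engine. This is a toy under stated assumptions: the predicate names `lives_in` and `is_in` and the two hand-written rules are mine, and real systems would lean on an ontology language and a reasoner instead of a Python loop.

```python
Fact = tuple[str, str, str]


def infer(facts: set[Fact]) -> set[Fact]:
    """Apply two hand-written rules until no new facts appear.

    Rule 1: (a, is_in, b) and (b, is_in, c)    => (a, is_in, c)
    Rule 2: (x, lives_in, b) and (b, is_in, c) => (x, lives_in, c)
    """
    known = set(facts)
    while True:
        new = {
            (s, p, o2)
            for (s, p, o) in known
            for (s2, p2, o2) in known
            if p in ("is_in", "lives_in") and p2 == "is_in" and o == s2
        }
        if new <= known:  # fixed point reached, nothing left to derive
            return known
        known |= new


facts = {
    ("John", "lives_in", "San Francisco"),
    ("San Francisco", "is_in", "California"),
    ("California", "is_in", "USA"),  # extra fact, added for illustration
}
inferred = infer(facts) - facts
print(sorted(inferred))
```

None of the inferred facts were stated explicitly; they fall out of chaining the existing edges until nothing new can be derived.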

How about the use cases then? The three-dimensional framework below, covering latency, persistence, and query types, is a nice way to look at knowledge graphs' versatility across diverse use cases. In analytics, they excel at balancing ad-hoc inquiries with systematic analysis, enabling everything from real-time customer insights to complex financial modeling. Applications leverage knowledge graphs' capacity for persistent data storage and rapid retrieval, crucial in sophisticated recommendation systems and user-centric interfaces. For algorithmic purposes, they shine in their ability to handle systematic queries, facilitating advanced natural language processing and supply chain optimizations. This further underscores our earlier reference to their flexibility, being equally adept at handling ephemeral, real-time analytics and supporting enduring, systematic data structures.

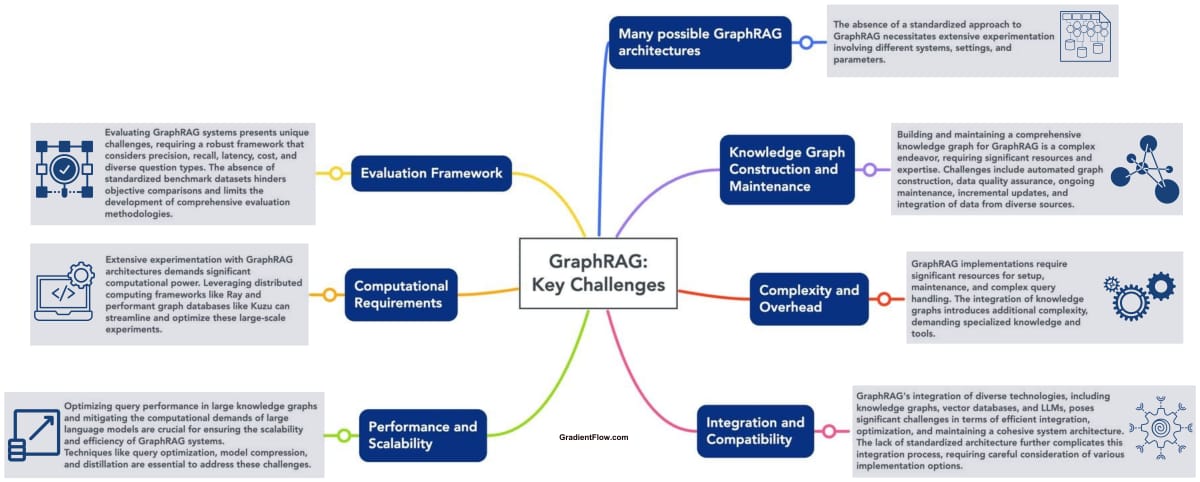

Now that we know why knowledge graphs were all over your feed late last year, this brings us to today, where GraphRAG is the way! Microsoft Research announced GraphRAG back in April this year, and it was well received. They have now open-sourced the code, which has made everyone sit up and take notice.

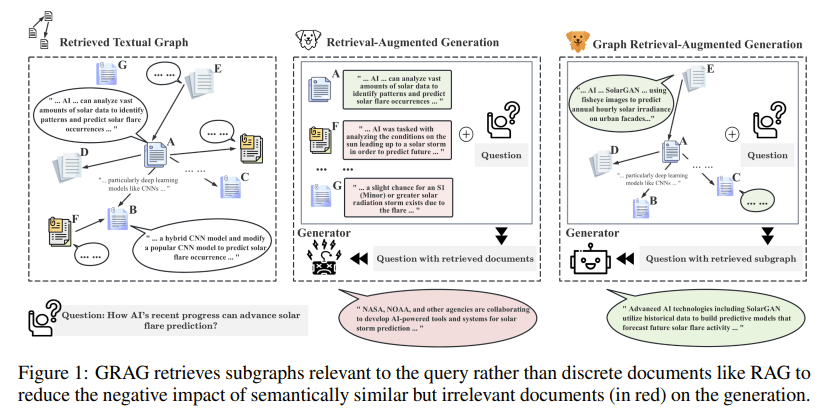

What exactly is GraphRAG (GRAG) then? The GraphRAG paper is a good place to start:

Isn’t that some technical stuff that could do with an analogy? So here is one, born out of a brainstorming session with Sonnet 3.5:

Let’s say GRAG is the best urban planner of the information world. While traditional RAG methods are like tourists asking random pedestrians for directions, GRAG is the mayor who knows every nook and cranny of the city. It doesn't just look at individual buildings (or pieces of information); it sees the entire cityscape, keeping in mind the intricate network of streets, neighborhoods, and communities. This bird's-eye view allows GRAG to navigate these streets with the efficiency of a seasoned cab driver who knows all the shortcuts.

What makes GRAG impressive is its ability to identify and explore the most relevant neighborhoods (subgraphs) for any given question. Using its "soft pruning" technique (think of it as gentrification, but for irrelevant information), GRAG cleans up these neighborhoods, removing the clutter and highlighting the most relevant features. Then, with its dual prompting approach - speaking both the language of city planning (graph structure) and local dialects (textual information) - GRAG crafts responses that capture not just the facts, but the rich context and interconnections of the urban landscape. Think of this as akin to getting insider tips from a longtime resident who's also an expert historian, giving you insights way better than any tourist guidebook could offer.

Microsoft’s “From Local to Global” paper applies this to query-focused summarization, with knowledge graphs being summarized in natural language for retrieval. Continuing with our earlier analogy, let’s consider GraphRAG to be a sophisticated urban planning and information retrieval system for the knowledge base (aka the city). This system operates in two main phases: Indexing and Retrieval.

In the Indexing phase, the city planners (LLMs) first divide the urban landscape (source documents) into manageable districts (text chunks). They then survey each district, identifying key landmarks and connections (element instances) - think of this as mapping out important buildings, parks, and the roads connecting them. These individual elements are then summarized into concise descriptions (element summaries), like creating brief profiles of each neighborhood landmark. Next, using advanced urban analysis tools (community detection algorithms like Leiden), the planners group these elements into natural communities, much like identifying distinct neighborhoods or boroughs within the city. Finally, they craft detailed "neighborhood reports" (community summaries) for each of these communities, providing a rich, multi-layered guidebook to the entire city. This indexing phase creates a comprehensive, hierarchical understanding of the urban landscape, from individual buildings to entire districts.
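Stripped of the city metaphor, the indexing steps above can be sketched in a few lines of Python. Treat this as a toy and not as Microsoft's implementation: the capitalized-word "entity extractor" and the `summarize` function are stubs standing in for LLM calls, whitespace-separated words stand in for tokens, and plain connected components stand in for Leiden (which would also produce a hierarchy of communities, something this sketch skips).

```python
import re
from itertools import combinations


def chunk(text: str, size: int) -> list[str]:
    """Split text into chunks of `size` whitespace-separated words (a stand-in for tokens)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def extract_entities(chunk_text: str) -> set[str]:
    """Stub for the LLM extraction step: treat capitalized words as entities."""
    return set(re.findall(r"\b[A-Z][a-z]+\b", chunk_text))


def build_graph(chunks: list[str]) -> dict[str, set[str]]:
    """Link entities that co-occur in the same chunk."""
    graph: dict[str, set[str]] = {}
    for c in chunks:
        entities = sorted(extract_entities(c))
        for e in entities:
            graph.setdefault(e, set())  # keep isolated entities as nodes too
        for a, b in combinations(entities, 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def communities(graph: dict[str, set[str]]) -> list[list[str]]:
    """Connected components, used here as a crude stand-in for Leiden."""
    seen: set[str] = set()
    result = []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node not in component:
                component.add(node)
                stack.extend(graph[node] - component)
        seen |= component
        result.append(sorted(component))
    return result


def summarize(community: list[str]) -> str:
    """Stub for the LLM-written community summary."""
    return "Community of " + ", ".join(community)


text = "Alice met Bob in Paris. Carol met Dave in Tokyo."
chunks = chunk(text, size=5)
graph = build_graph(chunks)
reports = [summarize(c) for c in communities(graph)]
print(reports)
```

Changing `size` changes which entities end up in the same chunk and therefore which edges exist, which is a small-scale reminder of why chunking choices matter for the resulting graph.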

When it comes time for Retrieval, GraphRAG shines in handling citywide inquiries. Instead of dispatching surveyors to random locations, it consults the pre-prepared neighborhood reports. Each community contributes its local perspective to the broader question, like local town halls holding simultaneous meetings (community summaries to community answers). These local insights are then synthesized into a comprehensive city report (global answer), much like an urban planner combining feedback from various boroughs to understand citywide trends. This approach proved particularly effective for "global" questions about the entire urban landscape, outperforming traditional methods in both the breadth and diversity of insights offered.
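The town-hall step is essentially a map-reduce over community summaries, and a rough sketch looks like this. Everything here is an assumption for illustration: `map_step` uses keyword overlap where the real system would ask an LLM for a partial answer and a helpfulness score, and the reduce step simply joins the best partial answers instead of prompting an LLM to write the final response.

```python
def map_step(question: str, summary: str) -> tuple[str, int]:
    """Stub for the per-community LLM call: returns (partial answer, helpfulness score)."""
    q_words = set(question.lower().split())
    s_words = set(summary.lower().split())
    # The partial "answer" is just the summary itself in this toy version.
    return summary, len(q_words & s_words)


def global_answer(question: str, summaries: list[str], top_k: int = 2) -> str:
    """Map over every community, drop unhelpful answers, then reduce the rest."""
    partials = [map_step(question, s) for s in summaries]
    useful = [p for p in partials if p[1] > 0]           # filter out zero-score answers
    useful.sort(key=lambda p: p[1], reverse=True)        # most helpful first
    return " | ".join(answer for answer, _ in useful[:top_k])


# Hypothetical community summaries for our imaginary city.
summaries = [
    "transport buses trams cycling",
    "parks gardens green spaces",
    "housing prices rents transport links",
]
answer = global_answer("how do transport and housing interact", summaries)
print(answer)
```

Because each community is scored independently, the map step can run in parallel, which is part of what makes this workable for questions that span the whole corpus.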

An interesting result from the paper pointed to how crucial text chunk sizes can be. For instance, using 600-token chunks resulted in more extracted entities than using 2400-token chunks (see fig above). But this comes with trade-offs, as smaller chunks may lead to a loss of context (something we discussed in our Advanced RAG series earlier).

As we have discussed a number of times previously, we are always doing a balancing act between cost, latency, privacy and accuracy when it comes to LLMs. The performance from graphs comes with a larger token count and inference time, so the adoption strongly depends on the use case and whether graph based summarization is the optimal way for it.

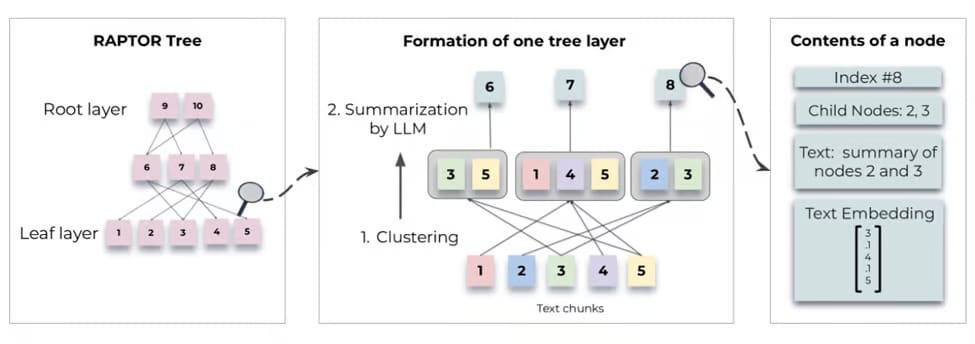

Those with a keen eye may question how GraphRAG differs from RAPTOR, which we discussed in my post on Indexing as part of the Advanced RAG series. As a quick recap, and staying with the earlier analogy, think of RAPTOR as an architect designing a tall building with multiple floors. Each floor represents a different level of detail about the city:

The ground floor has very detailed information about specific locations.

As you go up, each floor provides a more summarized view, covering larger areas.

The top floor gives a bird's-eye view of the entire city.

When answering a question, RAPTOR moves up and down this building, gathering information from different floors as needed. It's particularly effective for complex questions that might need to combine specific details with broader context.
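The building-with-floors picture can be sketched as a layered list of summaries. This is a simplification in the spirit of RAPTOR and not its actual algorithm: grouping adjacent nodes stands in for clustering by embeddings, `summarize` is a stub for an LLM call, and the leaf texts are invented.

```python
def summarize(texts: list[str]) -> str:
    """Stub for an LLM summary: keep only the first word of each child."""
    return " ".join(t.split()[0] for t in texts)


def build_tree(leaves: list[str], fanout: int = 2) -> list[list[str]]:
    """Build floors bottom-up: levels[0] is the ground floor, levels[-1] the top floor."""
    if fanout < 2:
        raise ValueError("fanout must be at least 2 so each floor is smaller than the last")
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        # Group adjacent nodes (a stand-in for embedding-based clustering).
        parents = [summarize(current[i:i + fanout]) for i in range(0, len(current), fanout)]
        levels.append(parents)
    return levels


# Hypothetical ground-floor chunks.
leaves = [
    "station timetable details",
    "museum opening hours",
    "harbour ferry routes",
    "market stall listings",
]
levels = build_tree(leaves)
for floor, nodes in enumerate(levels):
    print(floor, nodes)
```

Retrieval can then pull nodes from any floor, mixing detailed leaves with higher-level summaries depending on the question.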

Key Differences - GraphRAG vs RAPTOR:

GraphRAG focuses on connections between different parts of the city (relationships between entities), while RAPTOR organizes information in levels of increasing abstraction.

GraphRAG is best for questions needing a comprehensive view of the whole city, showing how everything interrelates. RAPTOR excels at questions that require piecing together specific details from different parts of the city while maintaining broader context.

GraphRAG's strength is in showing the diversity and interconnectedness of the city. RAPTOR's strength is in efficiently navigating between detailed and summary information.

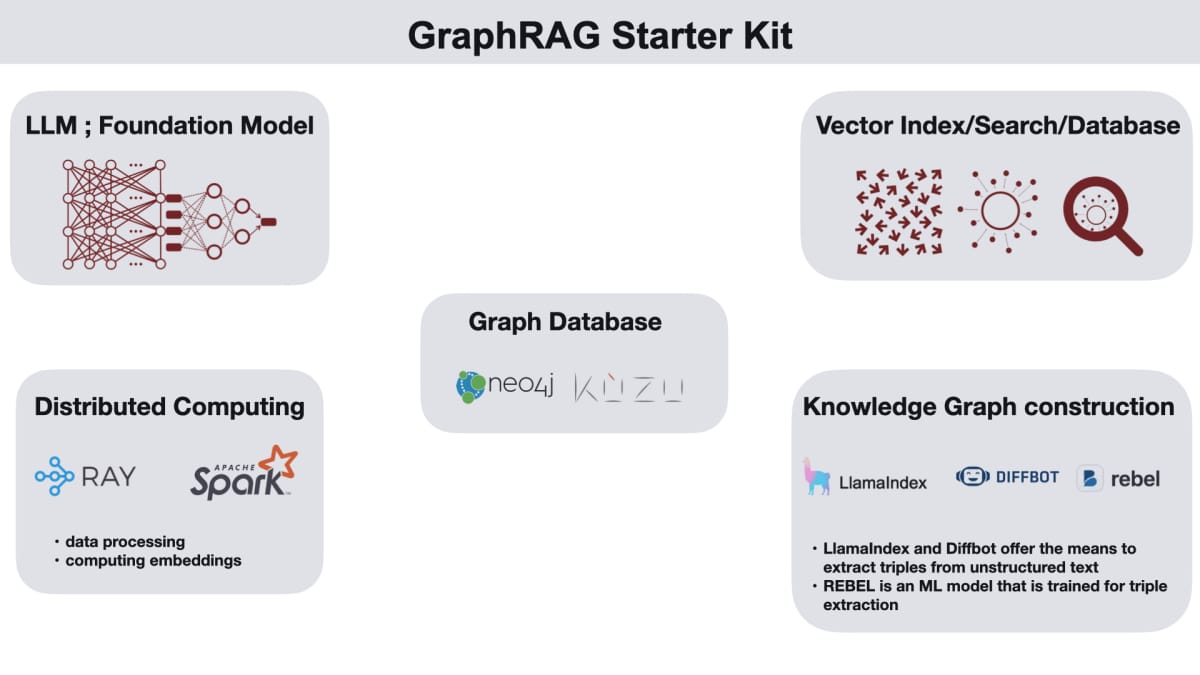

So suppose, after all the deliberation and trial runs, we have decided GraphRAG is the way. Where does one begin? I've got you: the following is a decent starter kit for the uninitiated:

Better still, with the GraphRAG code now open sourced, !pip install graphrag.

Until next time, happy reading!

PS: If you have made it this far, I would love to hear from you. Please share your thoughts and ideas in the comments section or you can find me at:

LinkedIn: Divyanshu Dixit